This blog post is one of six keynotes by NGINX team members from nginx.conf 2017. Together, these blog posts introduce the NGINX Application Platform, new products, and product and strategy updates.

The blog posts are:

- Keynote: “Speeding Innovation”, Gus Robertson (video here)

- NGINX Product Roadmap, Owen Garrett (video here)

- Introducing NGINX Controller, Chris Stetson and Rachael Passov (video here)

- Introducing NGINX Unit, Igor Sysoev and Nick Shadrin (video here, in‑depth demo here, integration with the OpenShift Service Catalog here)

- This post: The Future of Open Source at NGINX, Ed Robinson (video here)

- NGINX Amplify is Generally Available, Owen Garrett (video here)

Ed Robinson: Good morning, everyone. Welcome to Day 2 of nginx.conf. As we sit here in this hotel room, there’s a hurricane storming towards Florida, and Texas is still cleaning up after the last hurricane. I read last night that the sun has just sent out an enormous solar flare that’s larger than any solar flare in the last ten years. And even here in peaceful Portland, the mountains are on fire and it’s raining ash.

I suggest to you that there’s no better place to be today than in this room, learning about open source NGINX. We’ve got a jam‑packed morning for you. When we put together the program for nginx.conf, we break it into two parts. Day 1 is always about enterprise content; it’s about what we’re doing for enterprises.

Yesterday, you heard about NGINX Controller, and the new stuff we’re doing there. As we balance things, Day 2 is always about open source. In this first session, we’re going to look at open source and the enterprise, bringing those two things together.

We’re also really lucky that we have Elsie Phillips from CoreOS to talk about open source business models, and Kara Sowles from Puppet on building an exothermic community.

But first, I want to explore how open source is changing and reshaping enterprises, and reshaping the future of what’s possible to build inside enterprises.

I’m going to start with a very simple proposition: for enterprises, the future is built on open source. Let’s just think about that for a second. In the last 10 years, every innovation that enterprises have adopted has had its roots in open source. From cloud computing to microservices, to containers, to databases, and to the new languages like Node.js and Golang: they all start in open source.

Enterprises are starting to listen. They’re starting to embrace open source. We heard from Red Hat, yesterday, that 90% of the Fortune 500 are using Red Hat products and services. That was unheard of 25 years ago.

There are three major trends behind this. Let’s look at those three things very quickly.

First of all, think about what enterprise compatibility was like 25 years ago – 25 years ago was important, because that’s when enterprises first started adopting open source, and I’m sure there were many people in this room who were driving this, who were reaching out and trying different things. People started adopting open source because it solved problems that closed source wasn’t addressing.

The minute people started looking at open source, the closed source vendors tried to stop us. They built walls to try to stop open source from getting into enterprises. The primary objection was: open source is risky and it’s not enterprise‑ready.

There are so many fantastic quotes from that era – I’ve got a couple, and Elsie has slides and slides of interesting quotes. Bill Gates, from Microsoft, said that open source is “good for hobbyists and for enthusiasts.”

It was also famously said that open source is like a free puppy: I mean sure, it’s cute, it’s got big paws, and floppy ears, and droopy eyes. But you take this puppy home, and you’ve got to feed it, you’ve got to train it, and then you have to clean up after it.

But guess what? We took that puppy home, and that puppy grew up to be a wolf. It’s got teeth, it’s got claws, and it’s got its sights set firmly on enterprise software, and it’s not going to stop.

Twenty‑five years later, we have enterprise compatibility. Everything has changed. Enterprises now have choice that they never had before. Companies like NGINX – we’ve invested an enormous amount of time. Sure, you can download the source code. But if you’re in an enterprise, now you’ve got packaged software that’s completely tested, and backed up with professional services, with training, and with support that has won Stevie Awards two years in a row.

What’s more, we have an army of developers and operations staff in the community who’ve grown up with open source, who’ve embraced open source right from the beginning. Those people are ready to take it into the enterprise.

Open source in the enterprise is now proven. It’s now ready, and nothing is going to stop it.

In the last 25 years, closed source has stood still while open source has invested. They’ve invested in software that works everywhere.

If you look at NGINX, no matter what you’ve got, we’ve got you covered: we work on‑premises, we work on public clouds, private clouds, hybrid clouds – any kind of cloud you want – and we work on containers as well.

If you’re doing something like microservices, suddenly the tables have turned. Open source is now less risky than closed source because we invented this technology, and we battle‑proved it, and we delivered it to the enterprise.

A second driver: closed source is still stuck in a three‑year product cycle. If you want to have a really clear example of this, think of Microsoft Office. Inside Microsoft, Office is kind of known as a train that always arrives on time, every three years. Like clockwork since 1997, Office has released every three years – except for one of them which was four years, and you can kind of guess: people got pretty unhappy about that.

Why does it ship every three years? It’s great for license renewals, and it’s great for maximizing the amount of money that you can get from customers, but it’s lousy for delivering innovation.

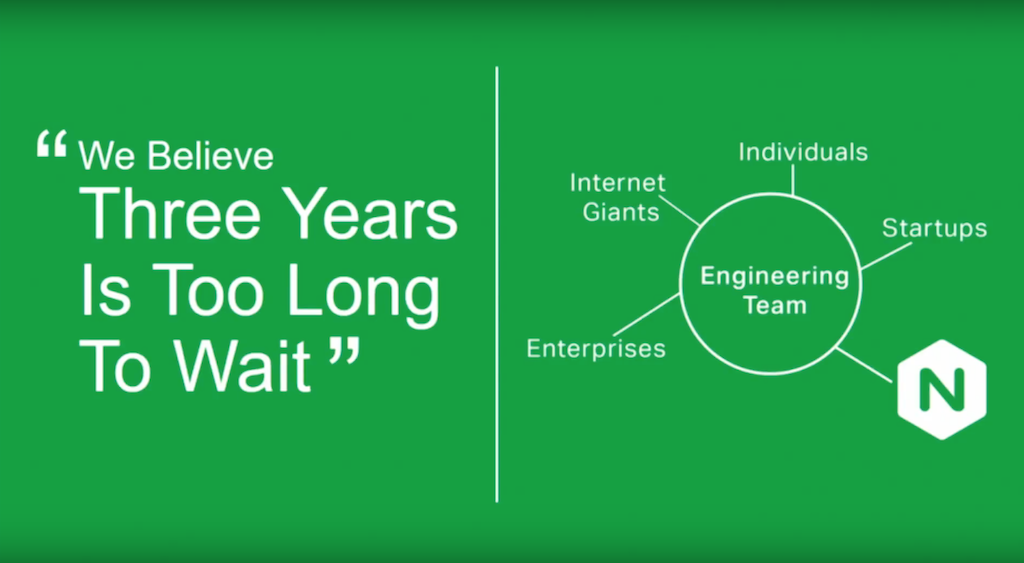

We believe there’s a better way. We believe that three years is too long to wait for innovation. Innovation doesn’t happen in three‑year cycles. The future is happening now.

Our open source approach is fundamentally very, very different from closed source. We take bug reports, idea submissions, and code submissions from amateurs, from professionals, from students, from professional developers, from architects, from individual development companies, from startups, from mid‑sized companies, from enterprises, and from the largest Internet giants in the world today.

We take those ideas. We curate them through our engineering team. We make sure the ideas align. We argue about them. We make sure that they align with our product vision and with everything else that we’re doing in the product.

Then we release very, very rapidly in stable and mainline branches, and also in our NGINX Plus branches. We deliver innovation at the speed that enterprises and businesses are asking for.

You can adopt it at whatever rate you wish. You can adopt it at your own speed. We fundamentally give more options to enterprises and to companies.

If you think about it, as you’re adopting this technology, new technology is always very, very complex. A lot of companies will look to platforms to simplify this and to reduce risk. As enterprises look at platforms, they see them as a set of best practices, patterns, guidance, and software that work better together.

The challenge with closed source platforms is that you are always locked in. Closed source companies have to protect their intellectual property (IP). They have to protect their own developers, their own engineers from being tainted by other people’s IP. Fundamentally, they try to keep everyone locked into their platform.

A really simple kind of consumer example of this is iTunes. If you’ve got an Android phone, it’s very, very hard to use iTunes. Why is that? Why have they not made those two platforms work together? This kind of lock‑in creates hostages, not happy customers.

We believe there is a better way. With open source, we take a fundamentally different approach to platforms. Yes, we’re building our own platform. Later on today, you’re going to hear from Igor Sysoev and Nick Shadrin, who are going to show you NGINX Unit, which is a core part of our new NGINX Application Platform. But we also integrate with every other platform as well. This is baked into our DNA as an open source company.

We build on AWS, on Azure, on Google, on GCP. We also build on Kubernetes, and even Istio – we’re announcing integration for that today. On‑premises, private cloud, containers, hybrid cloud, any kind of cloud that you want: we believe that platforms should free you and not lock you in.

NGINX is very excited by open source. This is baked into our DNA, and we believe that open source is a better way to deliver software.

I’m going to pass over now to Owen Garrett, who is NGINX’s Head of Products. He’s going to talk about our roadmap in the next year and how we’re going to deliver on that promise to our customers and our users. Thank you for your time.

Owen Garrett: Thank you. And yes, we firmly believe that open source is absolutely the right foundation for everything that we do at NGINX.

What makes a successful open source project? Touching on some of the aspects, we measure the success of the NGINX project on a number of criteria: on its adoption, the influence the project has, the footprint that you see, and the value that NGINX brings.

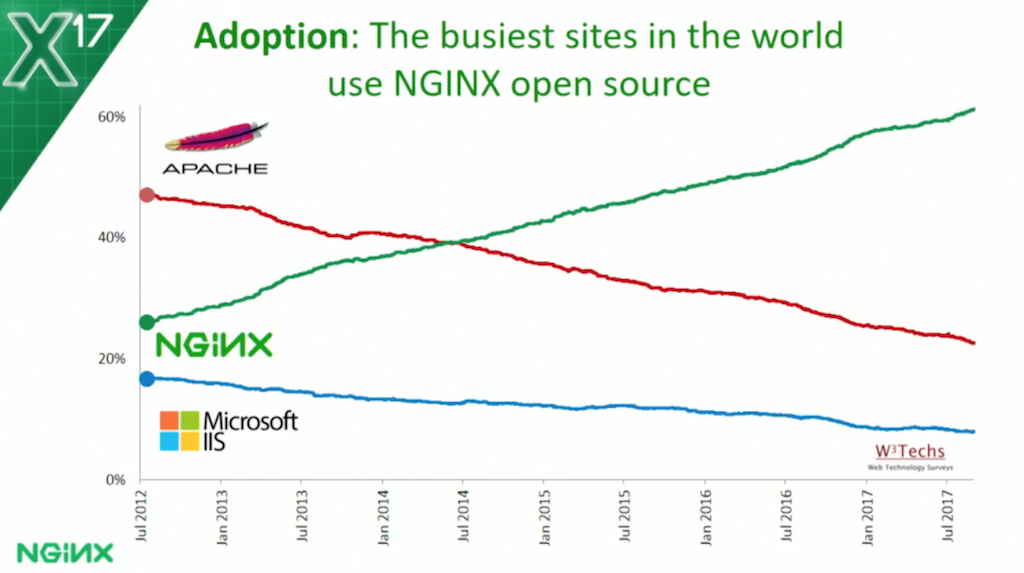

In terms of adoption, let me give you a different cut of some of the figures we shared with you yesterday – visits to the 10,000 busiest sites in the world.

Five years ago, Apache held almost 50% market share. It was the de facto standard. NGINX had 25% – a little bit less, actually.

Over the last five years, as we’ve innovated and developed the NGINX open source project, [NGINX’s] proportion of users and the busiest sites in the world has more than doubled, while other web servers and platforms have halved. Our adoption is staggering.

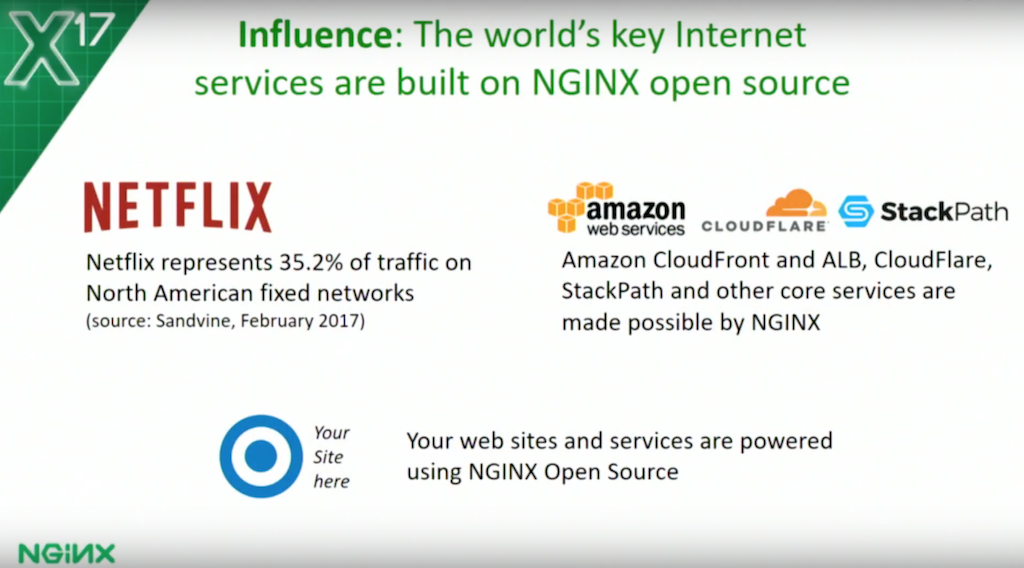

Our influence – Netflix powers over a third of the US Internet traffic. Their platform is built on NGINX.

Almost every major cloud platform and CDN platform is built or made possible by the technology in NGINX.

Amazon’s CloudFront, some of their load‑balancing technology, and market‑leading CDNs like CloudFlare and StackPath, are all made possible purely by the availability of the NGINX open source project and the permissive licenses that we wrap around that.

In terms of influence – the sites and services that you’re building, that are going to change the Internet and business in the future – they’re powered using NGINX open source at the core.

Our footprint: almost 1.5 million physical servers are directly connected to the public Internet and running NGINX software.

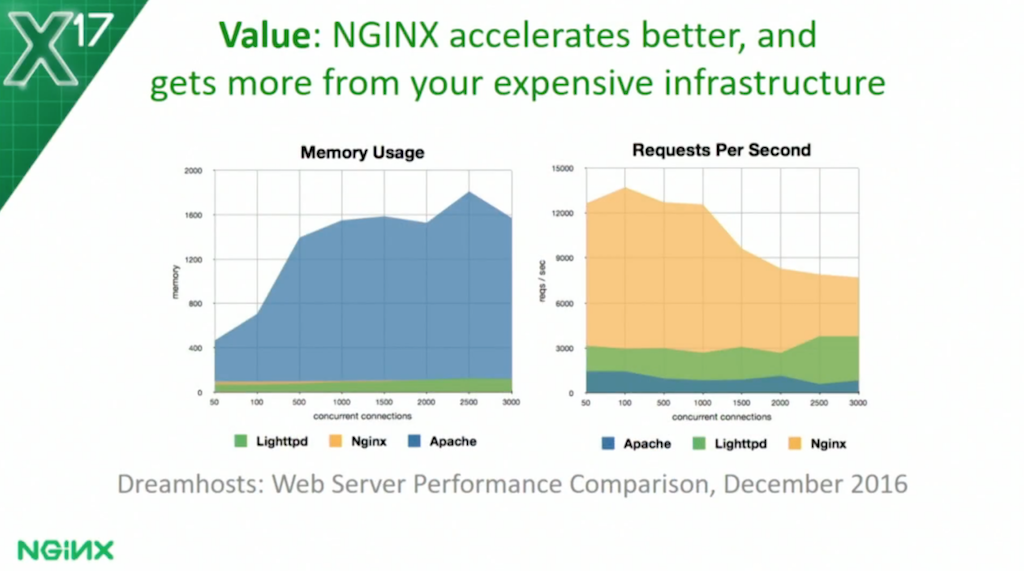

In terms of the business value, what does NGINX unlock for you?

Greater performance, greater stability, and much less resource usage mean that your hosting costs – what it costs you and your partners to run your business – are dramatically lower. These cost savings are realized by the architecture of NGINX.

A phenomenally successful open source project with huge market share: we’re confident in saying that whether you’re watching a video on Netflix, you’re ordering a taxi from Uber, you’re conducting transactions on Amazon, or you’re accessing one of the millions of services hosted on the army of CDNs and infrastructure built on NGINX, you’ll be touching NGINX open source software.

Well over half of the Internet transactions happening right now are being accelerated or delivered by the NGINX open source project. That’s a fantastic testament to the quality of the engineering and the vision of the team who built, and are continuing to maintain, this critical part of modern Internet infrastructure.

At our core, we are open source first.

You might be forgiven for taking from the presentations and slides and things that we shared yesterday that we have a big focus on our enterprise and commercial community. But we are an open source company. We debate continually within our organization about every single product decision. There’s always a tension, balancing our commercial activities and the open source activities.

Our engineering team acts as a very healthy conscience. They fiercely defend the interests, and represent the views and the needs, of the community of NGINX open source users.

Igor Sysoev and his colleagues founded NGINX, Inc., the company, in 2011, six years ago. The key purpose of founding the company was to assure the future of the NGINX open source project through commercial activities, to provide the ability for us to develop, and to grow and foster our open source community.

We’ve seen great success. I don’t think anybody can argue about that: many of the features that have appeared in NGINX, the events we’ve done, the fact that this conference is only possible because of how we balance our commercial and our open source obligations.

In terms of investment in code: the code base has more than doubled, to a still very modest, compact, efficient, 190,000 or so lines of code.

In terms of features that we’ve delivered: about 70–75% of our engineering effort over the last 5 years – more so this year – has gone directly into the open source project, recently bringing you things like:

- Caching improvements that allow you to more effectively serve content and reduce the load in your upstream servers

- WebP support – there’s a session later on from one of our engineers touching on this

- Request mirroring – a very new feature we just brought out in the last open source release (I’ve been amazed by some of the users I talked to over the last day – how they’re leveraging this to do some really smart stuff)

- nginScript [now called the NGINX JavaScript module]

And there are other capabilities we’re still developing as part of the open source project.
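[Editor – As a minimal sketch of the request mirroring feature mentioned above, the configuration below copies each incoming request to a second group of servers while clients are still answered by the primary group. The `mirror` directive comes from the NGINX `ngx_http_mirror_module`; the upstream names and addresses are hypothetical placeholders and are not taken from the talk.]

```nginx
# Goes inside the http {} context of nginx.conf.

# Hypothetical production servers that answer real client traffic.
upstream backend {
    server 192.0.2.10:8080;
}

# Hypothetical test servers that receive a copy of each request.
upstream test_backend {
    server 192.0.2.20:8080;
}

server {
    listen 80;

    location / {
        # Send a copy of every request to the /mirror location below.
        mirror /mirror;
        proxy_pass http://backend;
    }

    location = /mirror {
        # Reachable only through the internal mirror subrequest.
        internal;
        proxy_pass http://test_backend$request_uri;
    }
}
```

Responses to the mirrored subrequests are ignored, so the test servers can fail or respond slowly without changing what the client receives from the primary group.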

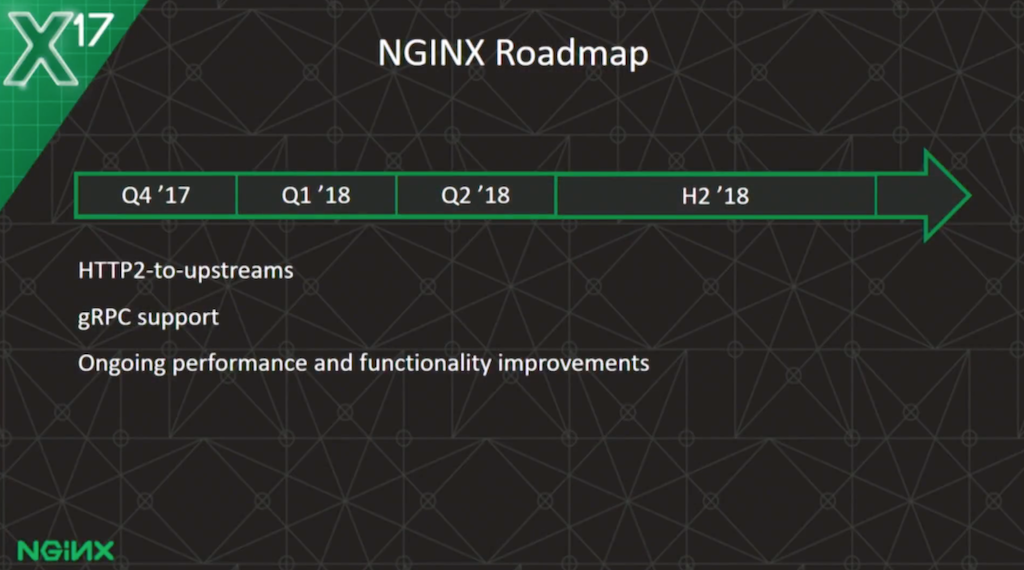

Unlike some of the other projects on our roadmap, NGINX the open source project is relatively mature. We look at our roadmap for this on, typically, about a three‑month cycle. We’re careful not to take on long‑term commitments that would impede how we can respond to the input and the needs of the community.

You see the areas of focus for our team through to the end of the year and into 2018:

- HTTP/2‑to‑upstreams is critical. It’s something we’ve committed to internally, to work on and develop.

- We’re collaborating with partners who’ve already contributed code. That will unlock the capability to fully support gRPC traffic, to terminate gRPC requests coming in, and then to multiplex them into other HTTP/2 connections upstream.

- An ongoing and continuing focus on performance and functionality improvements: caching, scalability, coping with large numbers of cores, large memory usage: these are all things we’re continually experimenting with, looking for new techniques and new ways to improve the NGINX open source project.
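[Editor – At the time of this talk, gRPC proxying was still on the roadmap. In later NGINX releases it is exposed through the `grpc_pass` directive of `ngx_http_grpc_module`. The following is a minimal sketch, assuming a hypothetical cleartext gRPC service listening on 127.0.0.1:50051; the address and port are placeholders.]

```nginx
# Goes inside the http {} context of nginx.conf.
server {
    # Accept HTTP/2 connections from gRPC clients (cleartext here for brevity).
    listen 80 http2;

    location / {
        # Forward gRPC calls to the hypothetical backend service.
        grpc_pass grpc://127.0.0.1:50051;
    }
}
```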

We rely closely on our community – our community of open source users, commercial partners, and some of the big Web monsters – to contribute patches, and suggestions, and inspiration back into our open source roadmap.

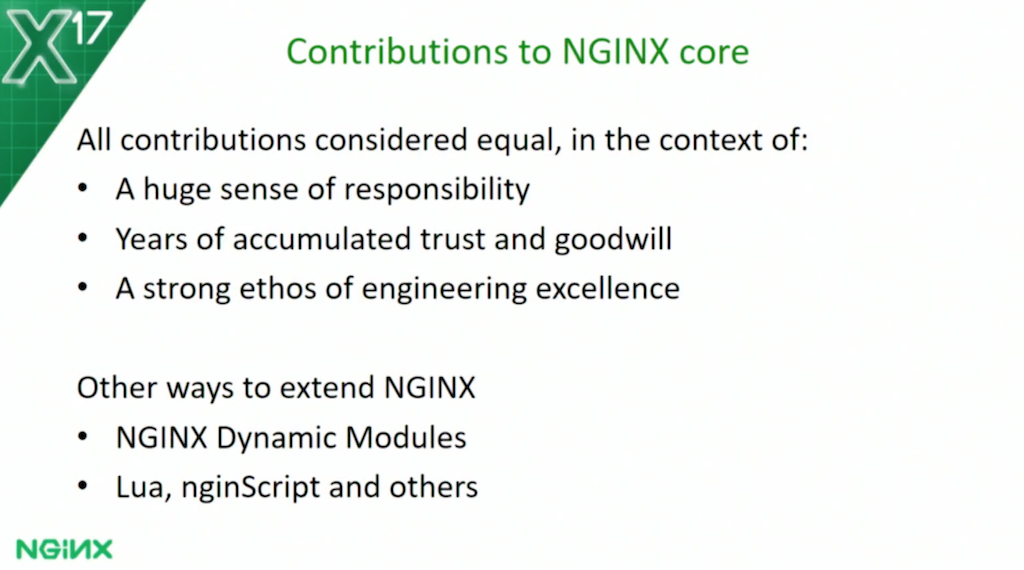

Sometimes it feels a little bit strange for a company that is so open source in its focus to appear, paradoxically, quite closed. There’s a good reason for that: our engineering team carries a huge burden of responsibility. We look at every single contribution that comes in, typically through the nginx-devel mailing list. As we consider it internally, we have to judge the contribution (and the interests of the user or the organization who made it) against the interests of the quarter of a billion websites running on this project.

When we’re powering over half of the world’s Internet transactions, if we go against the interests of the community, or if we bring code in that fails, or causes issues, that can potentially break a large part of the Internet. There’s no getting around that.

As we consider the roadmap, the capabilities, and the contributions, we have to evaluate them against a huge range of criteria: against the trust and goodwill we’ve built up, and against our own internal coding standards, our coding styles, and the ways we test and develop code.

That process is often invisible to our community, but that process is necessary to ensure that we can continue to deliver on the trust and goodwill we’ve earned, that we can continue to maintain the integrity of our open source project.

There’s an incredibly strong sense of engineering excellence. If any of you have been monitoring the mailing lists or have made code contributions, I hope you appreciate that.

For that reason, we’ve invested in ways to help you extend the functionality of NGINX without needing to modify the core. Last year, we iterated and developed the module architecture within NGINX to make it easier for you to create dynamic modules that you could link in directly to the core.
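[Editor – As a brief illustration of the dynamic module mechanism, a module compiled as a shared object is loaded with the `load_module` directive in the main context of nginx.conf. The module file shown is the nginScript (NGINX JavaScript) HTTP module; the relative path is an assumption that depends on how NGINX was packaged. Third‑party modules are typically compiled as shared objects by passing `--add-dynamic-module` to the NGINX `configure` script.]

```nginx
# Main (top-level) context of nginx.conf, before the events {} and http {} blocks.
# The path is resolved relative to the NGINX prefix directory; adjust it to
# wherever your packages install module .so files (assumed location).
load_module modules/ngx_http_js_module.so;

events {}

http {
    # Directives provided by the loaded module become available from here on.
}
```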

We know that technology alone is not enough to help you make the most of NGINX. We’ve invested in documentation, and the first NGINX dynamic module developer training course is being held tomorrow. I know 30 or 35 of you have secured places in this course. It’s part of our ongoing commitment to the community to help you make the most of what is very complex, very tight, very well‑refined technology.

The Lua module – many of our partners build technology on top of key third‑party modules, like the Lua module developed by the OpenResty team. Internally, we’re committed to supporting many of those key third‑party modules to the extent that we include them in our own internal build and test systems.

And we provide support to end users who have support subscriptions with us. With a warranty, we’ll ensure that the module compiles and works, and we ship a build with our supported software.

Internal projects like nginScript [now called the NGINX JavaScript module]: still under development. It’s got a long way to go, but it’s another way for you to extend the functionality of NGINX for things like the Perl module.

As we’ve grown, as the capability of the company has extended, we’ve started other open source initiatives at NGINX. I’d like to share three of them with you.

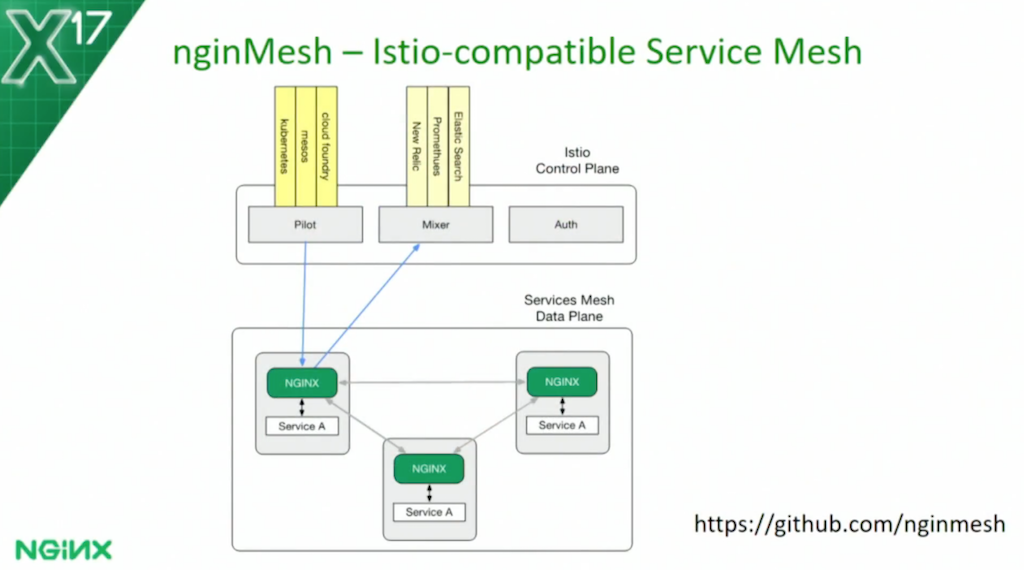

The first one – for those of you who were lucky enough to catch A.J. Hunyady and Sehyo Chang’s talk yesterday – is a project called nginMesh. nginMesh arose because of the Istio project – a joint collaboration between Google, IBM, and Lyft to build a service mesh platform on top of container orchestration platforms like Kubernetes.

Networking is hard for service‑mesh applications. We’re working with the Istio team to build the connectors necessary to configure and manage NGINX as a sidecar inside each container, driven by Istio’s pilot and mixer services, and to realize the vision of a service mesh.

It’s an open source project. It’s work in development, but you can see it on GitHub.

[Editor – NGINX is no longer developing or supporting the nginMesh project, which is now sponsored by the community. To learn about our current, free service mesh solution, visit NGINX Service Mesh. To learn about F5’s Istio‑based service mesh solution, visit Aspen Mesh.]

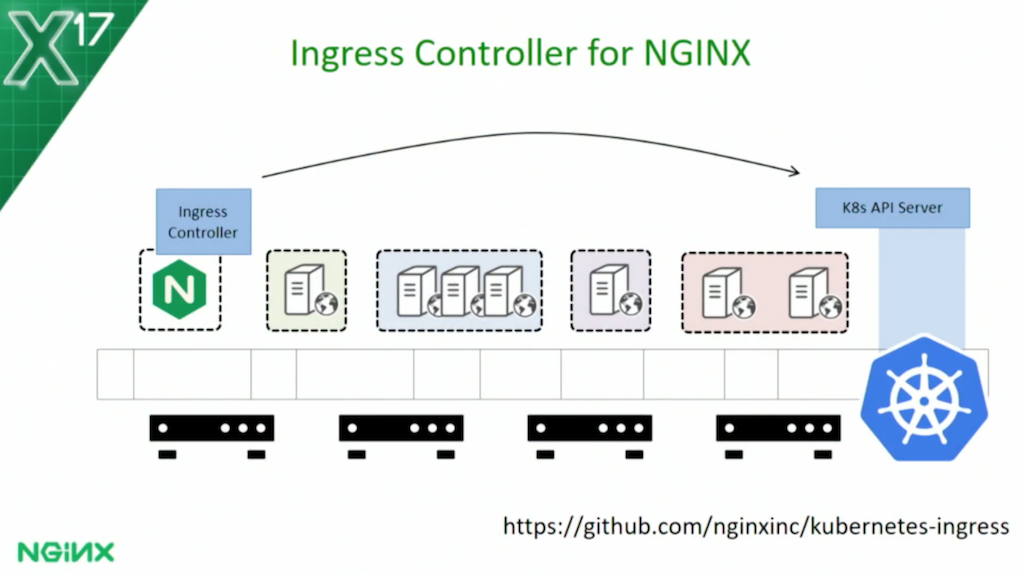

Another open source project that we shared with you yesterday – and I hope many of you got to the talk – was work around an Ingress controller solution for NGINX running on Kubernetes. Kubernetes is a container orchestration platform. It takes a set of physical or virtual servers, arranges them into a cluster, and then slices and dices the resources presented by those servers to create an environment where you can deploy pods or containers.

Deploying applications in containers is well and good, but containers have unpredictable IP addresses and ports. It’s difficult getting traffic in. The Kubernetes project includes the concept of an Ingress resource. A container can declare that it needs external connectivity. The Kubernetes infrastructure accumulates that data and makes it available to other services.

An Ingress controller is a piece of software, typically a daemon, that probes for Ingress resources and is responsible for configuring a load balancer to implement the appropriate load‑balancing policies. We’ve been working on an open source Ingress controller implementation that works out of the box with both NGINX and NGINX Plus.

We’re proud to say that this week, we’ve announced it as a fully supported, fully committed NGINX project.

As a user of NGINX open source, you can hit our GitHub repo. You can follow the instructions to build a container containing NGINX and our Ingress controller software, and deploy that within a Kubernetes environment. That gives you an enterprise‑ready, supported, and maintained solution from us to manage incoming traffic into your environment, no matter how you change and re‑architect your application.
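[Editor – To make the Ingress controller's job concrete, the sketch below shows the kind of NGINX configuration a controller might write after discovering an Ingress resource that routes a hostname and path to a service backed by two pods. It is illustrative only and is not the actual output of the NGINX Ingress controller; the hostname, upstream name, and pod addresses are hypothetical.]

```nginx
# Goes inside the http {} context of nginx.conf.

# One upstream per Kubernetes service; the controller rewrites the server
# list whenever pods are added, removed, or rescheduled.
upstream default-coffee-svc {
    server 10.244.1.5:8080;   # hypothetical pod IP and port
    server 10.244.2.7:8080;   # hypothetical pod IP and port
}

server {
    listen 80;
    # Hostname taken from the Ingress rule (hypothetical).
    server_name cafe.example.com;

    # Path taken from the Ingress rule, mapped to the service's upstream.
    location /coffee {
        proxy_pass http://default-coffee-svc;
    }
}
```

Because the controller regenerates this configuration as pod addresses change, clients keep using a stable hostname and path however the application behind them is rearranged.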

OpenShift is next. OpenShift router technology works in a similar fashion. You can use the Ingress controller solution directly on OpenShift if you expose the Kubernetes interfaces. But we’re committed to supporting a broad range of platforms, and we’ll be developing a similar approach for the OpenShift router.

What I’d like to do now is move on to what is one of the most exciting projects to come from the NGINX team in the last five years. We teased a little bit of information about it yesterday: NGINX Unit, the application server that speaks your language. The technology: next‑generation serving for cloud‑native applications.

People have said, as we’ve been sharing this concept, that Unit feels like a small, diminutive, almost insignificant name. Why is that? NGINX software is known for being small, lightweight, unassuming, and efficient, but also incredibly powerful. Unit is no different. It embodies those values.

Our hope is that the Unit open source project will form the building blocks for your future applications, allowing you to scale out in a distributed fashion and a multi‑service fashion, building rich, sophisticated, complex applications.