API lifecycle management begins with planning, designing, and defining APIs. This blog spotlights how easy the NGINX Controller API Management Module makes it to define APIs and publish them to NGINX Plus API gateways.

Many API management tools require you to create a separate definition of a given API for each deployment environment. With the NGINX Controller API Management Module, you define an API just once and publish it to as many environments as you want. This “create once, publish many” approach eliminates the errors that creep in when you maintain duplicate definitions, and it saves time and effort, especially if you have to define a lot of APIs.

The API Management Module provides a logical framework for defining APIs and the associated configuration. Let’s go through each logical entity.

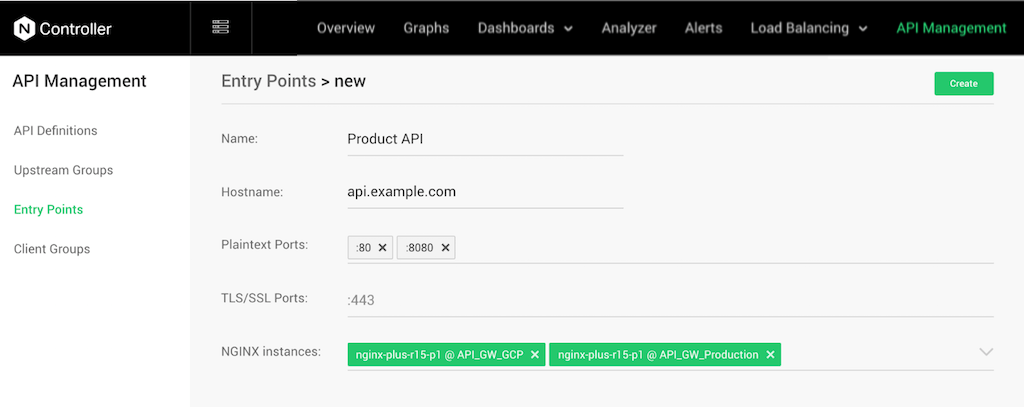

Defining the Entry Point

The entry point for an API (or set of APIs) is the first element of the URL that API consumers use to access it. Specifically, it’s a hostname or domain name, along with one or more port numbers.

It’s a best practice to make the entry point name descriptive of the information exposed by the API. The organization’s domain name is usually included.

As part of the entry point definition, you also specify one or more NGINX Plus instances that act as the API gateways for the API. They handle all API calls directed to the hostname.

The screenshot shows an entry point with hostname api.example.com and name Product API (the name is used when defining an environment). Two NGINX Plus instances, nginx-plus-r15-p1@API_GW_GCP and nginx-plus-r15-p1@API_GW_PRODUCTION, serve as the API gateways.
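To make the entry point concrete, here is a minimal Python sketch that models it as plain data. It is not NGINX Controller's API or object schema: the `EntryPoint` class, its fields, its `handles` method, and port 443 are hypothetical. Only the hostname, the name, and the gateway instance names come from the screenshot.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EntryPoint:
    """Hypothetical model of an entry point: a hostname, its ports, and gateways."""
    name: str                  # display name, referenced later by environments
    hostname: str              # first element of every API URL
    ports: tuple[int, ...]     # one or more listening ports (assumed values)
    gateways: tuple[str, ...]  # NGINX Plus instances that handle calls to hostname

    def __post_init__(self) -> None:
        if not self.ports:
            raise ValueError("an entry point needs at least one port")
        if not self.gateways:
            raise ValueError("an entry point needs at least one API gateway")

    def handles(self, host: str) -> bool:
        """True if a request's Host value is directed at this entry point."""
        # A Host value may carry a port suffix, e.g. "api.example.com:443".
        name, _, port = host.partition(":")
        return name.lower() == self.hostname.lower() and (
            not port or int(port) in self.ports
        )


product_api = EntryPoint(
    name="Product API",
    hostname="api.example.com",
    ports=(443,),  # hypothetical: the screenshot's port is not stated here
    gateways=(
        "nginx-plus-r15-p1@API_GW_GCP",
        "nginx-plus-r15-p1@API_GW_PRODUCTION",
    ),
)
```

Keeping the gateways on the entry point mirrors the post's point that whichever environment uses this entry point inherits its API gateways as well.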

Defining the Upstream Group

An upstream group is the set of backend servers to which the NGINX Plus API gateways route API calls that consumers send to the entry point. You specify whether traffic between the API gateways and the backend servers is protected by HTTPS, and set the appropriate port number on the backend servers.

The screenshot shows an upstream group named Product Info that contains two upstream servers using HTTPS (as with the entry point, the name is used when defining an environment). As specified by the environment, all calls to api.example.com (the entry point defined above) are routed to these upstream servers.
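The following Python sketch models an upstream group and picks a backend for each call in round-robin order. Round robin is NGINX's default load-balancing method, but this code only illustrates the idea and is not how NGINX Plus implements it. The `UpstreamGroup` class, the backend addresses, and port 443 are hypothetical.

```python
from dataclasses import dataclass, field
from itertools import cycle
from typing import Iterator


@dataclass
class UpstreamGroup:
    """Hypothetical model of an upstream group: the backends behind the gateways."""
    name: str
    servers: list[str]      # backend hostnames or IP addresses (assumed values)
    use_https: bool = True  # whether gateway-to-backend traffic uses HTTPS
    port: int = 443         # port the backend servers listen on (assumed)
    _rr: Iterator[str] = field(init=False, repr=False, compare=False)

    def __post_init__(self) -> None:
        if not self.servers:
            raise ValueError("an upstream group needs at least one server")
        # Cycle through the servers forever, in list order.
        self._rr = cycle(self.servers)

    def next_backend_url(self) -> str:
        """Pick the next server in round-robin order and return its base URL."""
        scheme = "https" if self.use_https else "http"
        return f"{scheme}://{next(self._rr)}:{self.port}"


product_info = UpstreamGroup(
    name="Product Info",
    servers=["10.0.0.11", "10.0.0.12"],  # hypothetical backend addresses
)
```

Successive calls to `product_info.next_backend_url()` alternate between the two servers, so each receives roughly half of the API calls.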

Creating the API Definition

The API definition specifies the full URL path to the APIs (the elements that come after the entry point) and the resources (information types) that the API exposes.

The base path is the set of elements that follow the entry point (the first or root element) in the URL and are shared by all APIs accessed via the entry point. The base path must start with a forward slash (/).

The final element in an API URL is a resource, a collection of information exposed by an API. There can be multiple resources, each corresponding to a file, a document, an image, or the output of a function call that processes complex business logic. As with entry points, it is a best practice to make resource names descriptive of the information they expose.

The screenshot shows two resources – inventory and pricing – that API consumers can access at api.example.com/api/v1/product.
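To show how the pieces combine, here is a small Python sketch that assembles a full API URL from the entry point, the base path, and a resource, and enforces the rule that the base path starts with a slash. It assumes each resource is appended to the base path as one more path segment and that consumers use HTTPS; the `api_url` function is hypothetical.

```python
def api_url(hostname: str, base_path: str, resource: str, scheme: str = "https") -> str:
    """Compose entry point + base path + resource into a full API URL."""
    if not base_path.startswith("/"):
        raise ValueError(f"base path must start with '/': {base_path!r}")
    # Join with exactly one slash between the base path and the resource.
    path = base_path.rstrip("/") + "/" + resource.strip("/")
    return f"{scheme}://{hostname}{path}"


for resource in ("inventory", "pricing"):
    print(api_url("api.example.com", "/api/v1/product", resource))
```

Under these assumptions, the loop prints `https://api.example.com/api/v1/product/inventory` and `https://api.example.com/api/v1/product/pricing`.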

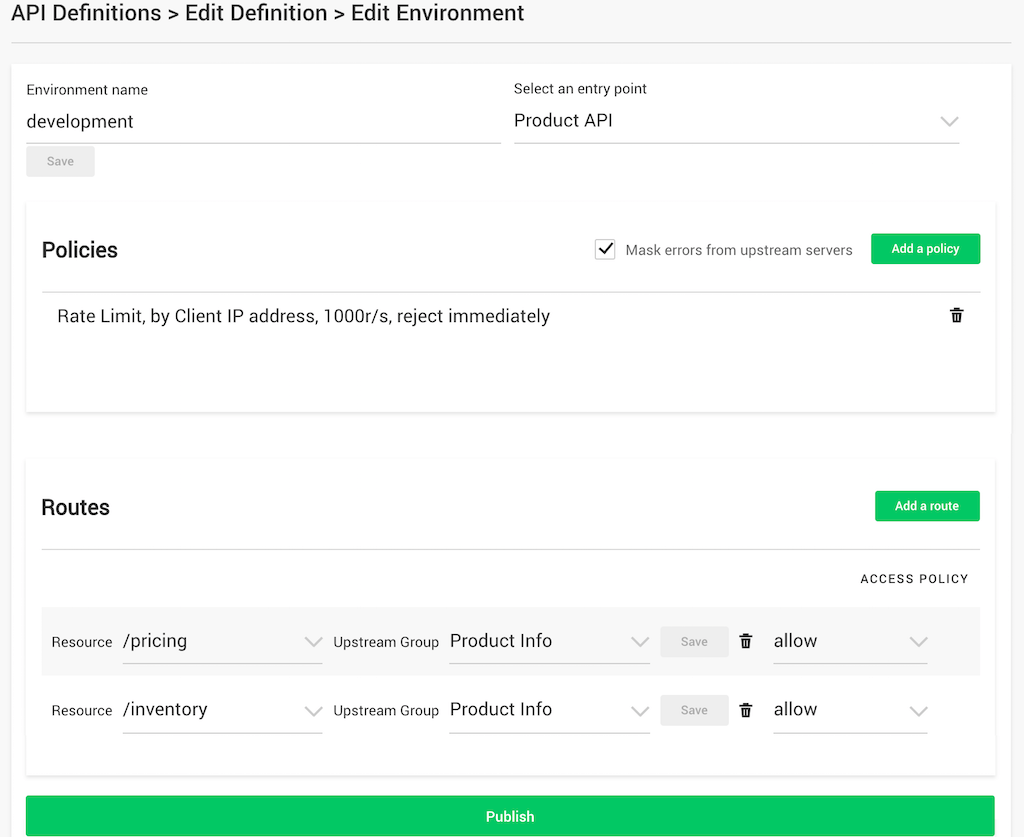

Defining the Deployment Environment

The final component in an API definition is the deployment environment, such as “development” or “production”. An environment is where you associate an entry point (and by implication its API gateways) with the API definition. You create environment‑specific policies for rate limiting, authentication, and authorization. You also associate each resource with an upstream group, and create fine‑grained conditional access control policies on a per‑resource basis (based on request headers or JWT claims). You then publish this configuration to the NGINX Plus API gateway or gateways.
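The resource‑to‑upstream association can be sketched in Python as a lookup that takes a request's host and path and returns the upstream group that serves it. This covers only routing, not the policies, and is not NGINX Controller's data model: the `Environment` class, its fields, and the `route` method are hypothetical, with values taken from the development environment in the screenshot.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Environment:
    """Hypothetical model of a deployment environment for one API definition."""
    name: str          # e.g. "development" or "production"
    entry_point: str   # hostname of the associated entry point
    base_path: str     # shared path prefix from the API definition
    # Maps each resource name to the upstream group that serves it.
    resource_upstreams: dict[str, str] = field(default_factory=dict)

    def route(self, host: str, path: str) -> Optional[str]:
        """Return the upstream group for a request, or None if nothing matches."""
        if host.lower() != self.entry_point.lower():
            return None
        path = path.split("?", 1)[0]  # ignore any query string
        prefix = self.base_path.rstrip("/") + "/"
        if not path.startswith(prefix):
            return None
        # The first segment after the base path names the resource.
        resource = path[len(prefix):].split("/", 1)[0]
        return self.resource_upstreams.get(resource)


development = Environment(
    name="development",
    entry_point="api.example.com",
    base_path="/api/v1/product",
    resource_upstreams={"inventory": "Product Info", "pricing": "Product Info"},
)
```

A request for `/api/v1/product/pricing` on `api.example.com` resolves to `Product Info`, while an unknown resource or a different host resolves to `None`, which a gateway would typically answer with an error.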

The screenshot shows an environment called development for the Product API entry point. A rate‑limiting policy has been defined to accept up to 1000 API calls per second from each client. The servers in the Product Info upstream group handle all requests to the pricing and inventory resources.
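To illustrate what “up to 1000 API calls per second from each client” means in practice, here is a per‑client token bucket in Python. A token bucket is one common rate‑limiting algorithm and is not necessarily what NGINX Plus uses (the NGINX `limit_req` module documentation describes a leaky bucket method). The class name, the `burst` parameter, and identifying clients by an opaque string ID are assumptions for this sketch.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class _Bucket:
    tokens: float  # calls the client may still make right now
    last: float    # timestamp (seconds) of the last refill


class PerClientRateLimiter:
    """Token-bucket limiter keyed by client ID (illustrative sketch)."""

    def __init__(self, rate_per_sec: float, burst: Optional[float] = None) -> None:
        self.rate = rate_per_sec
        # By default a client may use one full second's allowance at once.
        self.capacity = burst if burst is not None else rate_per_sec
        self._buckets: dict[str, _Bucket] = {}

    def allow(self, client_id: str, now: float) -> bool:
        """Return True if the call is accepted, False if it should be rejected."""
        bucket = self._buckets.get(client_id)
        if bucket is None:
            # A new client starts with a full bucket.
            bucket = self._buckets[client_id] = _Bucket(self.capacity, now)
        # Refill in proportion to elapsed time, never beyond capacity.
        elapsed = max(0.0, now - bucket.last)
        bucket.tokens = min(self.capacity, bucket.tokens + elapsed * self.rate)
        bucket.last = now
        if bucket.tokens >= 1:
            bucket.tokens -= 1
            return True
        return False


limiter = PerClientRateLimiter(rate_per_sec=1000)
accepted = sum(limiter.allow("client-a", now=0.0) for _ in range(1200))
print(accepted)  # 1000: calls beyond the allowance in the same instant are rejected
```

Because each client has its own bucket, one busy client exhausting its allowance does not affect calls from other clients.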

Define Once, Publish to Many Environments

As previously mentioned, many API management tools require you to re‑create the definition of an API for each deployment environment. With the NGINX Controller API Management Module, you define the API just once and publish it to as many environments as you want. You still have the flexibility to set different rate limiting or authorization policies for different environments. For instance, you might set a rate limit for a production environment but not for a test environment.
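The “define once, publish many” idea can be sketched in Python as one immutable API definition combined with a list of per‑environment policies. The `ApiDefinition`, `EnvironmentPolicy`, and `publish` names, the `test-api.example.com` hostname, and the production rate limit of 1000 calls per second are hypothetical; the point is only that every environment reuses the same definition while its policies differ.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ApiDefinition:
    """Defined once: base path and resources shared by every environment."""
    name: str
    base_path: str
    resources: tuple[str, ...]


@dataclass(frozen=True)
class EnvironmentPolicy:
    """Per-environment settings layered on top of the shared definition."""
    environment: str
    entry_point: str
    rate_limit_per_sec: Optional[int] = None  # None means no rate limit


def publish(api: ApiDefinition, envs: list[EnvironmentPolicy]) -> dict[str, dict]:
    """Combine one definition with each environment's policies (hypothetical)."""
    published: dict[str, dict] = {}
    for env in envs:
        if env.environment in published:
            raise ValueError(f"duplicate environment: {env.environment}")
        published[env.environment] = {
            "entry_point": env.entry_point,
            # Every environment reuses the same definition object unchanged.
            "api": api,
            "rate_limit_per_sec": env.rate_limit_per_sec,
        }
    return published


product = ApiDefinition("products", "/api/v1/product", ("inventory", "pricing"))
configs = publish(
    product,
    [
        EnvironmentPolicy("test", "test-api.example.com"),
        EnvironmentPolicy("production", "api.example.com", rate_limit_per_sec=1000),
    ],
)
```

A change to the definition (say, a new resource) is made in one place and reaches every environment the next time it is published, while the test environment stays unthrottled and production keeps its rate limit.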

Rate limiting and authentication/authorization policies are crucial to securing your APIs and backend services and components. I will cover API security in detail in my next blog. In the meantime, request a free 30‑day trial of NGINX Controller.