You have to secure the components

A shift is happening in the tech industry: monolithic web applications are being decomposed into microservices, and new web applications are being developed using microservices from scratch.

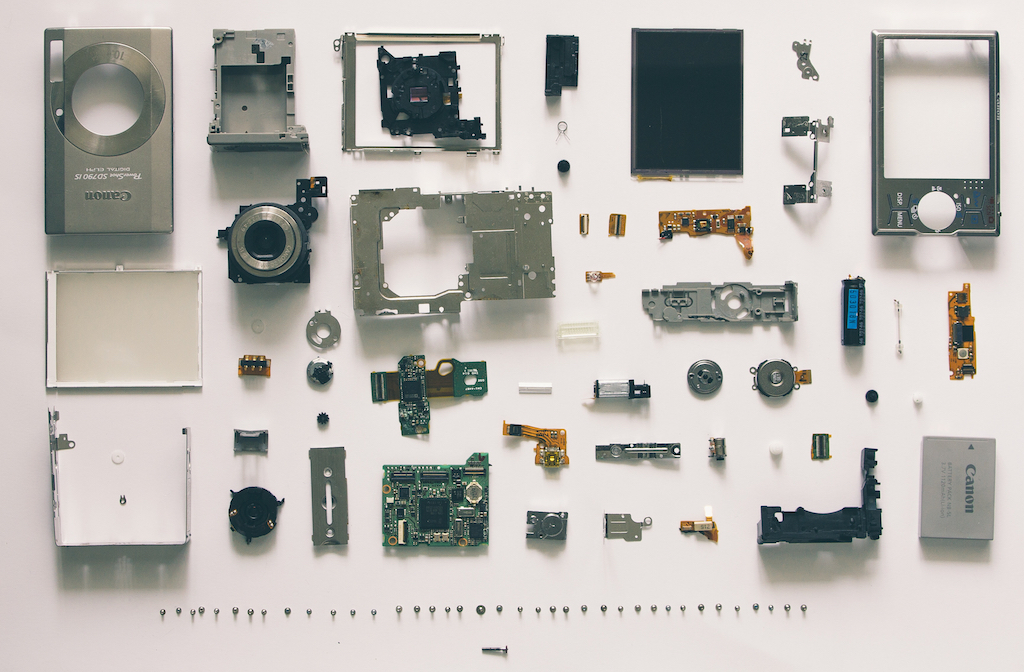

By breaking up applications into small, decoupled services, developers are able to independently change components of their application, no matter how extensive it is overall. This architectural approach can have a ripple effect that drastically shrinks total time to deliver changes, reducing risk at the same time.

Microservices pull apart once‑dependent systems and allow teams to move faster at every stage, from development to runtime operations. The switch to microservices affects development, performance, monitoring, and – as we will see – security.

Generally, the move to microservices isn’t done in a vacuum and is paired with the cultural shifts of agile and DevOps adoption. These cultural movements, which have filtered through many organizations, attempt to break down silos that previously impaired delivery.

The move to microservices spells good things for the web, as the majority of microservices are being delivered over HTTP/HTTPS. While there may be more suitable protocols than HTTP, there is no denying that as an industry we know how to optimize and deliver using this protocol better than any other. When it comes to delivering web services over HTTP, NGINX is often the web server of choice, and it’s easy to see why.

NGINX for Microservices

It’s easy to love NGINX for microservices. One of the reasons (much loved by web operations engineers) is its straightforward configuration. Microservices are no different: with a few simple configuration changes, you can quickly get NGINX up and running as the web server for your API gateway.
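As a rough illustration of the core job of an API gateway, the Python sketch below routes each request path to a backend service by the longest matching prefix, loosely analogous to how NGINX picks the longest matching prefix `location`. It is not NGINX configuration, and the route table, service names, and ports are hypothetical.

```python
from __future__ import annotations

# Hypothetical route table: URL path prefix -> upstream service address.
ROUTES = {
    "/api/users/": "http://users-service:8080",
    "/api/orders/": "http://orders-service:8080",
    "/api/": "http://legacy-monolith:8080",
}


def pick_upstream(path: str, routes: dict[str, str]) -> str | None:
    """Return the upstream whose route prefix is the longest match for path."""
    matches = [prefix for prefix in routes if path.startswith(prefix)]
    if not matches:
        return None  # no route matched; a gateway would typically return 404
    return routes[max(matches, key=len)]


if __name__ == "__main__":
    for path in ["/api/users/42", "/api/orders/7/items", "/api/health", "/static/logo.png"]:
        print(path, "->", pick_upstream(path, ROUTES))
```

Longest-prefix matching lets a catch-all such as `/api/` keep sending unmigrated endpoints to the monolith while more specific prefixes are peeled off into new services one at a time.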

Another reason NGINX is a preferred web server for microservice implementations and API gateways is its speed. NGINX is fast and performs well under web‑scale load. It is asynchronous by design: rather than dedicating a thread to each connection, each NGINX worker process handles many connections in a nonblocking, event‑driven loop, so performance holds up as traffic increases.
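The event-driven idea can be sketched with Python's `asyncio`: a single thread interleaves many requests that are each waiting on I/O instead of blocking while one of them waits. This is a conceptual sketch only, not how NGINX is implemented (NGINX is written in C and relies on OS event mechanisms such as epoll and kqueue), and the simulated backend latency is an assumption.

```python
import asyncio
import time


async def handle_request(request_id: int, backend_latency: float) -> int:
    # Simulate waiting on a slow backend. While this coroutine is suspended,
    # the event loop is free to make progress on other requests.
    await asyncio.sleep(backend_latency)
    return request_id


async def serve(num_requests: int, backend_latency: float) -> tuple[int, float]:
    """Handle num_requests concurrently on one thread; return (count, seconds)."""
    start = time.perf_counter()
    results = await asyncio.gather(
        *(handle_request(i, backend_latency) for i in range(num_requests))
    )
    return len(results), time.perf_counter() - start


if __name__ == "__main__":
    count, elapsed = asyncio.run(serve(num_requests=1000, backend_latency=0.1))
    # Handled one after another, 1,000 requests x 0.1 s would take about 100 s.
    print(f"handled {count} requests in {elapsed:.2f} s on a single thread")
```

Because waiting costs almost nothing, total time tracks the slowest request rather than the sum of all of them.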

Over a third of the web uses NGINX (34.6% of all sites, according to August 2017 estimates), and among just the biggest sites its share rises to 58.4%. This high and increasing adoption shows how valuable NGINX has become to web application development.

Lastly, operations engineers generally appreciate the flexibility of NGINX to operate as a web server, a reverse proxy, or a load balancer. This means the same software can fill different roles throughout an architecture. NGINX also delivers rock‑solid HTTPS performance, but securing this new landscape requires more than just a secure protocol.
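When NGINX acts as a load balancer, its default method is round-robin. A minimal Python sketch of that selection logic follows; the upstream addresses are hypothetical, and it omits the weights, health checks, and failover a real load balancer adds.

```python
from __future__ import annotations

import itertools
from collections import Counter


class RoundRobinBalancer:
    """Hand out upstream servers in a fixed rotation, one per request."""

    def __init__(self, upstreams: list[str]) -> None:
        if not upstreams:
            raise ValueError("at least one upstream is required")
        self._cycle = itertools.cycle(upstreams)

    def next_upstream(self) -> str:
        return next(self._cycle)


if __name__ == "__main__":
    # Hypothetical instances of a single microservice.
    balancer = RoundRobinBalancer(["10.0.0.1:8080", "10.0.0.2:8080", "10.0.0.3:8080"])
    picks = [balancer.next_upstream() for _ in range(9)]
    print(Counter(picks))  # each instance receives 3 of the 9 requests
```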

Security Concerns for Microservices

On the one hand, microservices can improve security by the very nature of the architecture pattern. By decoupling services, you not only add resiliency but also create new boundaries between portions of the system. Each API can have its own built‑in throttling and limits, which can be used to detect error conditions or attempts to overwhelm or abuse the system.
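Per-API throttling of this kind is often built on a token bucket. The Python sketch below keeps a separate bucket per endpoint; the endpoints, rates, and burst sizes are hypothetical, and the clock is injectable so the limiter can be exercised deterministically.

```python
from __future__ import annotations

import time
from typing import Callable


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Add the tokens earned since the last call, capped at the bucket size.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # over the limit: reject (e.g., HTTP 429) and log it


# Hypothetical per-API limits: each service boundary gets its own bucket.
limits = {
    "/api/login": TokenBucket(rate=1, capacity=5),
    "/api/search": TokenBucket(rate=50, capacity=100),
}
```

Rejections are useful signals in their own right: a sudden run of them on one endpoint, such as `/api/login`, may point to an attempt to overwhelm or abuse that service.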

While microservices can be more secure, the move to microservices can also create new attack vectors. What were once internal calls within an application – inside the monolith – are now delivered across the network, and sometimes across the Internet, to other services, and each of those calls adds to the attack surface.

In this context, application security is one of the largest gaps in microservices. Because microservices are delivered over HTTP, the concerns of traditional application security translate directly to them: data injection, cross‑site scripting, privilege escalation, and command execution attacks are all still relevant. Additionally, if the microservices don’t have sufficient monitoring or built‑in defenses, business logic attacks could go undetected.
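A microservice that builds SQL from a request parameter carries exactly the same injection risk as a monolith. The Python sketch below uses an in-memory SQLite database with a hypothetical `users` table to contrast string-built SQL with a parameterized query.

```python
import sqlite3


def setup() -> sqlite3.Connection:
    # Hypothetical schema and data, for illustration only.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, email TEXT)")
    conn.executemany("INSERT INTO users VALUES (?, ?)",
                     [("alice", "alice@example.com"), ("bob", "bob@example.com")])
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # VULNERABLE: the request parameter is pasted into the SQL text.
    return conn.execute(f"SELECT email FROM users WHERE name = '{name}'").fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Parameterized query: the driver treats `name` strictly as data.
    return conn.execute("SELECT email FROM users WHERE name = ?", (name,)).fetchall()


if __name__ == "__main__":
    conn = setup()
    payload = "x' OR '1'='1"
    print(find_user_unsafe(conn, payload))  # leaks every row
    print(find_user_safe(conn, payload))    # []
```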

Signal Sciences with NGINX Defends Microservices

Signal Sciences provides a web protection platform that runs as a module in all the major web servers, most prominently NGINX. Signal Sciences takes a unique approach to web application security. The platform identifies common web application attack vectors, such as SQL injection (SQLi), cross‑site scripting (XSS), and other OWASP Top Ten attacks, but it doesn’t stop there. Using Signal Sciences custom signals, users can detect business logic flaws and user account takeovers, or monitor any application flow they choose. Whatever you need to watch more closely, Signal Sciences makes it easy to do so.
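To make the idea of a business-logic signal concrete, the Python sketch below flags a client IP that fails to log in repeatedly within a short time window, a common early sign of account-takeover attempts. It is a hypothetical illustration only: the `/login` path, the 401 status, the threshold, and the window are assumptions, and it does not represent the Signal Sciences API or how its custom signals are defined.

```python
from __future__ import annotations

from collections import defaultdict, deque


class FailedLoginSignal:
    """Flag an IP with `threshold` failed logins within `window` seconds.

    Hypothetical rule for illustration; not the Signal Sciences API.
    """

    def __init__(self, threshold: int = 5, window: float = 60.0) -> None:
        self.threshold = threshold
        self.window = window
        self.failures: dict[str, deque[float]] = defaultdict(deque)

    def record(self, ip: str, path: str, status: int, timestamp: float) -> bool:
        """Record one response; return True if this IP should be flagged."""
        if path != "/login" or status != 401:
            return False  # only failed logins count toward this signal
        events = self.failures[ip]
        events.append(timestamp)
        # Forget failures that have fallen out of the time window.
        while events and events[0] <= timestamp - self.window:
            events.popleft()
        return len(events) >= self.threshold
```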

Signal Sciences spans the breadth of your applications to pinpoint application logic flaws and problems based on your unique business logic. One of our customers, Jon Oberheide, co‑founder and CTO of Duo Security, says it best: “The Signal Sciences approach gives us situational awareness about where and how our applications are attacked so that we can best protect ourselves and our customers.”

Like NGINX, Signal Sciences is extremely lightweight and fast. Some of the largest web‑scale companies trust Signal Sciences and run the web protection platform on their production traffic. Currently, Signal Sciences evaluates two to three billion requests per day that flow through NGINX and the Signal Sciences NGINX module.
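For a sense of scale, spreading that daily volume evenly over the 86,400 seconds in a day gives the average request rate (real traffic peaks above the average):

```latex
\frac{2 \times 10^{9}\ \text{requests}}{86\,400\ \text{s}} \approx 23\,000\ \text{requests/s},
\qquad
\frac{3 \times 10^{9}\ \text{requests}}{86\,400\ \text{s}} \approx 35\,000\ \text{requests/s}
```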

This includes web and API traffic, and many of our customers are using a microservices architecture pattern. Customers include household names such as Adobe, Etsy, and Vimeo. If you are delivering microservices via NGINX, and security and performance are important to you, then contact us to explore how Signal Sciences can be the right fit for you.