“Sensitive data exposure” by APIs is #3 on the OWASP Top 10 Application Security Risks list, and there’s no shortage of real‑world examples. In July 2018, Salesforce revealed that an update to its Marketing Cloud service introduced an API bug that might have caused API calls to retrieve or write data from one customer’s account to another’s. At Venmo, a popular payment application owned by PayPal, a poorly secured public API allowed a massive data leak, exposing more than 207 million transactions. According to Gartner, by 2022 API abuses will be the leading attack vector for data breaches within enterprise Web applications.

Security is a key element of API lifecycle management. Given that weak API security can leave you exposed to critical vulnerabilities, API security must be built into the API architecture from the beginning – it cannot be an afterthought.

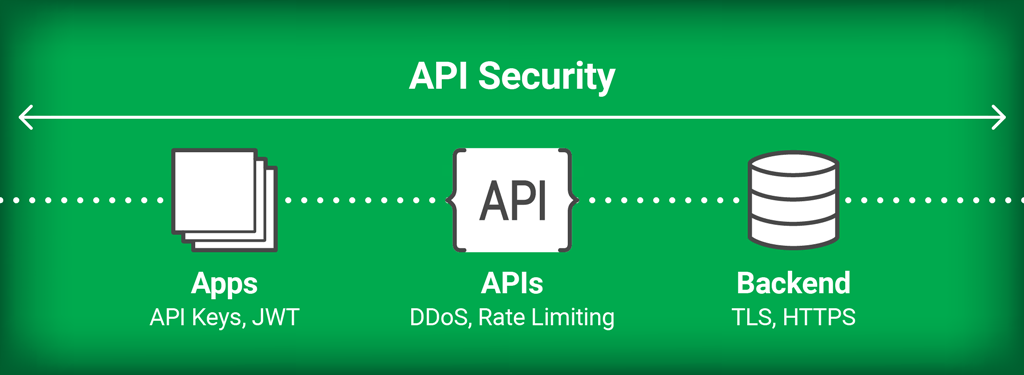

Securing the API environment involves every API touchpoint – authenticating and authorizing API clients (third‑party applications and developers), rate limiting API calls to mitigate distributed denial-of-service (DDoS) attacks, and protecting the backend applications that process the API calls. Let’s take a look at how to secure each touchpoint using the NGINX Controller API Management Module.

Authenticating and Authorizing Your API Clients

How does the API Management Module reliably validate the identity of API clients (third‑party applications and developers)? How does it determine which privileges and resources are granted to each caller? The API Management Module currently supports two mechanisms for determining client identity and scope of access – API keys and JSON Web Tokens (JWTs).

Using API Keys for Authentication and Authorization

An API key is a long string of characters that uniquely identifies an API client. The client presents the key to identify itself when it makes a request. By default, the API Management Module generates the key when you create a client, but you can also import existing keys.
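To make the idea of "a long string of characters" concrete, here is a minimal Python sketch of generating and comparing keys. The helper names `generate_api_key` and `keys_match` are hypothetical and only for illustration; this is not how the API Management Module generates or checks keys internally.

```python
import hmac
import secrets


def generate_api_key(num_bytes: int = 32) -> str:
    """Return a random, URL-safe key (hypothetical helper, not the module's generator)."""
    return secrets.token_urlsafe(num_bytes)


def keys_match(presented: str, stored: str) -> bool:
    """Compare two keys in constant time so timing does not reveal partial matches."""
    return hmac.compare_digest(presented.encode(), stored.encode())
```

Using a cryptographically secure source such as `secrets` matters here, because a key that can be guessed offers no protection.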

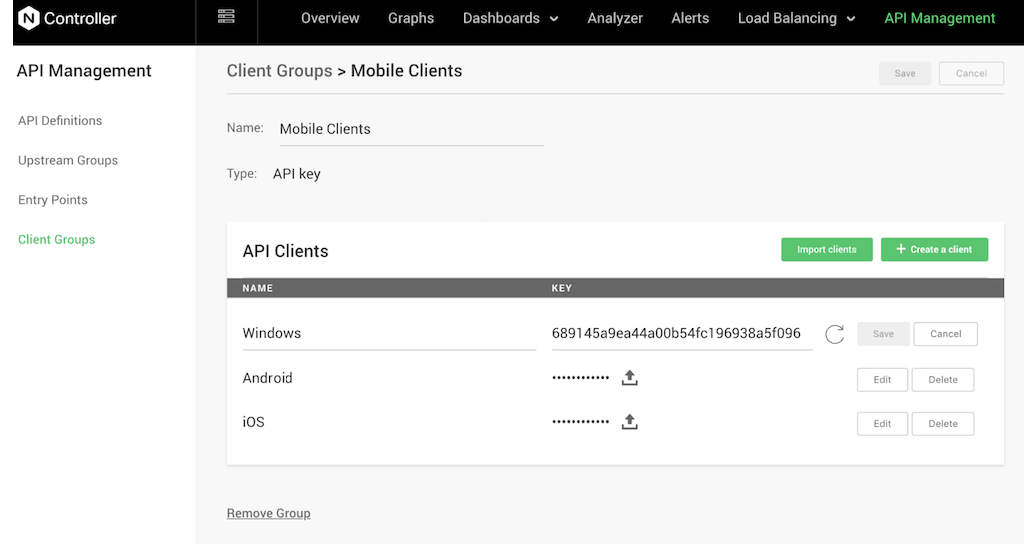

The screenshot shows a client group called Mobile Clients with API keys for Windows, Android and iOS mobile clients.

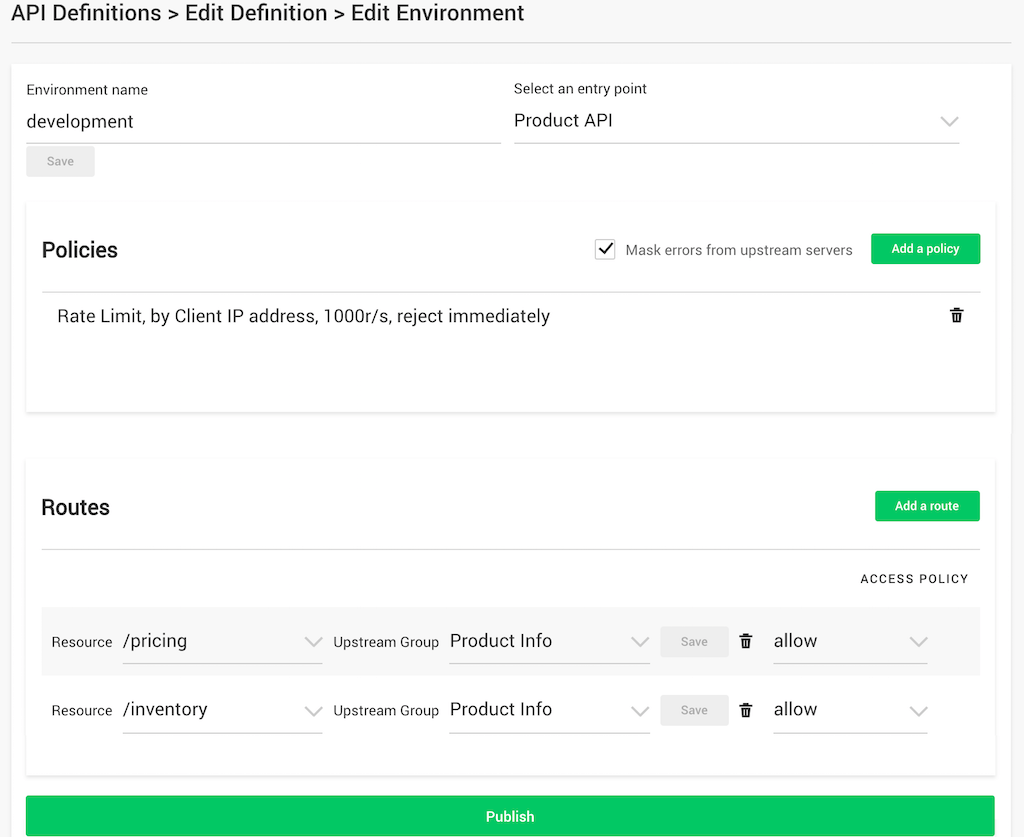

You then need to specify that an API can be accessed using the API key, by creating a policy in a deployment environment associated with the API. In our previous post on defining APIs with the API Management Module, we created a deployment environment called development for an API with entry point Product API.

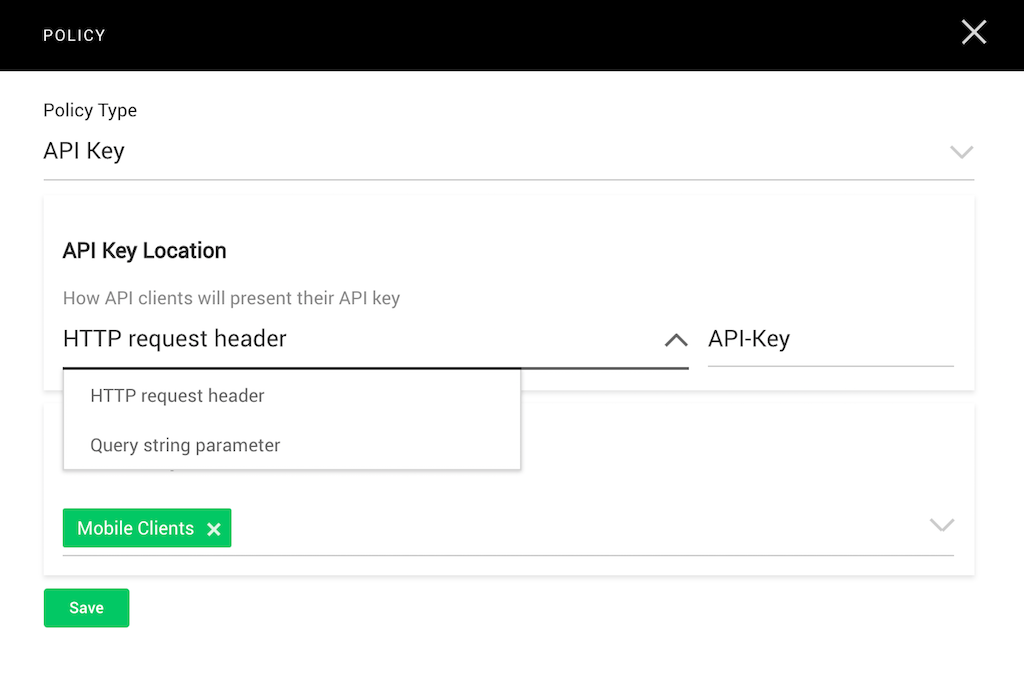

As shown in the screenshot, we previously created a rate‑limiting policy for the deployment environment, and now we add another policy to grant access to API clients that present an API key. Clients can present the key either in a specified HTTP request header or as a parameter in the query string.

The policy in the screenshot specifies that when members of the Mobile Clients client group call the API, the API Management Module extracts the API key from the HTTP request header called API-Key.

This type of policy applies to all resources (routes) defined for the API. You can also create policies for individual resources, for example either /pricing or /inventory in our sample environment.

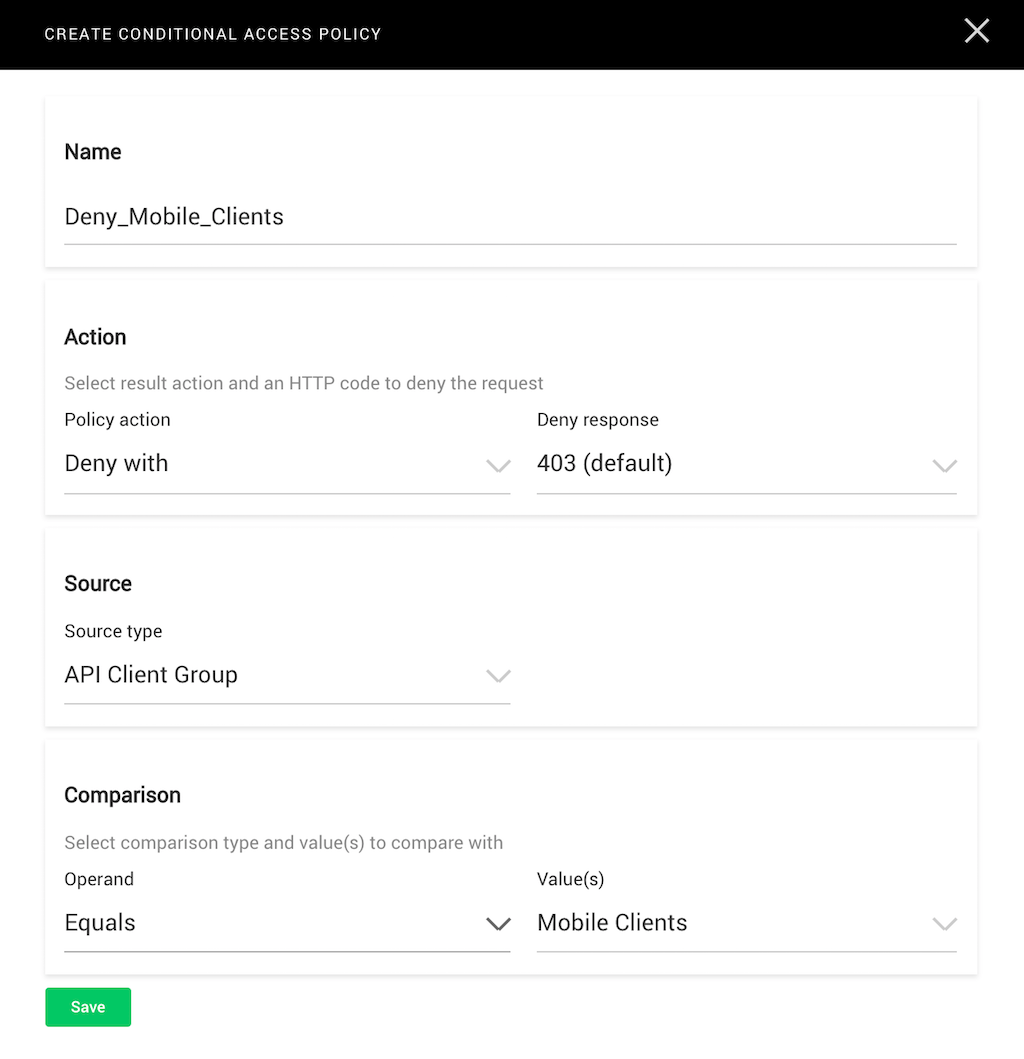

The policy in the screenshot denies access to members of the Mobile Clients client group. When they request information from the resource associated with this policy (say /pricing), they instead get HTTP error code 403 Forbidden.
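The logic behind these two policies can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the module's implementation: the key values, the `authorize` function, and the choice of 401 for a missing or unknown key are all hypothetical. Only the `API-Key` header name, the Mobile Clients group, the /pricing resource, and the 403 response come from the example above.

```python
from typing import Mapping, Optional

# Hypothetical data: these key values are made up for illustration.
CLIENT_GROUPS = {"Mobile Clients": {"key-android", "key-ios", "key-windows"}}

# Environment-wide policy: which header carries the key and which groups may call the API.
API_POLICY = {"header": "API-Key", "allowed_groups": {"Mobile Clients"}}

# Resource-specific policy: groups that are denied access to an individual route.
RESOURCE_DENY = {"/pricing": {"Mobile Clients"}}


def group_for_key(key: Optional[str]) -> Optional[str]:
    """Return the client group that owns the key, or None if the key is unknown."""
    for group, keys in CLIENT_GROUPS.items():
        if key in keys:
            return group
    return None


def authorize(path: str, headers: Mapping[str, str]) -> int:
    """Return the HTTP status a gateway might send for this request."""
    group = group_for_key(headers.get(API_POLICY["header"]))
    if group is None:
        return 401  # assumption: a missing or unknown key is treated as unauthenticated
    if group not in API_POLICY["allowed_groups"]:
        return 403
    if group in RESOURCE_DENY.get(path, set()):
        return 403  # the resource-level policy denies this group
    return 200
```

With this data, a request for /inventory that carries a known key is allowed, while the same client requesting /pricing receives 403.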

Using JWTs for Authentication and Authorization

JSON Web Tokens (JWTs, pronounced “jots”) are a more advanced means of exchanging identity information. The JWT specification has been an important underpinning of OpenID Connect, providing a single sign‑on token for the OAuth 2.0 ecosystem. A JWT carries a set of claims, which are pieces of information about the client (such as having administrative privilege) or about the JWT itself (such as an expiration date). When the client presents a JWT with its request, the API gateway validates the JWT and verifies that the claims in it match the access policy you have set for the resource the client is requesting.

As an example, the JWT presented by a client app might include a claim authorizing access to only one specific resource. If the client app attempts to access any other resources, HTTP error code 403 Forbidden is returned.
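The following Python sketch shows roughly what validating a JWT and checking a resource claim involves, using only the standard library. It assumes an HS256 (shared‑secret) signature and a hypothetical claim named `resource`; the function names and the 401 status for an invalid token are likewise assumptions, and a production gateway would rely on a vetted JWT implementation rather than code like this.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode()


def b64url_decode(text: str) -> bytes:
    # JWTs strip base64 padding, so restore it before decoding.
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_jwt(claims: dict, secret: bytes) -> str:
    """Build an HS256-signed token (used here only to create test input)."""
    header = b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = b64url_encode(json.dumps(claims).encode())
    sig = hmac.new(secret, f"{header}.{payload}".encode(), hashlib.sha256).digest()
    return f"{header}.{payload}.{b64url_encode(sig)}"


def verify_jwt(token: str, secret: bytes, now: Optional[float] = None) -> Optional[dict]:
    """Return the claims if the signature is valid and the token is unexpired, else None."""
    try:
        header_b64, payload_b64, sig_b64 = token.split(".")
        header = json.loads(b64url_decode(header_b64))
        if not isinstance(header, dict) or header.get("alg") != "HS256":
            return None
        expected = hmac.new(
            secret, f"{header_b64}.{payload_b64}".encode(), hashlib.sha256
        ).digest()
        if not hmac.compare_digest(expected, b64url_decode(sig_b64)):
            return None
        claims = json.loads(b64url_decode(payload_b64))
    except (ValueError, TypeError):
        return None  # malformed token
    if not isinstance(claims, dict):
        return None
    exp = claims.get("exp")
    current = time.time() if now is None else now
    if isinstance(exp, (int, float)) and current >= exp:
        return None  # token has expired
    return claims


def authorize_request(token: str, path: str, secret: bytes,
                      now: Optional[float] = None) -> int:
    """Allow the request only if the (hypothetical) resource claim matches the path."""
    claims = verify_jwt(token, secret, now)
    if claims is None:
        return 401  # assumption: an invalid token is treated as unauthenticated
    return 200 if claims.get("resource") == path else 403
```

Because everything needed for the decision is inside the signed token, the check involves no lookup against a central store.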

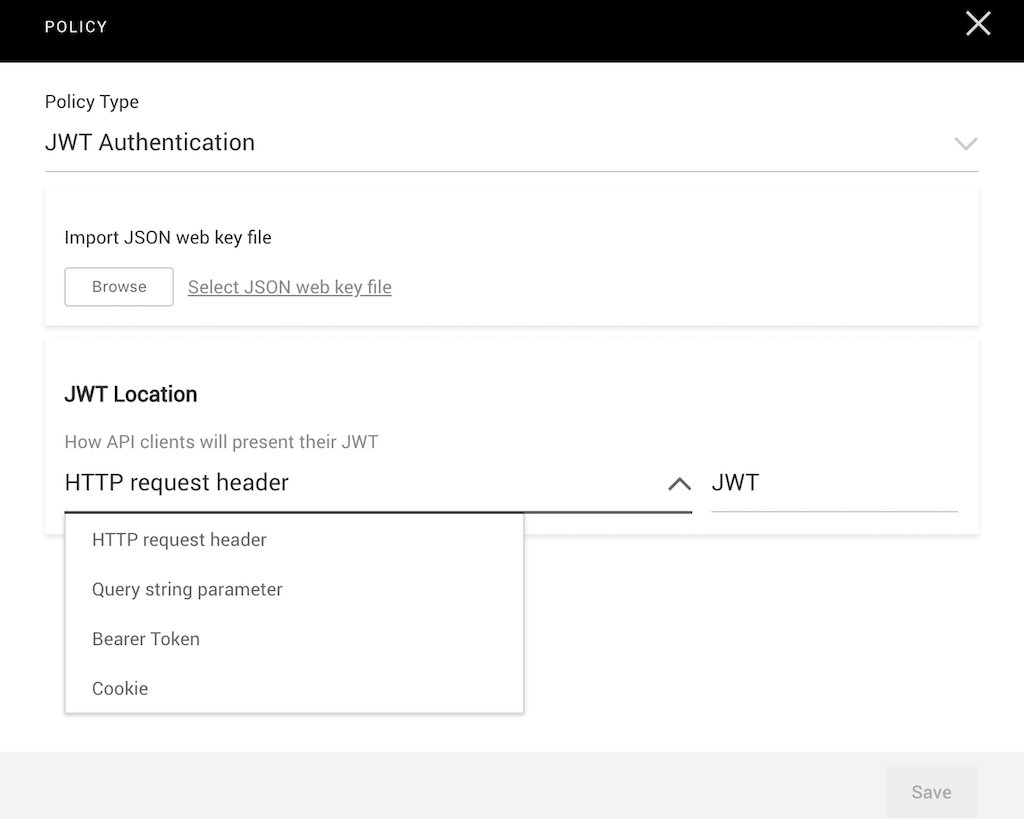

As with API keys, you can create policies that grant access to all resources associated with an API deployment environment, or only to specific resources. When defining an all‑resource policy, you specify how the client needs to present its JWT: in an HTTP request header, query string parameter, bearer token, or cookie.

The screenshot shows a JWT authentication policy which specifies that the API Management Module extracts the client’s JWT from the HTTP request header called JWT.

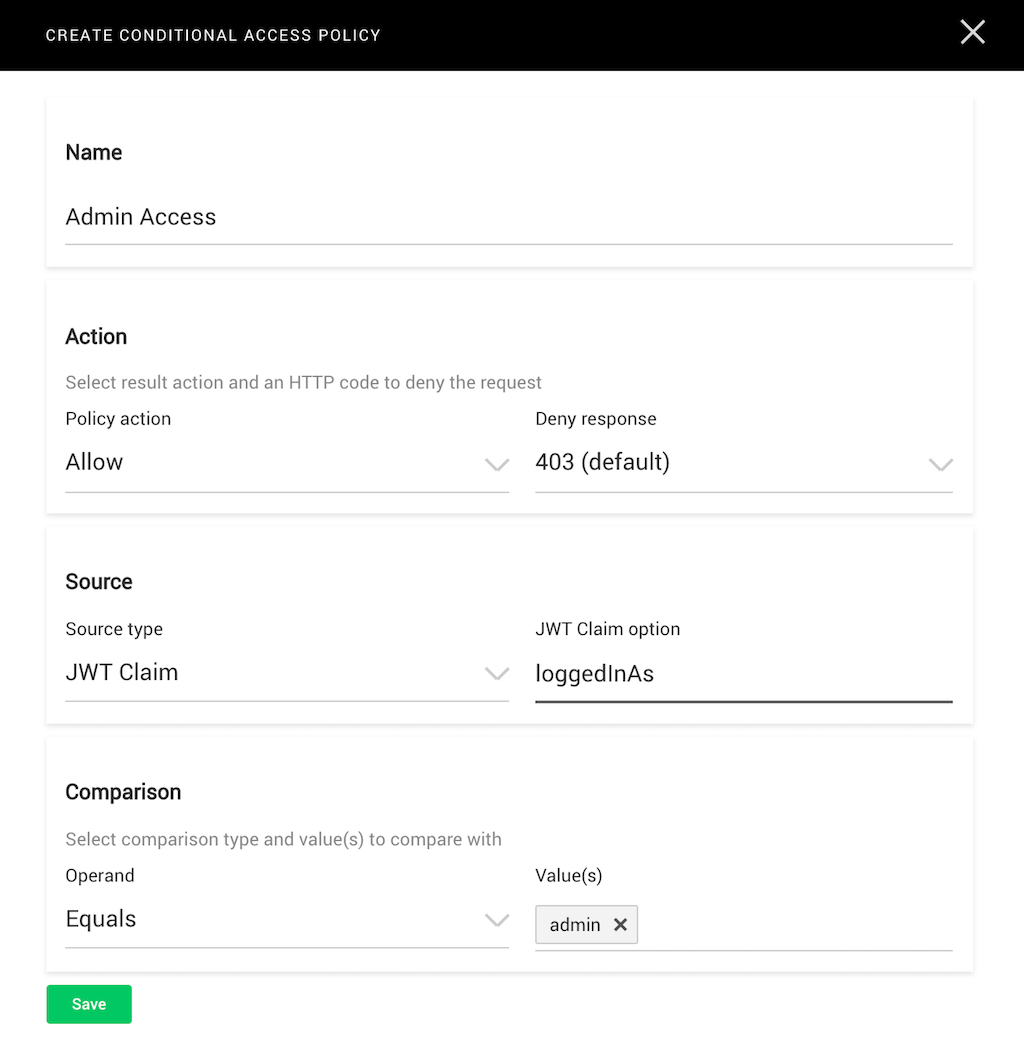

When you create a fine‑grained access policy specific to a resource, you specify the value that a particular claim must have for the policy to apply.

The policy in this screenshot grants access to the associated resource when the JWT claim loggedInAs has the value admin. If it does not, access is not granted.
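Expressed as code, a claim‑matching policy like this one is a simple comparison. The Python sketch below assumes the JWT's signature has already been validated and its claims decoded into a dictionary; the `ClaimPolicy` class and `policy_grants` function are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import Any, Mapping


@dataclass(frozen=True)
class ClaimPolicy:
    """A resource-level rule: the named claim must have exactly this value."""
    claim: str
    value: Any


def policy_grants(claims: Mapping[str, Any], policy: ClaimPolicy) -> bool:
    """Return True only when the claim is present and equals the required value."""
    return policy.claim in claims and claims[policy.claim] == policy.value


# The policy from the example above: loggedInAs must be admin.
admin_only = ClaimPolicy(claim="loggedInAs", value="admin")
```

A token whose claims include `{"loggedInAs": "admin"}` satisfies `admin_only`; a token with any other value, or without the claim at all, does not.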

Choosing Between API Keys and JWTs

API keys are a good choice for developer‑specific use cases. Because they are very easy to generate and use, they’re suitable for quickly prototyping APIs in dev and test environments. However, API keys are also easy to steal: when they are shared or stored in plaintext, anyone who has access to the authentication server can read them.

JWTs are superior to API keys, especially in production environments, because they’re stateless and so don’t need to be shared across a cluster of API gateways. Claims are encapsulated in the token itself and thus can be validated by each gateway without performing a lookup.

Protecting Your APIs with Rate Limits

API entry points are the first point of contact between your APIs and your API clients. Consequently, you need to take utmost care when specifying entry points, to avoid introducing new vulnerabilities into your API infrastructure. One way the API Management Module helps you in this regard – unlike some other API management solutions – is by prohibiting wildcards in entry point names (for example, *.example.com). Wildcards expose a large attack surface, making it easy for hackers to launch a distributed denial‑of‑service (DDoS) attack.

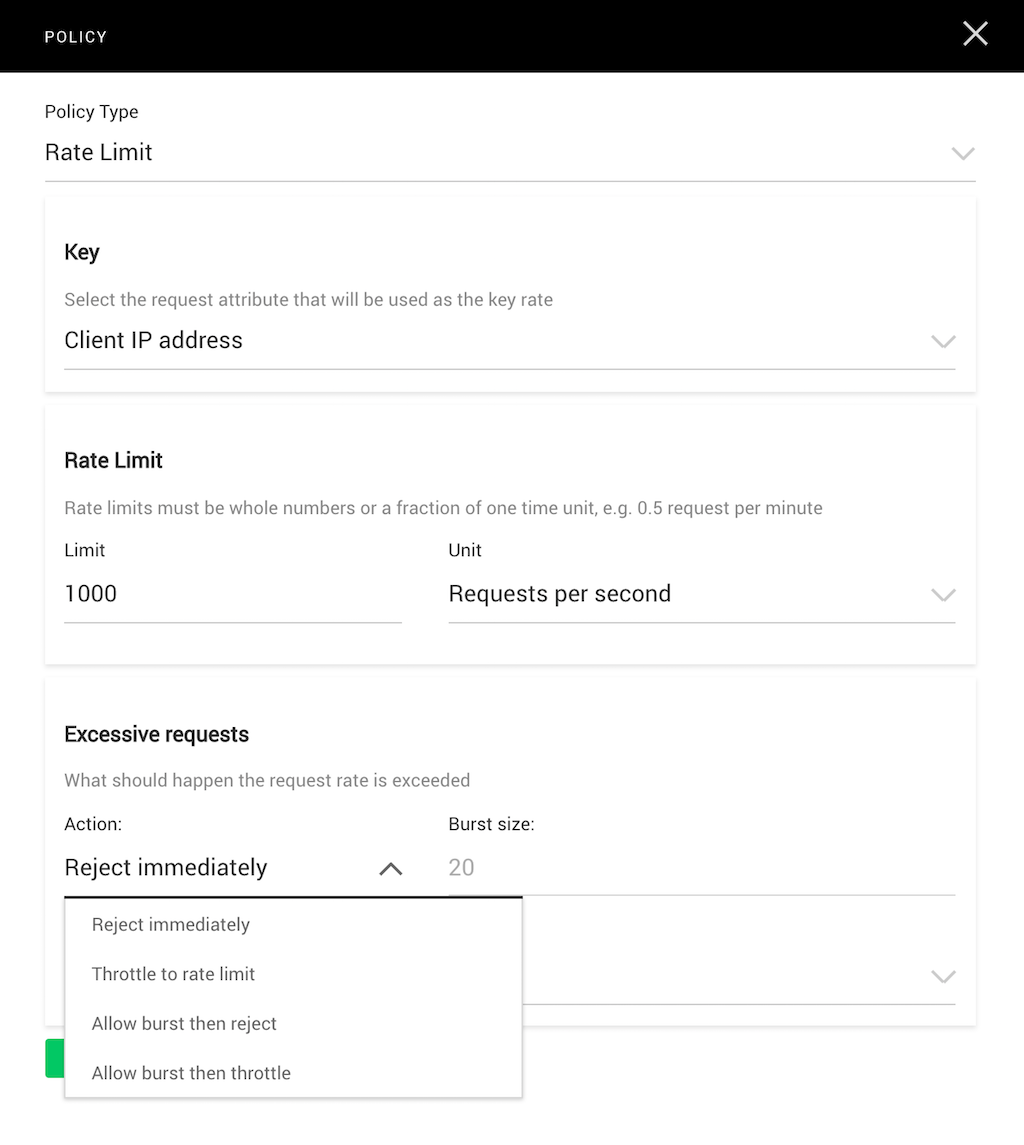

You can mitigate DDoS attacks by creating a rate‑limiting policy that sets a threshold on the number of requests the API gateway accepts each second (or other time period) from a specified source, such as a client IP address. You also define what happens when the threshold is reached – additional requests can be rejected or throttled to the rate limit, either immediately or after a burst of defined size.

The screenshot shows the rate‑limiting policy defined in the previous blog post; it accepts up to 1000 requests per second from each client IP address.
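To illustrate how a per‑client threshold with an optional burst behaves, here is a minimal token‑bucket sketch in Python. It is one common way to implement rate limiting and is not a description of how the API gateway does it internally; the `RateLimiter` class, its parameters, and the injectable `clock` are assumptions made for the example. This version rejects excess requests rather than throttling them.

```python
import time
from typing import Callable, Dict, Tuple


class RateLimiter:
    """Per-client token bucket: `rate` requests per second plus a burst allowance."""

    def __init__(self, rate: float, burst: int = 0,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = float(burst + 1)  # one request, plus any permitted burst
        self.clock = clock                # injectable so the limiter can be tested
        self._buckets: Dict[str, Tuple[float, float]] = {}  # ip -> (tokens, last_seen)

    def allow(self, client_ip: str) -> bool:
        """Return True to accept the request, False to reject it."""
        now = self.clock()
        tokens, last = self._buckets.get(client_ip, (self.capacity, now))
        # Refill in proportion to the time elapsed, never beyond capacity.
        tokens = min(self.capacity, tokens + (now - last) * self.rate)
        if tokens >= 1:
            self._buckets[client_ip] = (tokens - 1, now)
            return True
        self._buckets[client_ip] = (tokens, now)
        return False
```

A limiter created as `RateLimiter(rate=1000)` would approximate the policy in the screenshot, with each client IP address tracked independently.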

Protecting Your Backend Applications

It’s a best practice to use HTTPS for traffic between the API gateway and upstream servers. You specify HTTPS when defining the group of servers for a resource, as shown in the following screenshot.

Is your API environment secure? Have you suffered any attacks or breaches because of poorly secured APIs? We’d love to hear from you in the comments below. In the meantime, request a free 30‑day trial of NGINX Controller.