Talking with our customers over the past year, we’ve noticed a few recurring themes that motivate them to evaluate orchestration products such as NGINX Controller:

- Complexity – Managing, maintaining, validating, and applying large sets of individual configuration objects from different sources isn’t easy.

- Fragility – Scaling up can happen quickly, often in response to an event. Sometimes it’s strategic, but as in most ops departments, it’s more often unplanned and organic.

- Safety – Avoiding errors and catching problems before deployment to production are cornerstones of agile methodology. They also help mitigate complexity and fragility.

These are not concerns that customers generally face when they first deploy an application. Rather, they crop up over time, as the application becomes successful and needs to scale up. We find that scaling, or more precisely unplanned scaling, is the common root cause of the concerns listed above.

Fortunately, the NGINX Controller configuration object model addresses all three concerns:

- It reduces deployment complexity by gathering in a single location all the configuration components that might impact a specific set of NGINX Plus instances.

- It reduces fragility by enabling teams to validate and test configurations before placing them into production, making it easier to fail fast and ensuring that production user traffic keeps flowing.

- It promotes safety because it’s highly flexible. Instead of forcing you to adjust your processes to fit the model, the configuration object model is designed to align with your business workflows and division of responsibilities: you can grant each business unit ownership over the configuration elements appropriate to its role.

Controller Configuration Objects

Let’s dive a little deeper into some key configuration objects to give you a better idea of how the Controller model works.

Environments

In most organizations, software passes through multiple stages before release: development, user acceptance, pre‑production, production, and other stages of quality checking. These stages correspond to the NGINX Controller object called an Environment.

An Environment is an isolation zone for configuration elements within Controller. It’s commonly the level where role‑based access control (RBAC) is defined. Environments can carry identical configuration artifacts through the various stages while substituting minimal changes for things such as target servers, data centers, and other infrastructure objects that commonly differ between Environments.
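To make the "identical artifacts, minimal substitutions" idea concrete, here is a minimal sketch in Python. It is not the Controller API: `ComponentConfig`, `ENV_SERVERS`, `for_environment`, and the server addresses are all hypothetical. It shows one shared configuration artifact promoted through two Environments, where only the infrastructure-specific field (the target servers) changes.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class ComponentConfig:
    """Hypothetical, simplified Component settings (not the Controller API)."""
    uri: str
    session_persistence: bool
    workload_servers: tuple[str, ...] = ()

# Hypothetical per-Environment infrastructure values: the only things that differ per stage.
ENV_SERVERS = {
    "dev": ("10.0.1.10:8080",),
    "prod": ("10.1.1.10:8080", "10.1.1.11:8080"),
}

def for_environment(config: ComponentConfig, env: str) -> ComponentConfig:
    """Return a copy of the shared config with the Environment's servers substituted."""
    return replace(config, workload_servers=ENV_SERVERS[env])

# One shared artifact, promoted through two Environments.
shared = ComponentConfig(uri="/transfers", session_persistence=True)
dev = for_environment(shared, "dev")
prod = for_environment(shared, "prod")
```

Because the dataclass is frozen and `replace` returns a copy, the shared artifact itself never changes; each Environment gets its own derived copy that differs only in the substituted field.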

Gateways

A Gateway is a configuration object within an Environment, often used as the top level for defining how applications are delivered to customers – settings such as hostname, protocol, and TLS/SSL behavior – though such settings can also be made at a lower level. In practice, network operations (NetOps) teams, the folks who manage edge network appliances or the DMZ, most commonly own Gateway objects. Gateways also employ the concept of “Placement,” which is how you link Controller and the NGINX Plus instances that receive the configurations and do the actual work.
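Placement is essentially a mapping from Gateways to the NGINX Plus instances that should receive their configuration. The following sketch, assuming a hypothetical `Gateway` record whose `placement` field lists instance names, inverts that mapping so you can see which Gateways contribute to each instance's final configuration. The names and hostnames are illustrative only.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class Gateway:
    """Hypothetical Gateway record; placement lists NGINX Plus instance names."""
    name: str
    hostname: str
    placement: tuple[str, ...]

def gateways_by_instance(gateways):
    """Invert Placement: map each instance to the Gateways whose config it must receive."""
    result = defaultdict(list)
    for gw in gateways:
        for instance in gw.placement:
            result[instance].append(gw.name)
    return dict(result)

gateways = [
    Gateway("dev-east", "dev.example.com", ("east-1", "east-2")),
    Gateway("dev-west", "dev.example.com", ("west-1",)),
    Gateway("api", "api.example.com", ("east-1",)),
]
per_instance = gateways_by_instance(gateways)
```

Instance `east-1` ends up with two Gateways, which is exactly the case where their combined settings must be checked for compatibility before anything is pushed.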

Apps

The next level down is the App configuration object, where you begin to model applications and group traffic‑shaping behaviors. You can use as many or as few Apps as your organization needs. The only requirement is that each App must be unique within its Environment.
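The uniqueness rule is scoped to the Environment, so the same App can exist in "dev" and "prod" but not twice in "dev". A minimal sketch, assuming uniqueness is by App name and using a hypothetical `Environment` class (not Controller's implementation):

```python
class Environment:
    """Hypothetical container enforcing App uniqueness within one Environment."""

    def __init__(self, name: str):
        self.name = name
        self.apps: dict[str, dict] = {}

    def add_app(self, app_name: str) -> None:
        # Reject a second App with the same name inside this Environment only.
        if app_name in self.apps:
            raise ValueError(f"App {app_name!r} already exists in Environment {self.name!r}")
        self.apps[app_name] = {}

dev = Environment("dev")
prod = Environment("prod")
dev.add_app("referral")
prod.add_app("referral")  # same name, different Environment: allowed

try:
    dev.add_app("referral")  # same name, same Environment: rejected
    duplicate_rejected = False
except ValueError:
    duplicate_rejected = True
```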

Components

Within an App, Components describe the desired traffic‑shaping behavior for the App. In the simplest case, all the traffic for a given pathname is sent to the same group of servers. But Components also control more advanced shaping like header manipulation, URL rewriting, backend load‑balancing behaviors, cookie handling, and other settings. Hostname and TLS/SSL behavior can also be defined at this level.
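In the simplest case described above, a Component maps a pathname to a group of servers. The sketch below uses hypothetical `Component` records and picks the Component with the longest matching URI prefix, loosely modeled on how NGINX selects the longest matching prefix `location`. It ignores regex matching and the more advanced behaviors (header manipulation, rewrites, cookies) entirely.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Component:
    """Hypothetical Component: a URI prefix and the servers that handle it."""
    name: str
    uri_prefix: str
    servers: tuple[str, ...]

def route(components: list[Component], path: str) -> Optional[Component]:
    """Return the Component with the longest URI prefix matching the path, or None."""
    matches = [c for c in components if path.startswith(c.uri_prefix)]
    return max(matches, key=lambda c: len(c.uri_prefix), default=None)

components = [
    Component("web", "/", ("web-1:80",)),
    Component("transfers", "/transfers", ("xfer-1:8080", "xfer-2:8080")),
]
```

For example, `route(components, "/transfers/123")` selects the `transfers` Component, while `route(components, "/about")` falls through to `web`.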

Tying It Together

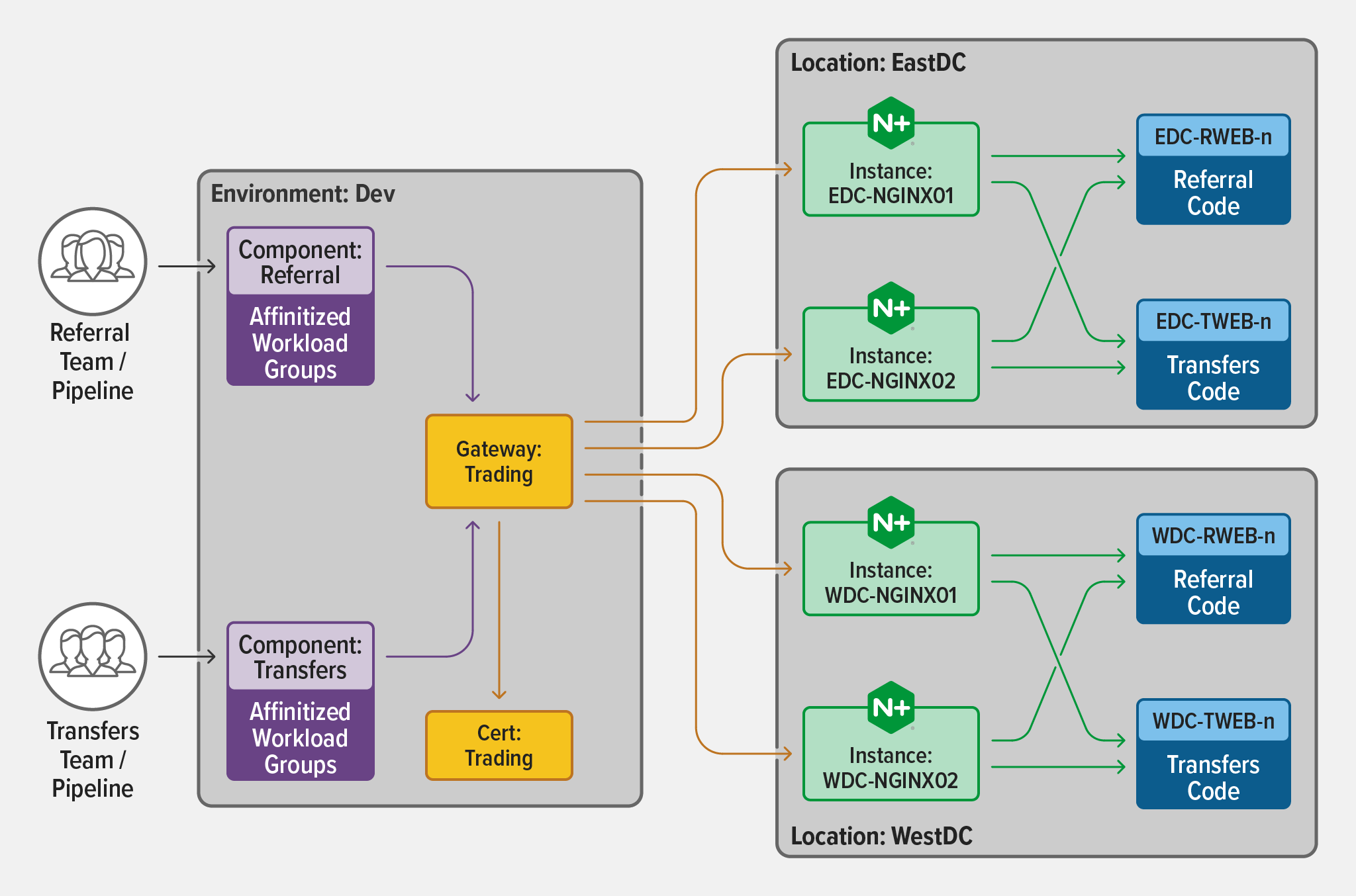

Here is a visual representation of the relationship between the configuration objects:

Notice that only two groups interact with Controller in this case: two development teams, Referral and Transfers. In reality, the number of people involved in application delivery and security is likely much larger, spanning networking and security (Platform Ops), DevOps, app development, and more. The teams with the most knowledge can set policies and build self‑service workflows that align with best practices and provide guardrails for the teams working with Apps, Components, and Gateways within the “Dev” Environment, which spans the organization’s East and West data centers.

Let’s look at this in a slightly different way, more from a line-of-business (LOB) perspective. LOB owners are increasingly making application decisions for modern apps.

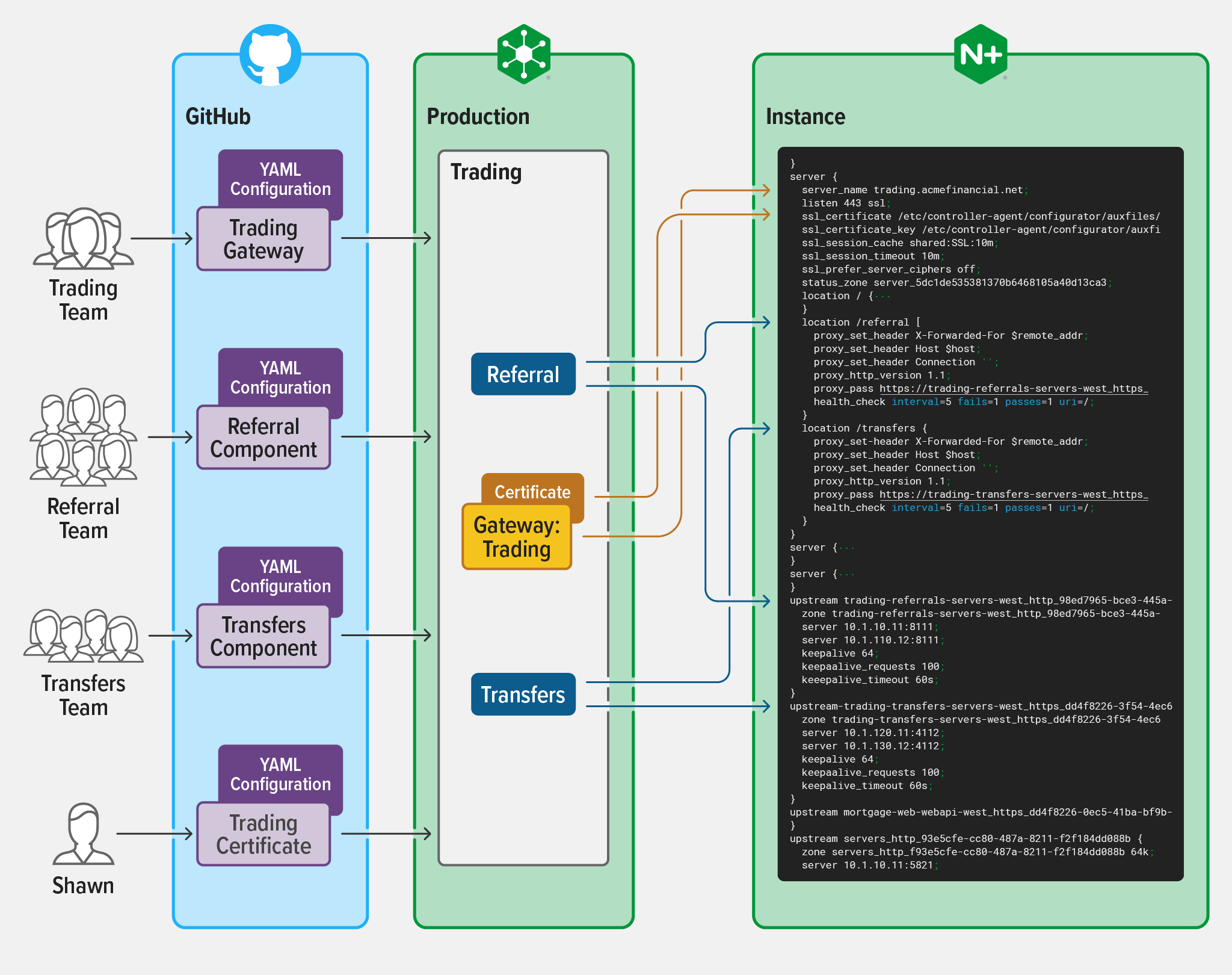

The following diagram illustrates a more true-to-life scenario that includes a much larger pool of users that includes various LOB teams and Shawn, the individual who manages all the certificate renewals.

Now, we have more individual actors and pipelines impacting the resulting data flow.

As each pipeline pushes object changes from source control (like GitHub) to Controller, Controller validates the configuration objects and combines them with any related objects before pushing the changes down to the NGINX Plus instances.

This is how Controller can help to make configuration management safer – by making sure that the latest changes are compatible with previous settings and settings from other application and LOB owners.

We realize that in practice, all the configurations applied to a single NGINX Plus instance must be able to coexist: settings must not conflict, overlap, or clash with each other. It is also important to catch conflicts up front and notify the people who provide the configuration for each individual Component.

In the example above, when the Referral Component tries to use the same regex pattern that the Transfers Component already implemented, a path conflict is caught right away, and the Referral team can be notified long before they attempt to deploy this configuration in production – avoiding the many headaches associated with a misconfiguration.
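The Referral/Transfers path conflict can be sketched as a registry keyed by hostname and URI pattern. This is a simplified illustration with hypothetical names (`ComponentSpec`, `Registry`, `ConflictError`), not how Controller implements validation: it only catches identical patterns, whereas detecting overlap between two *different* regexes is a much harder problem.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ComponentSpec:
    """Hypothetical Component submission: owner name, hostname, and URI regex."""
    name: str
    hostname: str
    uri_pattern: str

class ConflictError(Exception):
    pass

class Registry:
    """Tracks which Component owns each (hostname, URI pattern) pair."""

    def __init__(self):
        self._owners: dict[tuple[str, str], str] = {}

    def owner_of(self, hostname: str, uri_pattern: str):
        return self._owners.get((hostname, uri_pattern))

    def submit(self, spec: ComponentSpec) -> None:
        key = (spec.hostname, spec.uri_pattern)
        existing = self._owners.get(key)
        # Resubmitting your own Component is an update; claiming another's path is a conflict.
        if existing is not None and existing != spec.name:
            raise ConflictError(
                f"{spec.name} conflicts with {existing} on {spec.hostname} {spec.uri_pattern}"
            )
        self._owners[key] = spec.name

registry = Registry()
transfers = ComponentSpec("transfers", "bank.example.com", r"^/api/v1/.*$")
referral = ComponentSpec("referral", "bank.example.com", r"^/api/v1/.*$")
registry.submit(transfers)

try:
    registry.submit(referral)
    conflict = None
except ConflictError as err:
    conflict = str(err)  # reported to the Referral team before anything is deployed
```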

Regarding certificates, Controller’s RBAC functionality lets Shawn implement certificate changes immediately. The maintainers of the Gateway and Components only need to reference the correct certificates, and Shawn can manage those certificates with confidence on their own lifecycle. When Shawn updates a certificate in Controller, it is pushed out to the correct instances, avoiding the panic associated with an expired certificate.
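The key design point is that Components reference certificates by name, so the certificate owner can rotate them without touching anyone else's objects. A minimal sketch with a hypothetical role model (`ROLE_PERMISSIONS`, `USER_ROLES`, and `update_certificate` are illustrative, not Controller's RBAC API):

```python
# Hypothetical role model: role -> set of (resource type, action) pairs.
ROLE_PERMISSIONS = {
    "cert-admin": {("certificate", "read"), ("certificate", "write")},
    "app-dev": {("certificate", "read"), ("component", "write")},
}
USER_ROLES = {"shawn": {"cert-admin"}, "referral-dev": {"app-dev"}}

def allowed(user: str, resource: str, action: str) -> bool:
    """True if any of the user's roles grants the action on the resource type."""
    return any((resource, action) in ROLE_PERMISSIONS[role] for role in USER_ROLES.get(user, set()))

# Components hold a reference to a certificate name, not the certificate itself.
certificates = {"bank-tls": "cert-v1"}
component_cert_refs = {"referral": "bank-tls", "transfers": "bank-tls"}

def update_certificate(user: str, name: str, new_cert: str) -> list[str]:
    """Replace a certificate and return the Components that pick up the change."""
    if not allowed(user, "certificate", "write"):
        raise PermissionError(f"{user} may not modify certificates")
    certificates[name] = new_cert
    return sorted(c for c, ref in component_cert_refs.items() if ref == name)

affected = update_certificate("shawn", "bank-tls", "cert-v2")

try:
    update_certificate("referral-dev", "bank-tls", "cert-v3")
    dev_blocked = False
except PermissionError:
    dev_blocked = True
```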

Now that NGINX Controller has all your configuration objects and a Placement association to one or more NGINX Plus instances, it can perform the last steps: applying the configuration.

Safety in Practice

In the end, the goal of configuration management with Controller is to apply the correct configuration to the correct NGINX Plus instances in the correct location. Ensuring that Apps, Components, Gateways, and all the associated certificates and instances are configured correctly results in safer, more scalable deployments.

Final verification is the last part of the configuration management process that Controller makes easier and safer. When an object change affects an NGINX Plus instance (for example, enabling session persistence for a workload group on an NGINX Plus instance deployed as a proxy), the entire configuration is verified as it is applied to that instance, ensuring that the instance is compatible with the desired configuration. If it is, the configuration is applied. And for a last bit of safety, if anything fails while applying the configuration, Controller reverts to the previous configuration.
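The verify, apply, and revert sequence can be sketched as follows. Everything here is a hypothetical stand-in: `Instance.capabilities`, the `requires` key, and `fake_push` (which simulates delivering a config to NGINX Plus) are assumptions made for illustration, not Controller internals.

```python
class Instance:
    """Hypothetical NGINX Plus instance with a set of supported features."""

    def __init__(self, name, capabilities):
        self.name = name
        self.capabilities = set(capabilities)
        self.active_config = None

def apply_config(instance, config, push):
    """Verify compatibility, push the config, and revert to the previous one on failure."""
    missing = config.get("requires", set()) - instance.capabilities
    if missing:
        return f"rejected: {sorted(missing)}"  # incompatible: never pushed
    previous = instance.active_config
    try:
        push(instance, config)
        instance.active_config = config
        return "applied"
    except Exception:
        if previous is not None:
            push(instance, previous)  # restore the last known-good config
        instance.active_config = previous
        return "rolled back"

def fake_push(instance, config):
    # Stand-in for delivery to the instance; simulates a failure on request.
    if config.get("fail"):
        raise RuntimeError("reload failed")

proxy = Instance("east-1", {"session_persistence"})
r1 = apply_config(proxy, {"version": 1, "requires": {"session_persistence"}}, fake_push)
r2 = apply_config(proxy, {"version": 2, "fail": True}, fake_push)
r3 = apply_config(proxy, {"version": 3, "requires": {"waf"}}, fake_push)
```

After these calls the instance is still running version 1: version 2 failed and was rolled back, and version 3 was rejected before it was ever pushed.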

In addition to ensuring configurations pass muster, Controller can also take advantage of advanced NGINX capabilities, such as session draining, when a new configuration is applied – guaranteeing that sites maintain availability and performance through changes.

For operations teams, the concepts and workflow designs outlined above contribute to the safety that NGINX Controller offers around configuration management. These safeguards help align workflows and the various teams that work with modern apps, while also supporting the needs of the business.

To try NGINX Controller, start your free 30-day trial today or contact us to discuss your use cases.