Software is becoming more and more capable, and more and more complex. Software, as Marc Andreessen famously said, is eating the world.

As a result, approaches to application development and delivery have shifted significantly in the past few years. These shifts have been tectonic in scope, and have led to a set of principles that are very useful when building a team, implementing a design, and delivering your application to end users.

These principles can be summarized as keep it small, design for the developer, and make it networked. With these three principles, you can design a robust, complex application that can be delivered quickly and securely, scaled easily, and extended simply.

Each of these principles has its own set of facets that we will discuss, to show how each principle contributes to the end goal of quickly delivering robust applications that are easy to maintain. We will contrast each principle with its antithesis to help clarify what it means when we say something like, “Make sure you design using the small principle”.

We hope this blog post encourages you to adopt a set of principles for building modern applications that provides a unified approach to engineering in the context created by the modern stack.

By implementing the principles you’ll find yourself taking advantage of the most important recent trends in software development, including a DevOps approach to application development and delivery, the use of containers (such as Docker) and container orchestration frameworks (such as Kubernetes), microservices (including the NGINX Microservices Reference Architecture), and service mesh architectures for microservices applications.

What Is a Modern App?

Modern applications? Modern stack? What does “modern” mean exactly? Most of us have a sense of what makes up a modern application, but it’s worth positing a definition for the sake of the discussion.

A modern application is one that supports multiple clients – whether the client is a UI using the React JavaScript library, a mobile app running on Android or iOS, or a downstream application that connects to the application through an API. A modern application expects to have an undefined number of clients consuming the data and services it provides.

A modern application provides an API for accessing that data and those services. The API is consistent, rather than bespoke to different clients accessing the application. The API is available over HTTP(S) and provides access to all the features and functionality available through the GUI or CLI.

Data is available in a generic, consumable format, such as JSON. APIs represent the objects and services in a clear, organized manner – RESTful APIs or GraphQL do a good job of providing the appropriate kind of interface.

Modern applications are built on top of a modern stack, and the modern stack is one that directly supports this type of application – the stack helps the developer easily create an app with an HTTP interface and clear API endpoints. It enables the app to easily consume and emit JSON data. In other words, it conforms to the relevant elements of the Twelve‑Factor App for Microservices.

Popular versions of this type of stack are based on Java, Python, Node, Ruby, PHP, and Go. The NGINX Microservices Reference Architecture (MRA) provides examples of the modern stack implemented in each of these languages.

Keep in mind that we are not advocating a strictly microservices‑based application approach. Many of you are working with monoliths that need to evolve, while others have SOA applications that are being extended and evolved to be microservices applications. Still others are moving toward serverless applications, and some of you are implementing a combination of all of the above. The principles outlined in this discussion can be applied to each of these systems with some minor tweaks.

The Principles

Now that we have a shared understanding of the modern application and the modern stack, let’s dive into the architectural and developmental principles that will assist you in designing, implementing, and maintaining a modern application.

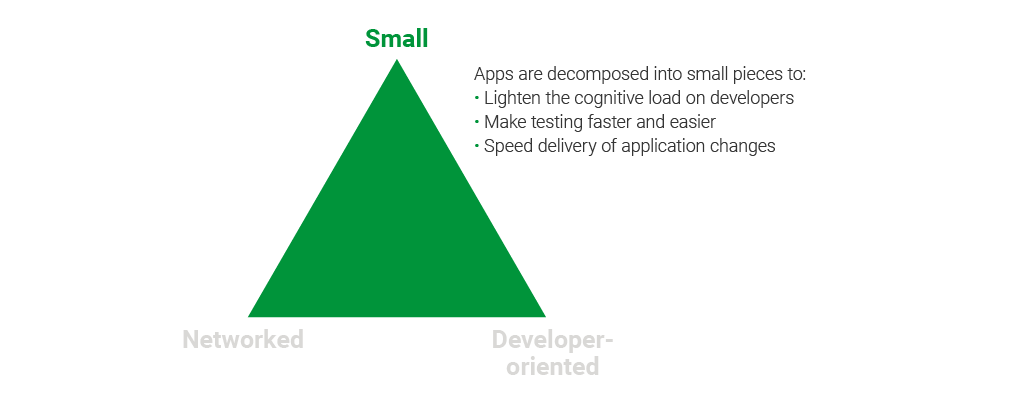

One of the core principles of modern development is keep it small, or just small for short. We have applications that are incredibly complex with many, many moving parts. Building the application out of small, discrete components makes the overall application easier to design, maintain, and manage. (Notice we’re saying “easier”, not “easy”.)

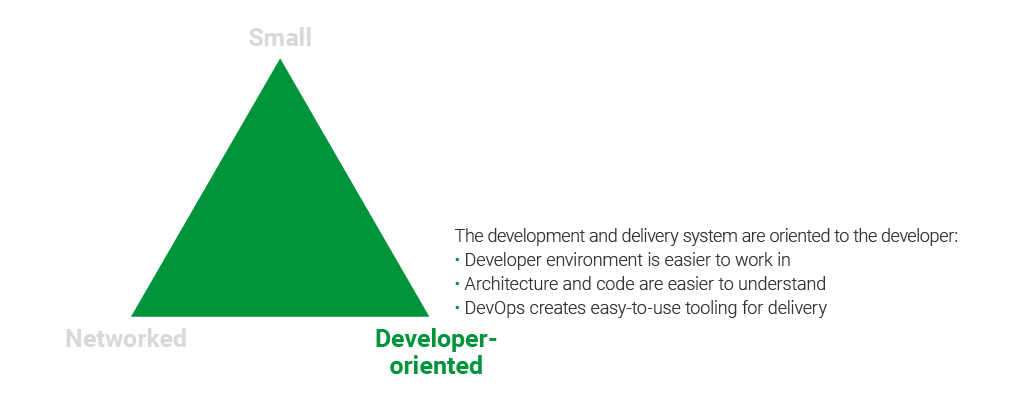

The second principle is that we can maximize developer productivity by helping them focus on the features they are developing and freeing them from concerns about infrastructure and CI/CD during implementation. So, our approach is developer‑oriented.

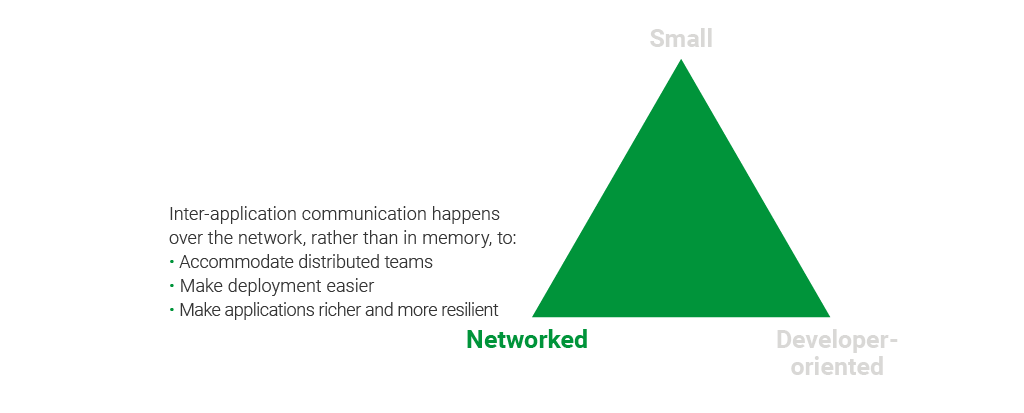

Finally, everything about your application should be networked. As networks have gotten faster, and applications more complex, over the past 20 years, we’ve been moving toward a networked future. As discussed earlier, the modern application is used in a network context by multiple different clients. Applying a networking mindset throughout the architecture has significant benefits that mesh well with small and developer‑oriented.

If you keep the principles of small, developer‑oriented, and networked in mind as you design and implement your application, you will have a leg up in evolving and delivering your application.

Let’s review these principles in more detail.

Principle 1: Small

The human brain has difficulty trying to consume too much information. In psychology, cognitive load refers to the total amount of mental effort being used to retain information in working memory. Reducing the cognitive load on developers is beneficial because it means that they can focus their energy on solving the problem at hand, instead of maintaining a complex model of the entire application, and its future features, in their minds as they solve specific problems.

There are a few ways to reduce the cognitive load that a developer must maintain, and it is here that the principle of small comes into play.

Three ways to reduce cognitive load on your development team are:

- Reduce the timeframe that they must consider in building a new feature – the shorter the timeframe, the lower the cognitive load

- Reduce the size of the code that is being worked on – less code means a lower cognitive load

- Simplify the process for making incremental changes to the application – the simpler the process, the lower the cognitive load

Short Development Timeframes

Back in the day, when waterfall development was the standard development process, timeframes of six months to two years for developing, or updating, an application were common. Engineers would typically read through relevant documents, such as the product requirements document (PRD), the system reference document (SRD), and the architecture plan, and start melding all of these things together into a cognitive model from which they would write code. As requirements changed, and the architecture and implementation shifted to keep up, the effort to keep the team up to speed and to maintain an updated cognitive model would become burdensome to the point of paralysis.

The biggest change in application development processes has been the adoption of agile development processes. One of the main features of an agile methodology is iterative development. This in turn has resulted in a reduction of the cognitive load that an engineer has to carry. Rather than requiring engineering teams to tackle the application in one fell swoop, over a very long period of time, the agile approach has enabled them to focus on small, bite‑sized chunks that can be tested and deployed quickly, eliciting useful feedback from customers. The cognitive load of the app shifted: from a six‑month to two‑year application timeframe, with a lot of detailed specifications, to a very specific two‑week feature addition or change, targeting a blurrier notion of the larger application.

Shifting the focus from a massive app to a feature that can be completed in a two‑week sprint, with at most the next sprint’s features also in mind, is a significant change, and one that has allowed engineers to be more productive and less burdened with a cognitive load that was constantly in flux. In agile development, the idea that an application will shift from the original conception is assumed, so the final instantiation is necessarily ambiguous; only the specific deliverables from each sprint can be completely clear.

Small Codebases

The next step in reducing an engineer’s cognitive load is reducing the size of the codebase. Modern applications are typically massive – a robust, enterprise‑grade application can have thousands of files and hundreds of thousands of lines of code. The interrelationships and interdependencies of the code and files may or may not be obvious, based on the file organization. Tracing the code execution itself can be problematic, depending on the code libraries used, and how well debugging tools differentiate between libraries/packages/modules and custom code.

Building a working mental model of the application code can take a significant amount of time, and again puts a significant cognitive load on the developer. This is particularly true of monolithic code bases, where the code base is large, interactions between functional components are not clearly defined, and separation of concerns is often blurred when functional boundaries are not strongly enforced.

One very effective way to reduce the cognitive load on engineers is to shift to development using microservices. With microservices, each service has the advantage of being very focused on one set of functionality; the service domain is typically very defined and understandable. The service boundaries are also very clear – remember, communication with a service can only happen via API calls – and effects generated by the internal operations of one service can’t easily leak over to another service.

Interaction with other services is also typically limited to a few consumer services and a few provider services, using clear and easily understood API calls via something like REST. This means that the cognitive load on an engineer is greatly reduced. The biggest challenge is understanding the service interaction models and how things like transactions occur across multiple services. Overall, the use of microservices reduces cognitive load significantly by reducing the total amount of code, having sharp and enforced service boundaries, and establishing clear relationships between consumers and providers.

Small Incremental Changes

The final element of the principle of small is managing change. It is very tempting for developers to look at a codebase (even – and perhaps especially – their own, older code) and declare, “this is crap, we should rewrite the whole thing”. Sometimes, that is the right thing to do, but often it is not. It also puts the burden of a massive model change on the engineering team, which, in turn, leads to a massive cognitive load problem. It’s better to get engineers to focus on the changes that they can effect in a sprint and deliver those over time, with the end product resembling the change originally envisioned, but tested and modified along the way to match customer need.

When rewriting large sections of code, it is sometimes not possible to deliver a feature because of dependencies on other systems. In order to keep the flow of changes moving, it is OK to implement feature hiding. Basically, this means deploying a feature to production, but making it inaccessible through an environment variable or some other configuration mechanism. As long as this code is production quality and has passed all quality processes, it is OK to deploy it in a hidden state. However, this strategy only works if the feature is eventually enabled. If it is not, then it constitutes cruft in the code, and adds to the cognitive load that the developer must endure to get useful work done. Managing change and keeping modifications incremental both help keep the cognitive load on developers at a reasonable level.
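As a minimal sketch of feature hiding, the Python snippet below gates a new code path behind an environment variable. The `FEATURE_` prefix, the `bulk_discount` flag, and the discount rule are all hypothetical names chosen for illustration; a real project might prefer a configuration service or a flag library.

```python
import os


def feature_enabled(name, env=os.environ):
    """Return True when FEATURE_<NAME> is set to a truthy value (assumed naming scheme)."""
    value = env.get(f"FEATURE_{name.upper()}", "")
    return value.strip().lower() in {"1", "true", "yes", "on"}


def checkout_total(prices, env=os.environ):
    """Sum the prices; apply the hidden discount only when the flag is on."""
    total = sum(prices)
    if feature_enabled("bulk_discount", env) and len(prices) >= 3:
        total *= 0.9  # hypothetical new behavior, deployed but hidden by default
    return round(total, 2)
```

The new code ships to production with the flag unset, so existing behavior is unchanged until someone sets `FEATURE_BULK_DISCOUNT=1`. Once the feature is permanently on, the flag check itself should be deleted so that it does not become cruft.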

Engineers have to deal with a lot of complexity in simply implementing a feature. As an engineering lead, removing extraneous cognitive load helps your team focus on the critical elements of the feature. The three things you can do as an engineering manager to help your development team are:

- Use the agile development process to limit the timeframe that a team must focus on in order to deliver a feature.

- Implement your application as a series of microservices, which limits the scope of features and enforces boundaries that keep cognitive load down during implementation.

- Promote incremental change over wholesale change, keeping changes in smaller, bite‑sized chunks. Allow feature hiding, so that changes can be implemented, even if they aren’t exposed immediately after they are added.

If you approach your development process with the principle of small, your team will be happier, more focused on implementing the features that are needed, and more likely to deliver higher‑quality code faster. This is not to say that things can’t get complex – indeed, implementing a feature that requires modifying multiple services can actually be more complex than if it were done in a monolith. However, the overall benefits of obeying the small principle will be worth it.

Principle 2: Developer-Oriented

The biggest bottleneck to rapid development is often not the architecture or your development process, but how little time your engineers get to spend focusing on the business logic of the feature they are working on. Byzantine and inscrutable code bases, excessive tooling/harnessing, and common social distractions are all productivity killers for your engineering team. You can make the development process more developer‑oriented – that is, you can free developers from distractions, making it easier for them to focus on the task at hand.

To get the best work out of your team, it is critical that your application ecosystem focuses on the following:

- Systems and processes that are easy to work with

- Architecture and code that are easy to understand

- DevOps support for managing tooling

Developer Environment

If your developer’s environment embodies these principles, you will have a productive team that can fix bugs, create new features, and move easily from one feature to the next without getting bogged down.

An example of an easy-to-work-with development environment:

- A developer clones a GitHub repo

- The developer runs a couple of commands from a makefile

- Tests run

- The application comes up and is accessible

- Code changes are apparent in the running application

In contrast, development environments that require significant effort to get all the components up and running, including setting up systems like databases, support services, infrastructure components, and application engines, are significant barriers to productivity.

Architecture and Code

Once the system is up and running, having a standard way to interface with application code is also critical. Although there is no formal standard for RESTful APIs, they typically have a few qualities that make them easy to work with:

- Endpoints are expressed as nouns, for example /image for an endpoint that provides access to images

- Create, read, update and delete (CRUD) operations use HTTP verbs:

  - GET for retrieving

  - POST for creating new components

  - PUT for adding or updating a component

  - PATCH for updating a subelement in a component

  - DELETE for deleting a component

- HTTP(S) as the protocol for accessing the API

- Data in JSON format

- An OpenAPI (better known as Swagger) UI, or similar, to see API documentation, examples of how to use the API, and fields to test the API

These are typical standard elements of a RESTful API and mean that developers can use their existing knowledge and tools (browsers, curl, etc.) to understand and manipulate the system. Contrast this with using a proprietary binary protocol using RPC‑like calls: developers would need new tools (if they can find them), the API could be a mix of nouns and verbs, API calls might be overloaded with options and have unclear side effects, the data returned could be binary/encoded/compressed/encrypted or otherwise indecipherable.
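The conventions listed above can be sketched in a few lines of Python. This is an illustrative, framework-free dispatcher rather than a production server: the `handle` and `respond` functions, the in-memory `store` dictionary, and the choice of status codes are assumptions made for the example, built around the /image endpoint mentioned earlier.

```python
import json
import uuid


def respond(status, payload=None):
    """Return (status, JSON text); the body is empty when there is no payload."""
    return status, ("" if payload is None else json.dumps(payload))


def handle(method, path, body, store):
    """Map an HTTP verb on /image or /image/<id> to a CRUD operation on store."""
    parts = [p for p in path.split("/") if p]
    if not parts or parts[0] != "image" or len(parts) > 2:
        return respond(404, {"error": "not found"})
    image_id = parts[1] if len(parts) == 2 else None
    fields = json.loads(body) if body else {}

    if image_id is None:  # collection endpoint: /image
        if method == "GET":
            return respond(200, list(store.values()))
        if method == "POST":  # create a new component with a generated id
            image_id = uuid.uuid4().hex
            store[image_id] = {**fields, "id": image_id}
            return respond(201, store[image_id])
        return respond(405, {"error": "method not allowed"})

    if method == "PUT":  # add or replace the whole component
        status = 200 if image_id in store else 201
        store[image_id] = {**fields, "id": image_id}
        return respond(status, store[image_id])
    if image_id not in store:
        return respond(404, {"error": "not found"})
    if method == "GET":
        return respond(200, store[image_id])
    if method == "PATCH":  # update only the supplied fields
        store[image_id].update({**fields, "id": image_id})
        return respond(200, store[image_id])
    if method == "DELETE":
        del store[image_id]
        return respond(204)
    return respond(405, {"error": "method not allowed"})
```

For example, `handle("POST", "/image", '{"name": "cat.png"}', {})` returns status 201 and the stored record as JSON. Because the noun stays fixed and only the verb changes, a developer can guess most of the API after seeing one endpoint.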

Of course, there are other standards emerging, such as GraphQL, which address some of the shortcomings of RESTful APIs – specifically, the ability to access and query across multiple objects – but focusing on getting API clarity with REST is a good start for an application.

A microservices application typically has the following characteristics: components and infrastructure are containerized (for instance, in Docker images), the APIs between services are RESTful, and their data is formatted in JSON. With the proper instrumentation, this system is fairly easy for a developer to work with.

Easy-to-understand is a corollary to the above concept of easy-to-work-with. But what does easy-to-understand really mean? There are many ways in which code can be difficult to understand – the algorithms can be quite intricate, the interactions between components can be convoluted, or the logical model can be multidimensional. All of these are intrinsically complex aspects of your code and cannot be filtered out – usually, this type of complexity is what you want your developers to be focusing on.

The keys to making your code and architecture easy to understand have to do with having clear separation of concerns. A user‑management service should focus on managing user information. A billing‑management service should focus on billing. And while a billing‑management service may need user information to do its job, it should not have the user‑management service bound into its code. Having a clear separation of concerns is critical to making the code and architecture clear.

Another key to making your code and architecture easy to understand is to have a single mechanism for interacting with your system services: that is, a single interface for accessing data and functions. When the data managed by a service can be modified in a variety of ways, for example through method calls or by directly modifying the database, making changes becomes challenging, because it is not always clear how a change in the database schema will impact other parts of the app. Having a single way to access data and functions clarifies all these issues.
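A rough Python sketch of these two ideas follows. The class names, the sample user record, and the `get_user` method are hypothetical; in a microservices deployment the call to `get_user` would be an HTTP request to the user‑management service, and the in-process version here only stands in for that boundary.

```python
from typing import Protocol


class UserDirectory(Protocol):
    """The only thing billing is allowed to know about user management."""

    def get_user(self, user_id: str) -> dict: ...


class UserService:
    """Owns the user data; everything else reaches it through get_user()."""

    def __init__(self):
        # Private storage: no other component reads or writes this directly.
        self._users = {"u1": {"id": "u1", "name": "Ada", "country": "DE"}}

    def get_user(self, user_id: str) -> dict:
        # Return a copy so callers cannot mutate the service's own record.
        return dict(self._users[user_id])


class BillingService:
    """Focuses on billing; depends on the interface, not on user storage."""

    def __init__(self, users: UserDirectory):
        self._users = users

    def invoice(self, user_id: str, amount: float) -> dict:
        user = self._users.get_user(user_id)
        return {"bill_to": user["name"], "amount": amount}
```

Because `BillingService` sees only `get_user`, the user‑management side can change how it stores records without any change to billing, as long as the returned fields stay the same.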

Implementing application services as microservices provides both a clear interaction model and a clear separation of concerns. Microservices, by definition, are focused on specific tasks. When they are sized correctly, they provide a good mechanism for separating concerns. Microservices also present a single interface for accessing data and utilizing functions – typically a RESTful API. Engineers who are familiar with RESTful APIs and the HTTP verbs that drive them can readily understand how to use these microservices and become productive quickly.

Contrast this with a monolith, where engineers have access to all the layers of the application code – from APIs to data structures, methods/functions, and object‑relational mapping (ORM) and/or data layers for data access. Without strict management of coding standards and data/function access, it is very easy for components to overlap and interfere with other parts of the application.

It is very common, for example, to make a local optimization where one part of the app writes data directly to a table in a database in order to accomplish something specific. This code often sits apart from the main part of the service that manages the table and so is not considered in a later refactoring. In microservices, this kind of mistake becomes much less likely.

DevOps Tools

Beyond making your app easy to understand and easy to work with, one of the ways to improve productivity on an engineering team is to reduce the time developers spend on their own infrastructure. Developers who don’t have environments that are easy-to-work-with from the start must invariably spend time making the environment easy-to-work-with-for-them. Making engineers responsible for getting the system up and running, maintaining scripts, writing makefiles, and maintaining a CI/CD pipeline is a great way to have them get lost in a labyrinth that should be the domain of DevOps.

As a more desirable alternative, having DevOps embedded with the engineering team means that there is a person or group dedicated to managing the more complex aspects of the development infrastructure. Especially when working with complex systems like microservice applications, it is critical to have someone focused on managing the development environment infrastructure. DevOps can focus on ensuring various desiderata:

- The makefile installs all components

- Every service builds properly

- Orchestration files load the containers in the right order

- Data stores are initialized

- Up-to-date components are installed

- Secure practices are being followed

- Code is tested before deployment

- Code is built and packaged for production

- Development environments mirror production as much as possible

By shifting infrastructure management from the engineers to DevOps, you can keep your engineers focused on developing features and fixing bugs rather than yak shaving.

Principle 3: Networked

Application design has been shifting over time. It used to be that applications were used and run on the systems that hosted them. Mainframe/minicomputer applications, desktop applications, and even Unix CLI applications ran in a local context. Connecting to these systems via a network interface gradually became a feature, but was often thought of as a necessary evil, and was generally considered to be “slower”.

However, as applications have become larger, both development and delivery have become more and more distributed. In response to the increasing speed and reliability of networks of all kinds, applications have become more and more networked: first by shifting from a single server to a three‑tier architecture, then to a service‑oriented architecture (SOA), and now to microservices. But the concern about networking applications “slowing things down” has persisted.

Even given that networks are slower than communication in a local context – though not to the degree they used to be – applications have been getting more and more networked. The reason for this is that networking your application architecture makes it more resilient, as well as making deployment and management easier.

Now, before diving into the benefits of networking, it is worth addressing the concerns about networking your application architectures.

One of the biggest concerns around networking has been speed – accessing a component over a network is still an order of magnitude slower than accessing that same component in memory. However, modern data centers have high‑speed networking between virtual machines, which is dramatically faster than previous generations of networking. And companies like Google are working hard to make the latency for networking requests closer to that for in‑memory requests.

Even accessing third‑party services, once very slow, is now much faster, with peering connections that are significantly faster than 1 Gbps. With the most popular third‑party services hosted in POPs across the globe, services are typically only a few network hops away. And, if you are hosting your application in a public cloud such as AWS, you get the benefit of many other services running in the same data centers as your application. Speed is not the issue that it once was, and can be optimized significantly with techniques like query optimization and multiple levels of caching.
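To illustrate one level of caching, here is a small time‑to‑live cache in Python that sits in front of a slow network lookup. It is a sketch under stated assumptions: the `TTLCache` class, its `fetch` callback, and the 30‑second default are invented for the example, and a real deployment would more likely rely on a caching proxy or a shared cache service.

```python
import time


class TTLCache:
    """Remember the result of a slow lookup for ttl seconds."""

    def __init__(self, fetch, ttl=30.0, clock=time.monotonic):
        self._fetch = fetch    # function that performs the real (slow) request
        self._ttl = ttl
        self._clock = clock    # injectable so that tests can control time
        self._entries = {}     # key -> (expires_at, value)

    def get(self, key):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]    # still fresh: no network round trip
        value = self._fetch(key)
        self._entries[key] = (now + self._ttl, value)
        return value
```

Repeated calls to `get` with the same key inside the TTL window are answered from memory, so only the first call pays the network cost. The trade‑off is that callers may see data that is up to `ttl` seconds old.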

One of the other concerns about networking has been that network protocols are opaque. Networking protocols commonly used in the past were often proprietary, application‑specific, or both, making them difficult to debug and optimize. The ubiquity of HTTP, and the greater power and accessibility added in its latest versions, have made HTTP networking very powerful, yet still accessible to anyone who has a browser or can issue a curl command. Engineers know how to connect, send data, modify headers, route data, and load balance HTTP connections. With the wide distribution of HTTP, networking has become accessible to the common man. With SSL/TLS and compression, you also get a secure and binary‑efficient protocol that is easy to use (harkening back to making your architectures developer‑oriented).

Now that we have dealt with the elephants in the room of speed and opacity, let’s review the benefits of a networked architecture: it makes your application more resilient, easier to deploy, and easier to manage.

More Resilient

By incorporating networking deeply in your architecture, you make it more resilient, especially if you design using the principles described in the Twelve‑Factor App for Microservices. By implementing twelve‑factor principles in your application components, you get an application that can easily scale horizontally and that is easy to distribute your request load against.

This means that you can easily have multiple instances of all of your application components running simultaneously, without fear that the failure of one of them might cause an outage of the entire application. Using a load balancer like NGINX, you can monitor your services, and make sure that requests go to healthy instances. You can also easily scale up the application based on the bottlenecks in the system that are actually being taxed: you don’t have to scale up all of the application components at the same time, as you would with a monolithic application.
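The idea of sending requests only to healthy instances can be sketched as a round‑robin picker in Python. This is a toy model and not how NGINX implements load balancing: the `RoundRobinBalancer` class and its `mark` and `pick` methods are hypothetical, and the health signal is assumed to come from some external monitor.

```python
import itertools


class RoundRobinBalancer:
    """Rotate through service instances, skipping any marked unhealthy."""

    def __init__(self, instances):
        self._instances = list(instances)
        self._healthy = set(self._instances)
        self._cycle = itertools.cycle(self._instances)

    def mark(self, instance, healthy):
        """Record the latest health-check result for one instance."""
        if healthy:
            self._healthy.add(instance)
        else:
            self._healthy.discard(instance)

    def pick(self):
        """Return the next healthy instance, or raise if none is available."""
        for _ in range(len(self._instances)):
            candidate = next(self._cycle)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy instances")
```

When one instance fails its health check, traffic keeps flowing to the others, which is the resilience property described above. Adding capacity for one overloaded service is then a matter of adding instances to that service's balancer only.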

Easier to Deploy

By networking your application, you also make deployment simpler. The testing regime for a single service is significantly smaller (or simpler) than for an entire monolithic application. Service testing is much more like unit or functional testing than the full regression‑testing process required by a monolith. If you are embracing microservices, it means that your application code is packaged in an immutable container that is built once (by your trusted DevOps team), that moves through the CI/CD pipeline without modification, and that runs in production as built.

Easier to Manage

Networking your application also makes management easier. Compared to a single monolith which can fail or need scaling in a variety of ways, a networked, microservices‑oriented application is easier to manage. The component parts are now discrete and can be monitored more easily. The intercommunication between the parts is conducted via HTTP, making it easy to monitor, utilize, and test.

Monitoring tools like NGINX Controller or NGINX Amplify effectively provide quantified data about your services and the request loads moving among them. It is also easy to scale a networked application; using tools like Kubernetes, you can scale individual services rather than having to deploy entire application monoliths.

Networked applications provide many benefits over monolithic applications, and some of the concerns around networked applications have proven to be unfounded in the modern application environment. Networked applications are more resilient because, with proper design, they provide high availability from the get‑go. Networked applications are easier to deploy because you are typically only deploying single components and don’t have to go through the entire regression process when deploying a single service. Finally, networked applications are easier to manage because they are easier to instrument and monitor. Scaling your application to handle more traffic typically becomes a process of scaling individual services rather than entire applications. And the concerns around performance, especially given modern data center hardware, network optimization, and service peering, are reduced, if not entirely eliminated.

How NGINX Fits In

There are a number of tools that facilitate modern application development. One is containers, with deployment of Docker containers becoming standard practice for much application development and deployment. Another is the cloud and cloud services, with public cloud providers like Amazon Web Services (AWS), Google Cloud Platform, and Microsoft Azure skyrocketing in popularity.

NGINX is another of these tools, and, like the others, it’s used in both development and deployment. NGINX, Docker, and public cloud have all grown together, with NGINX, for instance, being the most popular download on Docker Hub, and NGINX software powering more than 40% of deployments on AWS. AWS directly supports a popular load‑balancing implementation that combines the AWS Network Load Balancer (NLB) and NGINX.

But how did NGINX become so popular, and how does it fit with our core principles of modern application development? We’ll tell that story here as best we can, though all NGINX users have their own reasons for adopting it.

NGINX Open Source first became available in 2004, with the commercial version, NGINX Plus, first released in 2013. The use of NGINX software has grown and grown, and NGINX is now used on most of the web’s most popular websites, including nearly two‑thirds of the 10,000 busiest.

This period of growth parallels almost exactly the emergence of modern application development and its principles: small, developer‑oriented, and networked. Why has NGINX grown so fast during this period?

In this case, correlation is not causation – at least, not entirely. Instead, the underlying reason for the growth of modern application development, and the increased use of NGINX, is the same: the incredibly rapid growth of the Internet. Roughly 10% of US retail commerce is now conducted online, and online advertising affects the vast majority of purchases. Nearly all of the great business success stories of the last few decades have been Internet‑enabled, including the rise of several of the most valuable companies in the world, the FANG group – Facebook, Apple, Netflix, and Google (now the core of the Alphabet corporation). Amazon and the recent, rapid growth of Microsoft are additional Internet‑powered success stories.

NGINX benefits from the growth of the Internet because it’s incredibly useful for powering busy, fast‑growing sites that provide very rapid user response times. There are two use cases. In the first, NGINX replaces an Apache or Microsoft Internet Information Services (IIS) web server, leading to much greater performance, capacity, and stability.

In the second use case, NGINX is placed as a reverse proxy server in front of one or more existing web servers (which might be Apache, IIS, NGINX itself, or nearly anything else). This way, the reverse proxy server handles Internet traffic – much more capably than most web servers – and the web server only has to handle application server and east‑west information transfer duties. As a reverse proxy server, NGINX also provides traffic management, load balancing, caching, security, and more – offloading even more duties from the application and other internal servers. The entire infrastructure now works better.

Both use cases are more attractive to busier, more successful websites than to smaller sites. That’s why it’s the busiest sites, such as Netflix, that tend to use NGINX more, with most of the world’s large websites running NGINX.

There’s also an additional, complementary use case: the use of NGINX at the core of publicly available content distribution networks (CDNs), as shown by this panel discussion from last year’s NGINX conference, as well as internal CDNs created for the private use of large websites. These largely NGINX‑powered CDNs have made an additional contribution to the performance, capacity, and stability of the entire Internet.

These uses of NGINX – as web server, as reverse proxy server, and at the heart of many CDNs – have contributed immeasurably to the growth of the Internet. Because of NGINX, the Internet, as used by people every day, is faster, more stable, more reliable, and more secure.

But NGINX has also grown, in part, directly because of its support for our core principles of application development:

- Small. NGINX works well with small, focused services, because NGINX itself is very small. It’s also very fast, hardly slowing down under heavy loads. This encourages the deployment of small application services, API gateways – some API management tools use NGINX at their core – and, of course, microservices. NGINX is widely used in different microservices network architectures, as an Ingress controller for Kubernetes, and as a sidecar proxy in our own Fabric Model and in service mesh models.

- Developer‑oriented. This is a huge reason for the growth of NGINX. Among other benefits, with NGINX, developers can manage load balancing directly, throughout the development and deployment of their apps. This applies whether the app is on‑premises, in the cloud, or both. Hardware load balancers – also called application delivery controllers (ADCs) – are typically managed by network personnel, who have to follow extensive, time‑consuming procedures before implementing a change. With NGINX, developers can make changes instantly, gaining flexibility and retaining control. This isn’t the only reason that NGINX is popular with developers – all of the other reasons for the growth of NGINX given here apply too, along with a fair amount of admiration for the solid work of the core NGINX development and support teams. But accessible, inexpensive load balancing capability may be the single largest reason.

- Networked. As applications have put more and more of their interservice traffic over the network, the way in which NGINX works to connect services has become a huge part of its popularity with developers. NGINX has always allowed a very high degree of control over HTTP, and has more recently led with support for SPDY, the predecessor to HTTP/2, and HTTP/2 itself. TCP and UDP support has also been added.

Doing all this, while bringing a small memory footprint, speed, security, and stability in all of the many use cases where it’s applied, has made NGINX a very large part of the growth of the Internet and a strong supporting force in the emergence of modern application development.

Conclusion

As we have seen, the principles of building a modern application are pretty simple. They can be summarized as keep it small, design for the developer, and make it networked. With these three principles, you can design a robust, complex application that can be delivered quickly, scaled easily, secured effectively, and expanded simply. NGINX software is a widely used tool for implementing these principles.