It’s been a while since our previous update, but by no means were we sitting on our hands. In today’s blog post, we discuss the most notable features of NGINX Unit released this autumn. If you stop reading this post after the first paragraph, here’s the key takeaway: now, you can run ASGI apps, use multithreaded request processing with applications, and include regular expressions in your configuration! That being said, let’s proceed in a more orderly fashion, reviewing the new features in NGINX Unit 1.19.0 through 1.21.0.

Python: ASGI Support

The first major upgrade revolves around Asynchronous Server Gateway Interface (ASGI), a (relatively) new programming interface that aims to bring coherence and uniformity to servers, frameworks, and applications that use Python’s async capabilities.

The interface was envisioned as a successor to the widely popular WSGI. However, unlike HTTP‑specific WSGI, ASGI is protocol‑agnostic. For example, it enables running WebSocket apps just as smoothly as HTTP‑based ones; this is covered in more detail below.

NGINX Unit’s history with ASGI started at version 1.20.0, which introduced support for ASGI 3.0. To use it, point NGINX Unit to an ASGI‑compatible callable in your Python app’s module.

NGINX Unit 1.21.0, still hot from the oven, extends the support to legacy ASGI implementations that rely on the two‑callable scheme. Despite the differences between the two versions of the interface, the configuration JSON stays the same: for a legacy ASGI app with two callables, reference the first, one‑argument callable in your configuration. NGINX Unit makes this possible by inferring the interface version (WSGI, ASGI 2.0, or ASGI 3.0) from the callable’s signature. If the inference fails, you can use the new protocol option to tell NGINX Unit what to expect. This is especially useful if the app’s ASGI implementation logic is complex enough to throw off NGINX Unit’s attempts.
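For illustration, here is a minimal sketch that builds such a configuration in Python and saves it as asgi_config.json, the file uploaded with curl further down. The application name (asgi_app), the path (/www/asgi/), and the module name (asgi) are hypothetical. "protocol": "asgi" is the explicit hint described above; drop it to let NGINX Unit infer the interface on its own.

```python
import json

# Hypothetical layout: the app module lives in /www/asgi/asgi.py and exposes
# a callable named "application" (NGINX Unit's default callable name)
config = {
    "listeners": {
        # Requests arriving on port 80 go straight to the application
        "*:80": {"pass": "applications/asgi_app"}
    },
    "applications": {
        "asgi_app": {
            "type": "python 3",
            "path": "/www/asgi/",
            "module": "asgi",
            # Optional hint; omit it to rely on signature-based inference
            "protocol": "asgi",
        }
    },
}

with open("asgi_config.json", "w") as f:
    json.dump(config, f, indent=4)
```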

We won’t be delving into the nitty‑gritty details of ASGI itself. Instead, let’s wrap this part up with a compact Python web app that, given a JSON array of URLs, returns a JSON object that maps each URL to its response code (or to an error message if the connection fails).
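Here is a minimal sketch of such an app, written against the ASGI 3.0 single‑callable interface. It assumes the third‑party aiohttp package is installed; the helper names fetch_status() and make_app() are hypothetical, and the factory exists only so that the HTTP lookup can be swapped out in tests. Errors other than aiohttp.ClientError (a timeout, for instance) are not handled in this sketch.

```python
import asyncio
import json


async def fetch_status(url):
    """Return the HTTP status of url as a string, or "connection error"."""
    import aiohttp  # third-party package, imported lazily in this sketch

    try:
        async with aiohttp.ClientSession() as session:
            async with session.get(url) as response:
                return str(response.status)
    except aiohttp.ClientError:
        return "connection error"


def make_app(fetch):
    """Build an ASGI 3.0 app that checks the URLs listed in the request body."""

    async def app(scope, receive, send):
        if scope["type"] == "lifespan":
            # Acknowledge startup and shutdown events if the server sends them
            while True:
                message = await receive()
                if message["type"] == "lifespan.startup":
                    await send({"type": "lifespan.startup.complete"})
                elif message["type"] == "lifespan.shutdown":
                    await send({"type": "lifespan.shutdown.complete"})
                    return

        assert scope["type"] == "http"

        # Collect the request body, which may arrive in several chunks
        body = b""
        more_body = True
        while more_body:
            message = await receive()
            body += message.get("body", b"")
            more_body = message.get("more_body", False)

        urls = json.loads(body)
        # Query all URLs concurrently; gather() keeps the input order
        codes = await asyncio.gather(*(fetch(url) for url in urls))

        await send({
            "type": "http.response.start",
            "status": 200,
            "headers": [(b"content-type", b"application/json")],
        })
        await send({
            "type": "http.response.body",
            "body": json.dumps(dict(zip(urls, codes)), indent=4).encode(),
        })

    return app


# NGINX Unit looks for a callable named "application" by default
application = make_app(fetch_status)
```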

The app uses the asyncio and aiohttp modules; the former is part of Python’s standard library, while the latter builds on it to provide asynchronous HTTP functionality. Let’s plug the module into NGINX Unit and see what happens:

```
# curl -X PUT --data-binary @asgi_config.json --unix-socket /var/run/control.unit.sock http://localhost/config
{
    "success": "Reconfiguration done."
}
```

So far, so good. Now, here’s a test run with a list of URLs from urls.json:

```
# curl -X PUT --data-binary @urls.json http://localhost
{
    "http://google.com": "200",
    "http://nginx.com": "200",
    "http://twitter.com": "200",
    "http://aliensstolemycow.com": "connection error"
}
```

Above we mentioned the protocol‑agnostic nature of ASGI. The ASGI specification defines a WebSocket connection scope, so WebSocket apps can run over ASGI just as HTTP apps do. Let’s illustrate this with a barebones version of the online chat from Apache Tomcat’s WebSocket examples.

Even stripped of all non‑essentials, this is a bit lengthy, so we’ll briefly discuss what it does:

- The script contains both the Python code for the ASGI server and the JavaScript/HTML code for the client page.

- The server has two ASGI connection scopes: http and websocket. When you connect via HTTP, the client page is served by the app itself, which suits our purpose here. In general, though, static content is better served with NGINX Unit’s share option, as that avoids calling the app at all.

- The client code establishes a WebSocket connection to the server and runs a messaging event loop of sorts, displaying the updates it receives from the server.

- The WebSocket portion of the server code accepts new connections, broadcasts new user notifications to existing users, and receives user messages and broadcasts them to all users.
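A minimal sketch with the structure just described might look like this: a single ASGI 3.0 callable that serves a tiny HTML/JavaScript client over the http scope and relays chat messages over the websocket scope. The page markup, the GuestN naming scheme, and the broadcast() helper are hypothetical. The connected clients are kept in an in‑memory set, so the sketch only works within a single application process; with several processes, users would end up in separate chat rooms.

```python
import itertools

# Minimal client page; element IDs and layout are illustrative only
CLIENT_PAGE = b"""<!DOCTYPE html>
<html>
<body>
<input id="message"> <button id="send">Send</button>
<pre id="log"></pre>
<script>
  // Connect back to the host and path that served this page
  const scheme = location.protocol === "https:" ? "wss://" : "ws://";
  const ws = new WebSocket(scheme + location.host + location.pathname);
  const log = document.getElementById("log");
  const input = document.getElementById("message");
  ws.onmessage = (event) => { log.textContent += event.data + "\\n"; };
  document.getElementById("send").onclick = () => {
    ws.send(input.value);
    input.value = "";
  };
</script>
</body>
</html>"""

clients = set()                 # send() callables of connected WebSocket users
guest_ids = itertools.count(1)  # source of GuestN names


async def broadcast(text):
    # Iterate over a copy so that joins and leaves don't break the loop
    for client_send in list(clients):
        await client_send({"type": "websocket.send", "text": text})


async def application(scope, receive, send):
    if scope["type"] == "lifespan":
        while True:
            message = await receive()
            if message["type"] == "lifespan.startup":
                await send({"type": "lifespan.startup.complete"})
            elif message["type"] == "lifespan.shutdown":
                await send({"type": "lifespan.shutdown.complete"})
                return

    if scope["type"] == "http":
        # Serve the client page from the app itself
        await send({
            "type": "http.response.start",
            "status": 200,
            "headers": [(b"content-type", b"text/html; charset=utf-8")],
        })
        await send({"type": "http.response.body", "body": CLIENT_PAGE})
        return

    # websocket scope: the first event must be websocket.connect
    if (await receive())["type"] != "websocket.connect":
        return
    await send({"type": "websocket.accept"})
    name = f"Guest{next(guest_ids)}"
    await broadcast(f"* {name} has joined.")  # reaches existing users only
    clients.add(send)
    try:
        while True:
            message = await receive()
            if message["type"] == "websocket.receive":
                # Relay the message to everyone, including the sender
                await broadcast(f"{name}: {message.get('text') or ''}")
            elif message["type"] == "websocket.disconnect":
                break
    finally:
        clients.discard(send)
```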

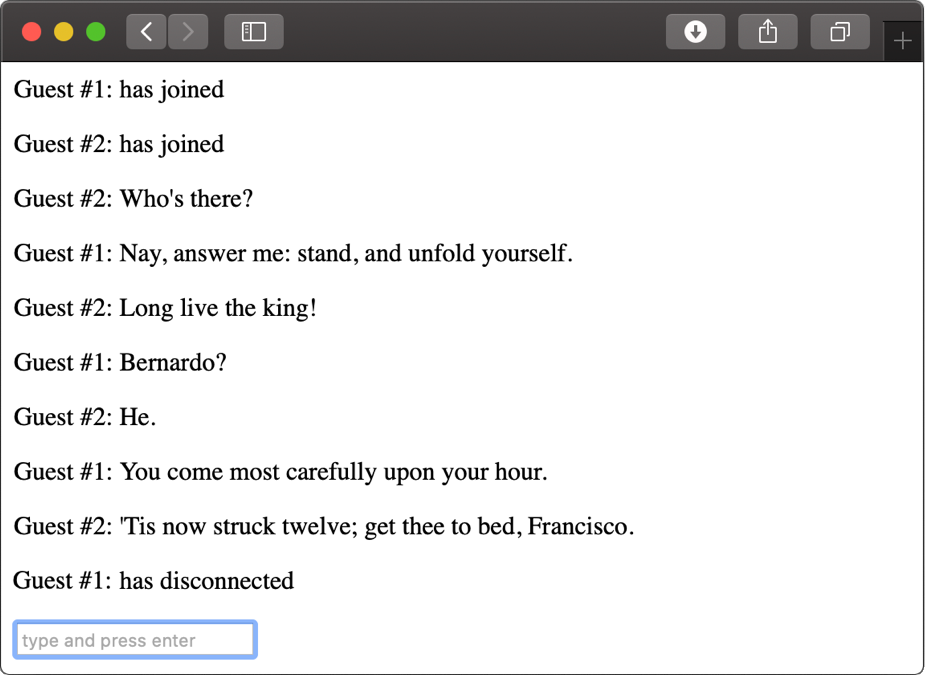

Finally, let’s see how this all looks:

Works fine for these two guys, apparently. (The Shakespeare lovers among you might recognize them as the sentries at Elsinore Castle who open Hamlet with these lines. Async or not async? That’s not even a question.)

Java, Perl, Python, Ruby: Multithreaded Request Handling

Another massive enhancement is NGINX Unit’s new support for scaling applications in threads, not just processes. With the release of NGINX Unit 1.21.0, you can fine‑tune your language runtime’s performance by running its instances in several threads within an application process. So far, this mechanism is supported only for Java, Perl, Python, and Ruby; other languages may follow suit.

The threading behavior of the application processes is controlled by the app‑specific threads configuration option.
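As a sketch, here is an application object that uses the option, built in Python for convenience. The application name, path, and module are hypothetical, and "processes": 2 with "threads": 6 is just one possible combination. The total_threads() helper is likewise hypothetical and assumes processes is a plain number rather than the object form used for dynamic process scaling.

```python
import json

# Hypothetical Python app with a fixed process count and six threads each
app = {
    "type": "python 3",
    "path": "/www/py/",
    "module": "wsgi",
    "processes": 2,  # fixed number of application processes
    "threads": 6,    # worker threads inside each process
}


def total_threads(app_config):
    """Worker threads across all processes (static process count assumed)."""
    return app_config["processes"] * app_config["threads"]


print(json.dumps({"applications": {"py_app": app}}, indent=4))
print(total_threads(app))  # 12
```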

The option sets the number of worker threads per application process, so an app configured with two processes and six threads per process runs 2×6=12 threads in total, with the threads handling requests concurrently. Note that NGINX Unit does not scale the number of threads automatically (as it can for app processes): the value you set remains static throughout the entire lifetime of a process.

An additional customization called thread_stack_size is available for Java, Perl, and Python; exactly as its name suggests, it sets the size (in bytes) of an application thread’s stack.
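To put the byte value in perspective, here is a hypothetical app object with a 256 KiB thread stack, plus a rough back‑of‑the‑envelope helper. The stack_reservation() function is an illustrative estimate of the stack space configured for the worker threads; it is not a measurement of actual memory use, and the application fields other than threads and thread_stack_size are hypothetical.

```python
# Hypothetical Python app; thread_stack_size is given in bytes
app = {
    "type": "python 3",
    "path": "/www/py/",
    "module": "wsgi",
    "processes": 2,
    "threads": 6,
    "thread_stack_size": 256 * 1024,  # 262144 bytes, i.e. 256 KiB per thread
}


def stack_reservation(app_config):
    """Rough total of stack space configured for all worker threads, in bytes."""
    return (app_config["processes"] * app_config["threads"]
            * app_config["thread_stack_size"])


print(app["thread_stack_size"])         # 262144
print(stack_reservation(app) // 1024)   # 3072 (KiB)
```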

PCRE-Style Regular Expressions for Routing

NGINX Unit 1.21.0 introduced what may be the longest‑awaited feature on our list – regular expressions (regexes). Currently, regexes are supported only where needed most: in routing conditions. Remember that routes funnel incoming requests through steps that comprise optional matching conditions and respective actions. That’s where regexes come into play.

NGINX Unit previously supported only the minimum necessary wildcards and matching modifiers, but those days are over. Now, you can unleash the full power of regexes on the unsuspecting request properties that NGINX Unit makes available, with the notable exception of source, destination, and scheme. The last one doesn’t need regex support because it has only two possible settings: http and https. The first two properties are IP address‑based, so applying regexes to them probably doesn’t readily come to mind anyway.

Let’s see how regex support allows you to streamline your NGINX Unit configuration. Consider this example:

This snippet is taken from a real app configuration that predates NGINX Unit 1.21.0. Most of it is self‑explanatory: if the request URI matches these patterns (with a few exceptions prefixed by exclamation marks), return the 403 response code. Straightforward? Yes, but quite redundant and unwieldy.
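A hypothetical route step of that shape, written as a Python dict so that its matching can be checked, might look like this. The patterns are illustrative rather than taken from the app in question. uri_matches() is a simplified model that assumes a URI matches when it fits at least one plain pattern and none of the "!"‑prefixed ones, with "*" as the only wildcard; NGINX Unit’s normalization is not modeled.

```python
import re

# Hypothetical pre-1.21.0 route step: globs only, exceptions negated with "!"
glob_step = {
    "match": {
        "uri": [
            "*.pm",
            "*.pl",
            "*/CVS/*",
            "*/.svn/*",
            "/data/*",
            "!/data/assets/*.css",
            "!/data/assets/*.js",
            "!/data/webdot/*.dot",
            "!/data/webdot/*.png",
        ]
    },
    "action": {"return": 403},
}


def glob_matches(pattern, value):
    """Match a glob whose only wildcard is "*" against the whole value."""
    regex = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.fullmatch(regex, value) is not None


def uri_matches(patterns, uri):
    """Simplified model: no negated pattern matches, and some plain one does."""
    positive = [p for p in patterns if not p.startswith("!")]
    negative = [p[1:] for p in patterns if p.startswith("!")]
    if any(glob_matches(p, uri) for p in negative):
        return False
    return not positive or any(glob_matches(p, uri) for p in positive)


for uri in ["/lib/Util.pm", "/data/params", "/data/assets/app.css", "/index.cgi"]:
    blocked = uri_matches(glob_step["match"]["uri"], uri)
    print(uri, "403" if blocked else "next step")
```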

A route step that uses regexes to achieve the same goal looks like this:

You can see that regexes make the matching rule much more compact. Moreover, you can combine this approach with glob‑like patterns where appropriate (as in the "!/data/webdot/*.png" pattern here).

A few words about the syntax of the regexes themselves: behind the scenes, NGINX Unit relies on Perl Compatible Regular Expressions (PCRE) to implement them (unless you tinker with the compilation options), so the grammar is well established. However, the patterns are written as JSON strings, so they must be properly escaped; for example, the regex \. that matches a literal dot is written as "\\." in the configuration. Also, notice that property values are normalized prior to matching: NGINX Unit decodes percent‑encoded characters, adjusts case, merges adjacent slashes, and so on.
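Here is a hypothetical regex counterpart of such a step, again as a Python dict. The "~" prefix marks a pattern as a regex, and printing a pattern with json.dumps() shows the doubled backslashes it needs inside a JSON configuration file. pattern_matches() and uri_matches() are simplified, hypothetical models: Python’s re module stands in for PCRE (the two agree on the lookahead, alternation, and anchors used here), regexes are assumed to match anywhere in the value unless anchored, and normalization is skipped.

```python
import json
import re

# Hypothetical regex-based route step: "~" marks a regex, "!" negates
regex_step = {
    "match": {
        "uri": [
            "~\\.p[lm]$",           # Perl sources
            "~/(CVS|\\.svn)/",      # version control metadata
            "~^/data/(?!assets/.*\\.(css|js)$|webdot/.*\\.dot$)",
            "!/data/webdot/*.png",  # plain globs still work alongside regexes
        ]
    },
    "action": {"return": 403},
}


def pattern_matches(pattern, value):
    if pattern.startswith("~"):
        # Assumption: unanchored search unless the regex uses ^ or $
        return re.search(pattern[1:], value) is not None
    # Glob with "*" as the only wildcard, matched against the whole value
    regex = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.fullmatch(regex, value) is not None


def uri_matches(patterns, uri):
    """Simplified model: no negated pattern matches, and some plain one does."""
    positive = [p for p in patterns if not p.startswith("!")]
    negative = [p[1:] for p in patterns if p.startswith("!")]
    if any(pattern_matches(p, uri) for p in negative):
        return False
    return not positive or any(pattern_matches(p, uri) for p in positive)


# JSON serialization doubles each backslash, as in the configuration file
print(json.dumps(regex_step["match"]["uri"][0]))  # "~\\.p[lm]$"
```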

Other Improvements

Automatic Mounting of Directories when the Filesystem Root Changes

NGINX Unit’s process isolation feature enables you to change the filesystem root with the rootfs option. To ensure the application remains operational after the filesystem is pivoted, several directories are mounted automatically by default; they include the app language runtime’s dependencies such as Python’s sys.path directories, as well as procfs and tmpfs directories.

If the app being isolated doesn’t need such behavior, you can disable these mountpoints with the new automount option.
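A sketch of an isolation object that turns all three kinds of automatic mounts off might look like this. It assumes the automount option takes the Boolean fields language_deps, procfs, and tmpfs, as in NGINX Unit’s documentation; check the docs for your version. The rootfs path is hypothetical, and any other isolation settings your setup may need are omitted.

```python
import json

# Hypothetical isolation settings for an app whose new root is /www/sandbox/
isolation = {
    "rootfs": "/www/sandbox/",
    "automount": {
        "language_deps": False,  # skip runtime dependencies such as sys.path dirs
        "procfs": False,         # skip mounting procfs
        "tmpfs": False,          # skip mounting tmpfs
    },
}

print(json.dumps({"isolation": isolation}, indent=4))
```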

PHP: Support for fastcgi_finish_request()

NGINX Unit 1.21.0 also introduced support for the PHP fastcgi_finish_request() function. It enables async processing in PHP by flushing the response data and finalizing the response before proceeding to tasks that might take a while but produce no output.

Typically, the fastcgi_finish_request() call follows the code that produces the user‑visible response and precedes a potentially time‑consuming invocation; thus, users get their response without the connection being kept waiting for the slow task.
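For Python readers, a rough ASGI analogue of this pattern is to send the final http.response.body message with more_body set to False: per the ASGI specification, the server then treats the response as complete, while the app’s coroutine keeps running. This is an analogy, not fastcgi_finish_request() itself; send_welcome_email() and the log list are hypothetical stand‑ins for a slow task that produces no output for the client.

```python
import asyncio

log = []  # records what the slow task did, for illustration


async def send_welcome_email(user):
    # Hypothetical slow task whose result the client never sees
    await asyncio.sleep(0.1)
    return f"email sent to {user}"


async def application(scope, receive, send):
    assert scope["type"] == "http"
    await send({"type": "http.response.start", "status": 200,
                "headers": [(b"content-type", b"text/plain")]})
    # more_body=False completes the response, much like fastcgi_finish_request()
    await send({"type": "http.response.body",
                "body": b"Thanks for signing up!\n", "more_body": False})
    # The client already has its answer; the slow work happens afterward
    log.append(await send_welcome_email("user@example.com"))
```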

HTTP Headers Can Include Extended Characters

Built with security in mind, NGINX Unit previously restricted valid HTTP request header field names to alphanumeric characters and hyphens, which, as it eventually turned out, interfered with the few apps that rely on unconventional header field names. In NGINX Unit 1.21.0, this behavior is controlled by a new configuration option called discard_unsafe_fields. By default, it is set to true, so NGINX Unit removes such header fields from requests as before, but you can disable it by setting it to false in the http object of the settings section.
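Here is a sketch of the corresponding settings object, together with a hypothetical is_safe_field_name() helper that models the restriction exactly as described above (letters, digits, and hyphens only). The helper is for illustration and is not NGINX Unit’s own validation code.

```python
import json
import re

# Keep header fields with unconventional names in incoming requests
config_settings = {"settings": {"http": {"discard_unsafe_fields": False}}}

# Model of the rule described in the text: letters, digits, and hyphens only
SAFE_NAME = re.compile(r"[A-Za-z0-9-]+")


def is_safe_field_name(name):
    """Hypothetical helper: True if the field name passes the described rule."""
    return SAFE_NAME.fullmatch(name) is not None


print(json.dumps(config_settings, indent=4))
for name in ["X-Request-Id", "X_Legacy_Token", "X.Custom"]:
    print(name, is_safe_field_name(name))
```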

Python: New callable Option

The new callable option for Python apps explicitly names the callable to launch. Previously, NGINX Unit required the callable to be named application, which was a pain point for some users, especially when third‑party apps were involved. Starting with NGINX Unit 1.20.0, you can specify a different name (for example, app). You can omit the callable option if your callable is named application.
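As a sketch, here is a hypothetical WSGI module whose entry point is named app, along with an application object that points NGINX Unit at it via the callable option. The path and module name are illustrative.

```python
import json


# wsgi.py (hypothetical module): the entry point is named app, not application
def app(environ, start_response):
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"Hello from a callable named app\n"]


# Matching application object; "callable" names the entry point explicitly
unit_app = {
    "type": "python 3",
    "path": "/www/py/",
    "module": "wsgi",
    "callable": "app",
}

print(json.dumps(unit_app, indent=4))
```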

Configuration Variables for Listener and Route Steps

NGINX Unit 1.19.0 enhanced the request routing mechanism in a minor but powerful way: now, a listener or a route step can be defined using configuration variables. Currently, there are three of them: $host, $method, and $uri. The names speak for themselves, so let’s focus on how they affect NGINX Unit’s routing mechanics.

For now, variables can only occur in pass destinations. At runtime, NGINX Unit replaces them with the request’s normalized Host header value, HTTP method name, and URI, respectively, thus enabling simple yet versatile routing schemes. For example, a configuration can rely on the $host variable, populated from the incoming request, to choose between local (localhost) and remote (www.example.com) requests.
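Here is a sketch of such a scheme. The listener passes requests to routes/$host, so each route is named after a host. Serving localhost from a local directory and proxying www.example.com to another server are illustrative choices; the share path and the proxy address are hypothetical. resolve_pass() is a simplified, hypothetical model of the substitution that assumes $host normalization means stripping the port and lowercasing the name.

```python
import json
import re

config = {
    "listeners": {
        # $host is replaced with the request's normalized Host header value
        "*:80": {"pass": "routes/$host"}
    },
    "routes": {
        "localhost": [{"action": {"share": "/www/local/"}}],
        "www.example.com": [{"action": {"proxy": "http://192.0.2.10:8080"}}],
    },
}


def resolve_pass(pass_value, request):
    """Hypothetical model of variable substitution in a pass value."""
    host = re.sub(r":\d+$", "", request["host"]).lower()  # assumed normalization
    variables = {"$host": host, "$method": request["method"], "$uri": request["uri"]}
    for name, value in variables.items():
        pass_value = pass_value.replace(name, value)
    return pass_value


for host in ["localhost", "WWW.Example.com:80"]:
    target = resolve_pass(config["listeners"]["*:80"]["pass"],
                          {"host": host, "method": "GET", "uri": "/"})
    route = config["routes"][target.split("/", 1)[1]]
    print(host, "->", target, route[0]["action"])
print(json.dumps(config, indent=4))
```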

Conclusion

This was a brief review of NGINX Unit’s most recent enhancements. The story goes on, though: among the things that we’re working on right now are an operational metrics API and keepalive connection caching for upstreams.

As always, we invite you to check out our roadmap, where you can find out whether your favorite feature is going to be implemented any time soon, and rate and comment on our in‑house initiatives. Feel free to open new issues in our repo on GitHub and share your ideas for improvement.

For a complete list of the changes and bug fixes in releases 1.19.0, 1.20.0, and 1.21.0, see the NGINX Unit changelog.

NGINX Plus subscribers get support for NGINX Unit at no additional charge. Start your free 30-day trial today or contact us to discuss your use cases.