Running and managing microservices applications in containers at scale across a cluster of machines is a challenging task. Kubernetes helps you meet the challenge by giving you a powerful solution for container orchestration. It includes several important features, such as fault tolerance, autoscaling, rolling updates, storage, service discovery, and load balancing.

In this blog post we explain how to use NGINX Open Source or NGINX Plus with Ingress, the built‑in Kubernetes load‑balancing framework for HTTP traffic. Ingress enables you to configure rules that control the routing of external traffic to the services in your Kubernetes cluster. You can choose any load balancer that provides an Ingress controller, which is software you deploy in your cluster to integrate Kubernetes and the load balancer. Here we show you how to configure load balancing for a microservices application with Ingress and the Ingress controllers we provide for NGINX Plus and NGINX.

[Editor – The previously separate controllers for NGINX and NGINX Plus have been merged into a single Ingress controller for both.]

In this blog post we examine only HTTP load balancing for Kubernetes with Ingress. To learn more about other load‑balancing options, see Load Balancing Kubernetes Services with NGINX Plus on our blog.

Note: Complete instructions for the procedures discussed in this blog post are available at our GitHub repository. This post doesn’t go through every necessary step, but instead provides links to those instructions.

Ingress Controllers for NGINX and NGINX Plus

Before we deploy the sample application and configure load balancing for it, we must choose a load balancer and deploy the corresponding Ingress controller.

An Ingress controller is software that integrates a particular load balancer with Kubernetes. We have developed an Ingress controller for both NGINX Open Source and NGINX Plus, now available on our GitHub repository. There are also other implementations created by third parties; to learn more, visit the Ingress Controllers page at the GitHub repository for Kubernetes.

For complete instructions on deploying the NGINX or NGINX Plus Ingress controller in your cluster, see our GitHub repository.

The Sample Microservices Application

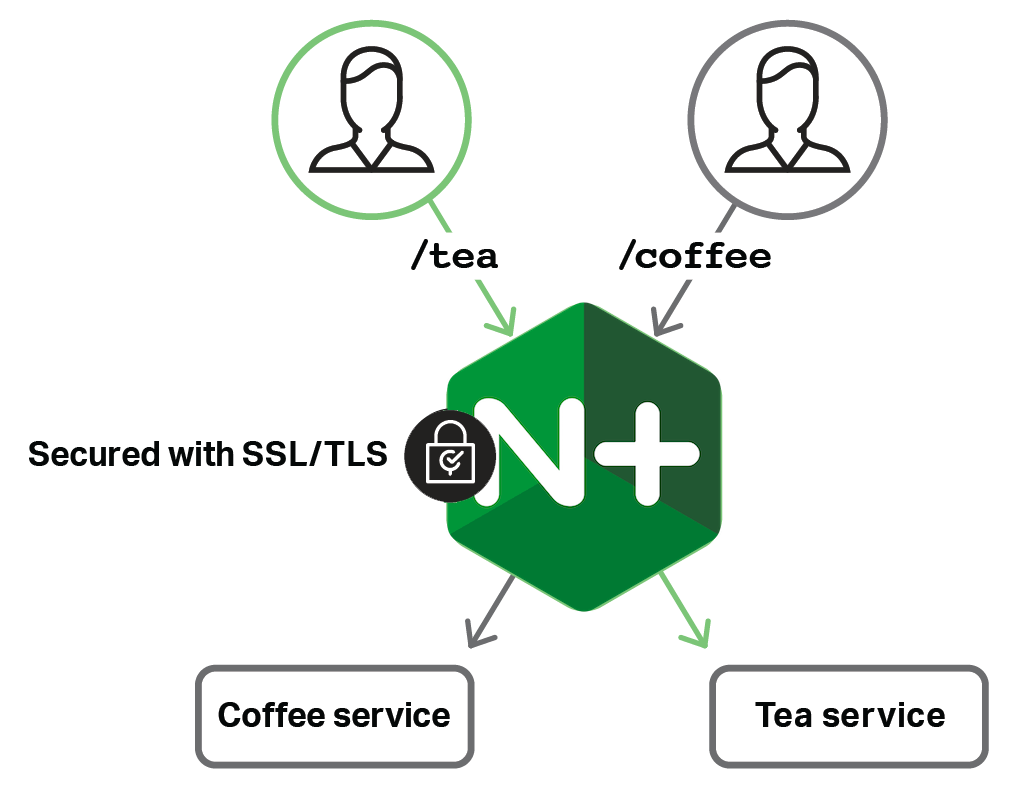

Our sample application is a typical microservices web application that consists of multiple services, each deployed separately. The application, called cafe, lets you order either tea via the tea service or coffee via the coffee service. You indicate your drink preference with the URI of your HTTP request: URIs ending with /tea get you tea and URIs ending with /coffee get you coffee. Connections to the application must be secured with SSL/TLS.

The diagram below conceptually depicts the application, with the NGINX Plus load balancer playing the important role of routing client requests to the appropriate service as well as securing client connections with SSL/TLS.

To deploy the application in your cluster, follow the instructions on our GitHub repository.

Configuring Kubernetes Load Balancing via Ingress

Our cafe app requires the load balancer to provide two functions:

- Routing based on the request URI (also called path‑based routing)

- SSL/TLS termination

To configure load balancing with Ingress, you define rules in an Ingress resource. The rules specify how to route external traffic to the services in your cluster.

In the resource you can define multiple virtual servers, each for a different domain name. A virtual server usually corresponds to a single microservices application deployed in the cluster. For each server, you can:

- Specify multiple path‑based rules. Traffic is sent to different services in an application based on the request URI.

- Set up SSL/TLS termination by referencing an SSL/TLS certificate and key.
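To make "multiple virtual servers in one resource" concrete, here is a minimal sketch of the rules section of an Ingress that defines two virtual servers. It uses the networking.k8s.io/v1 field layout; the second domain (shop.example.com) and its service (shop-svc) are hypothetical and exist only for illustration.

```yaml
# Fragment of an Ingress spec (networking.k8s.io/v1 field layout).
# Each entry under "rules" with its own host becomes a separate virtual server.
rules:
- host: cafe.example.com          # virtual server for the cafe app
  http:
    paths:
    - path: /tea                  # path-based rule within this virtual server
      pathType: Prefix
      backend:
        service:
          name: tea-svc
          port:
            number: 80
- host: shop.example.com          # hypothetical second application and domain
  http:
    paths:
    - path: /
      pathType: Prefix
      backend:
        service:
          name: shop-svc          # hypothetical service name
          port:
            number: 80
```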

You can find a more detailed explanation of Ingress, with examples, on the Ingress documentation page.

Here is the Ingress resource (cafe‑ingress.yaml) for the cafe app:
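The following is a version of cafe‑ingress.yaml consistent with the line‑by‑line walkthrough below (line 1 is the apiVersion line; comments are kept at the ends of lines so the numbering is unaffected). It is written against the extensions/v1beta1 Ingress API, which the line numbers below assume. That API was removed in Kubernetes 1.22, so newer clusters need the networking.k8s.io/v1 form.

```yaml
apiVersion: extensions/v1beta1     # removed in Kubernetes 1.22
kind: Ingress
metadata:
  name: cafe-ingress
spec:
  tls:                             # SSL/TLS termination
  - hosts:
    - cafe.example.com
    secretName: cafe-secret        # Secret must exist before this Ingress
  rules:
  - host: cafe.example.com         # virtual server for cafe.example.com
    http:
      paths:
      - path: /tea                 # /tea -> tea-svc, port 80
        backend:
          serviceName: tea-svc
          servicePort: 80
      - path: /coffee              # /coffee -> coffee-svc, port 80
        backend:
          serviceName: coffee-svc
          servicePort: 80
```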

Examining it line by line, we see:

- In line 4 we name the resource cafe‑ingress.

- In lines 6–9 we set up SSL/TLS termination:

  - In line 9 we reference a Secret resource by its name, cafe‑secret. This resource contains the SSL/TLS certificate and key, and it must be deployed before the Ingress resource.

  - In line 8 we apply the certificate and key to our cafe.example.com virtual server.

- In line 11 we define a virtual server with domain name cafe.example.com.

- In lines 13–21 we define two path‑based rules:

  - The rule defined in lines 14–17 instructs the load balancer to distribute the requests with the /tea URI among the containers of the tea service, which is deployed with the name tea‑svc in the cluster.

  - The rule defined in lines 18–21 instructs the load balancer to distribute the requests with the /coffee URI among the containers of the coffee service, which is deployed with the name coffee‑svc in the cluster.

  - Both rules instruct the load balancer to distribute the requests to port 80 of the corresponding service.
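The Secret referenced on line 9 must exist, in the same namespace, before the Ingress resource is created. Here is a minimal sketch of such a Secret, assuming the standard kubernetes.io/tls Secret type; the two data values are placeholders for your own base64‑encoded certificate and private key, so the manifest will not apply as written.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: cafe-secret            # must match secretName in the Ingress resource
type: kubernetes.io/tls        # standard Secret type for a TLS certificate/key pair
data:
  tls.crt: <base64-encoded PEM certificate>   # placeholder, replace before applying
  tls.key: <base64-encoded PEM private key>   # placeholder, replace before applying
```

As an alternative to hand‑encoding the values, kubectl create secret tls cafe-secret with the --cert and --key flags builds the same kind of Secret directly from PEM files.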

For complete instructions on deploying the Ingress and Secret resources in the cluster, see our GitHub repository.
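On Kubernetes 1.19 and later, the same resource can be expressed with the networking.k8s.io/v1 Ingress API; the line numbers in the walkthrough above do not apply to this layout. This is a sketch that assumes the IngressClass for the NGINX controller is named nginx (check your installation) and that prefix matching (pathType: Prefix) is the behavior you want.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: cafe-ingress
spec:
  ingressClassName: nginx          # assumption: IngressClass name for the NGINX controller
  tls:
  - hosts:
    - cafe.example.com
    secretName: cafe-secret        # same Secret as before
  rules:
  - host: cafe.example.com
    http:
      paths:
      - path: /tea
        pathType: Prefix           # required in v1; matches /tea and /tea/...
        backend:
          service:
            name: tea-svc
            port:
              number: 80
      - path: /coffee
        pathType: Prefix
        backend:
          service:
            name: coffee-svc
            port:
              number: 80
```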

Testing the Application

Once we deploy the NGINX Plus Ingress controller, our application, the Ingress resource, and the Secret resource, we can test the app.

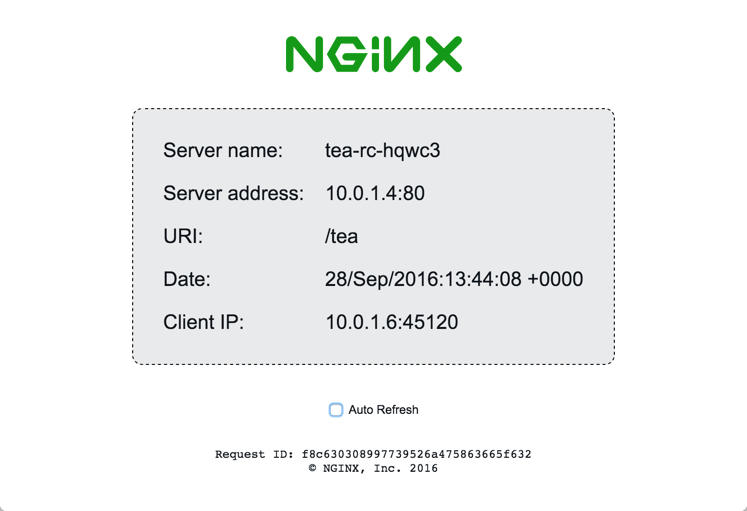

When we make a tea request with the /tea URI, in the browser we see a page generated by the tea service.

We hope you’re not too disappointed that the tea and coffee services don’t actually give you drinks, but rather information about the containers they’re running in and the details of your request. Those include the container hostname and IP address, the request URI, and the client IP address. Each time we refresh the page we get a response from a different container.

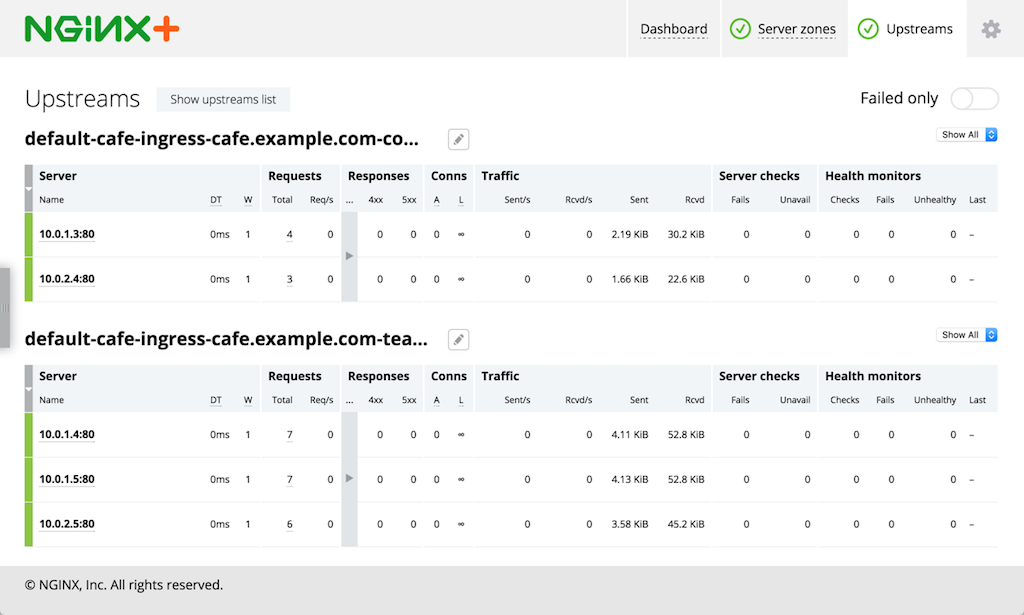

We can also connect to the NGINX Plus live activity monitoring dashboard and see real‑time load balancing metrics from NGINX Plus and each container of our application.

Ingress Controller Extensions

Ingress provides basic HTTP load‑balancing functionality. However, the load‑balancing requirements of your applications are often more complex than Ingress supports. To address some of those requirements, we have added a number of extensions to the Ingress controller. This way you can still configure load balancing through Kubernetes resources (rather than configuring the load balancer directly) while taking advantage of advanced load‑balancing features.

For a complete list of the available extensions, see our GitHub repository.

In addition, we provide a mechanism for customizing the NGINX configuration through Kubernetes resources, using ConfigMap resources or annotations. For example, you can customize the values of directives such as proxy_connect_timeout and proxy_read_timeout.
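A sketch of both mechanisms, assuming the NGINX Ingress controller discussed in this post: ConfigMap keys apply globally to every Ingress the controller handles, while nginx.org/ annotations override them for a single Ingress. The ConfigMap name and namespace (nginx-config in nginx-ingress) are assumptions, so use whichever ConfigMap your controller was started with; the timeout values are illustrative only.

```yaml
# Global settings: applied to every Ingress handled by the controller.
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config             # assumption: the ConfigMap your controller watches
  namespace: nginx-ingress       # assumption: the controller's namespace
data:
  proxy-connect-timeout: "10s"   # sets proxy_connect_timeout
  proxy-read-timeout: "30s"      # sets proxy_read_timeout
---
# Per-Ingress override via annotations (fragment: only metadata is shown,
# the spec is unchanged and omitted here).
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: cafe-ingress
  annotations:
    nginx.org/proxy-connect-timeout: "5s"
    nginx.org/proxy-read-timeout: "60s"
```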

When your load‑balancing requirements go beyond those supported by Ingress and our extensions, we suggest a different approach to deploying and configuring NGINX Plus that doesn’t use the Ingress controller. Read Load Balancing Kubernetes Services with NGINX Plus on our blog to find out more.

Benefits of Using NGINX Plus with the Ingress Controller

With NGINX Plus, the Ingress controller provides the following benefits in addition to those you get with NGINX:

- Stability in a highly dynamic environment – Every time the number of pods of a service exposed via Ingress changes, the Ingress controller needs to update the NGINX or NGINX Plus configuration to reflect the change. With NGINX Open Source, this means rewriting the configuration file and reloading the configuration. With NGINX Plus, the controller can use the dynamic reconfiguration API to update the configuration without a reload. This avoids the increased memory usage and overall system overload that can occur when configuration reloads are very frequent.

- Real‑time statistics – NGINX Plus provides advanced real‑time statistics, which you can access either through an API or on the built‑in dashboard. This can give you insights into how NGINX Plus and your applications are performing.

- Session persistence – When you enable session persistence, NGINX Plus uses the sticky cookie method to ensure that all requests from the same client are passed to the same backend container.
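With the NGINX Plus Ingress controller, session persistence is enabled per service through an annotation on the Ingress resource. This sketch assumes the nginx.com/sticky-cookie-services annotation supported by the NGINX Plus version of the controller; the cookie name srv_id and the expiry values are illustrative, and the remaining parameters follow the NGINX Plus sticky cookie directive.

```yaml
# Fragment: only the Ingress metadata is shown; the spec is unchanged and omitted.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: cafe-ingress
  annotations:
    # One entry per service, separated by semicolons:
    # serviceName=<service> <cookie name> followed by sticky cookie parameters.
    nginx.com/sticky-cookie-services: "serviceName=coffee-svc srv_id expires=1h path=/coffee;serviceName=tea-svc srv_id expires=2h path=/tea"
```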

Summary

Kubernetes provides built‑in HTTP load balancing with Ingress to route external traffic to the services in the cluster. NGINX and NGINX Plus integrate with Kubernetes load balancing, fully supporting Ingress features and providing extensions for more advanced load‑balancing requirements.

To try out NGINX Plus and the Ingress controller, start your free 30-day trial today or contact us to discuss your use cases.