This post is adapted from a presentation by Nick Sullivan of CloudFlare at nginx.conf 2015 in September. You can view a recording of the presentation on the NGINX, Inc. YouTube channel.

0:00 NGINX + HTTPS 101 Overview

As an overview, this is what I’m going to cover in this talk. What is HTTPS? Most people recognize HTTPS from the browser and have a good sense of what it is. I’ll go over the basics again and go into a little more detail about which protocol versions and cipher suites there are. The main point of this talk is how to configure NGINX to serve your site over HTTPS.

Beyond that, there’s the question of using NGINX as an HTTPS proxy: if NGINX sits in front of another application, how do you set it up to act as an HTTPS client?

From there I’ll go into some ways you can check your configuration to see that it is the most secure and the most up‑to‑date, as well as some bonus topics that help you get the A+ you need in security.

1:00 What is HTTPS?

![Section title slide reading "What is HTTPS?" NGINX configuration to keep your website secure [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://www.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide2_https-card.png)

So, as you might guess, HTTPS is HTTP plus S, and S stands for security. In the case of the web, there are two protocols, called SSL and TLS. They’re used more or less interchangeably; I’ll go into that a little later. Either way, [the security protocol] is the security layer that sits on top of your communication. If you think of the OSI model, it sits below Layer 7 in something called Layer 6, the presentation layer.

![HTTPS is HTTP plus SSL or TLS for data encryption and server authentication [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://cdn.wp.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide3_https.png)

What it provides between a client and the server is confidentiality of data. Everything sent from the client to the server and back is encrypted, so the only two parties that can read it are the client and the server. It also provides a form of authentication, so the client can know that the server is exactly who it says it is. Both of these are established in the handshake.

2:02 SSL Handshake (Diffie‑Hellman)

This is your SSL/TLS handshake. It’s a little complicated, with a lot of moving parts, but if you take a step back, it’s essentially an extra one or two round trips between the client and the server to exchange cryptographic information. There are several values involved here, such as the server random and the client random; this is deep stuff you don’t really need to know.

All you need to know is that the server sends a public key, and the client and server establish a shared secret that they use to encrypt the communication. All the communication between the visitor and the server is encrypted with a symmetric key, meaning both parties have the same key. There’s also an integrity key, used here for an HMAC, but I’ll skip over this diagram for now and go to the more salient question: why set up HTTPS?
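To make the key exchange concrete, here is a toy finite‑field Diffie‑Hellman exchange followed by an HMAC integrity check. It is a sketch under loudly stated assumptions: the parameters (p = 23, g = 5) are textbook‑sized and completely insecure, the `derive_keys` helper is a hypothetical stand‑in for the TLS key derivation, and it leaves out the step in which the server signs its share with the key in its certificate.

```python
import hashlib
import hmac
from typing import Tuple

# Toy public parameters: textbook-sized and insecure. Real TLS uses large
# finite-field groups (2048+ bits) or elliptic curves.
P = 23  # prime modulus
G = 5   # generator

def public_share(private: int) -> int:
    """Value each side sends over the wire: G^private mod P."""
    return pow(G, private, P)

def shared_secret(own_private: int, peer_share: int) -> int:
    """Both sides arrive at the same number: peer_share^own_private mod P."""
    return pow(peer_share, own_private, P)

def derive_keys(secret: int) -> Tuple[bytes, bytes]:
    """Hypothetical KDF: hash the secret with labels (TLS uses its own PRF)."""
    raw = secret.to_bytes(32, "big")
    encryption_key = hashlib.sha256(b"encryption" + raw).digest()
    integrity_key = hashlib.sha256(b"integrity" + raw).digest()
    return encryption_key, integrity_key

# Client and server each pick a private value and exchange public shares.
client_private, server_private = 4, 3
client_share = public_share(client_private)  # 4
server_share = public_share(server_private)  # 10

client_secret = shared_secret(client_private, server_share)
server_secret = shared_secret(server_private, client_share)
print(client_secret, server_secret)  # 18 18

# The integrity key authenticates each message with an HMAC tag.
_, mac_key = derive_keys(client_secret)
message = b"GET / HTTP/1.1"
tag = hmac.new(mac_key, message, hashlib.sha256).digest()

# The receiver, holding the same key, recomputes the tag and compares in constant time.
_, receiver_mac_key = derive_keys(server_secret)
expected = hmac.new(receiver_mac_key, message, hashlib.sha256).digest()
print(hmac.compare_digest(tag, expected))  # True
```

An eavesdropper sees only 4 and 10 on the wire; recovering 18 requires solving the discrete logarithm problem, which is trivial here but infeasible with real parameter sizes.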

3:05 Why Set Up HTTPS?

![Reasons to set up HTTPS with NGINX include user privacy, improved SEO rankings, protection of services that can't handle encryption, and as a general good practice [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://cdn.wp.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide5_https-set-up.png)

Well, the main reason is user privacy. For service‑to‑service communication, it’s privacy of information: how much do you trust the networks you’re using to transmit this information? Do you trust these networks not to inject things into your traffic and not to read what gets transmitted? Also, as of recently, if you run a public website, HTTPS provides an SEO advantage: Google ranks sites that support HTTPS more highly than those that do not.

Another thing you can do with HTTPS (this is one of the main use cases for NGINX) is put NGINX in front of services that don’t support HTTPS natively, or don’t support the most modern, up‑to‑date versions of SSL and TLS. What you get with NGINX is state‑of‑the‑art implementations of all the crypto algorithms, which you don’t really need to think about. And in general, HTTPS is good practice.

If someone goes to a site, they like to see that little happy lock icon. In this case this is nginx.com, which has HTTPS enabled as well as HSTS (a feature I’ll talk about later). You can’t really go to the regular HTTP version of the site anymore; the browser knows to always go to HTTPS. Another thing you see here is the name “NGINX, Inc [US]” next to the lock, which shows this is an Extended Validation (EV) certificate: essentially, a certificate that says NGINX is who it says it is. They paid a little extra for that and went through some vetting.

4:51 What Are the Downsides?

![Downsides of configuring HTTPS with NGINX are greater complexity, extra latency because of the handshake, and CPU cost [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://cdn.wp.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide6_https-downsides.png)

But it’s not all roses; there are some downsides. Specifically, there is a little bit of operational complexity. You have to manage certificates and you have to make sure that they continue to be up‑to‑date. You need to have administrators who are trusted to hold onto the private key material.

When you connect to a site over HTTPS, the first connection can be a little slower, especially if you’re not physically close to the server: on top of the TCP handshake there is the SSL handshake, which, as I mentioned, adds at least two round trips. So there is a slight hit to latency, but this can be mitigated by more advanced features built on top of SSL/TLS, such as SPDY and HTTP/2, which I won’t go into.
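A back‑of‑the‑envelope model makes that latency cost concrete. This sketch assumes a TLS 1.2 full handshake costs two round trips and a resumed (abbreviated) handshake one, and it ignores server processing time; the function name is hypothetical.

```python
def time_to_first_byte_ms(rtt_ms: float, tls_round_trips: int = 0) -> float:
    """Rough time until the first response byte arrives, in milliseconds.

    Counts one round trip for the TCP handshake, `tls_round_trips` for the
    TLS handshake, and one for the HTTP request/response itself.
    """
    tcp_handshake = 1
    http_exchange = 1
    return rtt_ms * (tcp_handshake + tls_round_trips + http_exchange)

rtt = 100.0  # e.g. a visitor far away from the server
print(time_to_first_byte_ms(rtt))                     # plain HTTP: 200.0
print(time_to_first_byte_ms(rtt, tls_round_trips=2))  # full TLS 1.2 handshake: 400.0
print(time_to_first_byte_ms(rtt, tls_round_trips=1))  # resumed session: 300.0
```

The penalty scales with distance: a nearby visitor with a 10 ms round‑trip time pays only 20 ms extra for the full handshake.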

In the end, HTTPS can be as fast as HTTP, but sometimes it isn’t. There’s also the cost on your servers of actually doing the crypto; this was cited as a reason not to do HTTPS for a long time, but it’s less and less applicable. The latest generation of Intel servers can do the type of crypto you need for HTTPS very quickly, at almost no cost. So encrypting data in transit is basically free on modern hardware.

6:15 What You Need to Set Up HTTPS

![To set up HTTPS, you need to decide which protocols and ciphers you support, and must obtain a certificate and private key signed by a trusted Certificate Authority in order to achieve website security through NGINX and HTTPS [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://www.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide7_https-need.png)

So if you want to set up HTTPS for your service or website, you need to make a couple of choices and obtain a couple of things. The first [decision] is which protocols you want to support, the second is which ciphers you want to support (I’ll go into what that means), and you also [need to obtain] a certificate and a corresponding private key. This is an important part: the certificate has to be [issued] by a third‑party certificate authority that your clients trust. I’ll go into that a little later, but first let’s talk about protocol versions.

6:52 Protocol Versions

![Section title slide reading "Protocol Versions" that help achieve website security with NGINX through HTTPS [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://www.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide8_protocol-card.png)

So, a bit of history: HTTPS is HTTP plus the S, and the S has been changing; it’s something that’s evolved over time. Originally SSL v1.0 was a protocol invented at Netscape. There is a famous anecdote where Marc Andreessen was presenting it at MIT and someone in the audience broke it, working out with a pencil how to break the cryptographic algorithm.

![Several revisions of SSL v2.0 have been released since its debut in 1995: SSL v3.0 in 1996 with major fixes, TLS v1.0 and v1.1 in 1999 and 2006 with minor tweaks, and TLS v1.2 in 2008 with improved hashes [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://cdn.wp.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide9_protocol-history1.png)

So that one didn’t last very long, and eventually they released SSL v2.0 in 1995. This was essentially the start of the encrypted web. It is what enabled e‑commerce, letting people submit passwords and credit card numbers online and be at least reasonably comfortable doing so.

SSL v3.0 followed very soon, and this was a complete rewrite by Paul Kocher and others. This was a pretty solid protocol. In fact, the IETF took SSL v3.0, mulled it over, and worked it into something that was not Netscape‑specific but meant for a wider audience, called TLS (Transport Layer Security). SSL stands for Secure Sockets Layer, which reflects how people thought about it at the time, but Transport Layer Security is what it’s called now.

It’s a little confusing: TLS v1.0 is essentially the same as SSL v3.0, with just one or two small tweaks that the IETF made to standardize it. If you look deep into the protocol itself, the version number TLS v1.0 uses is actually 3.1, as in SSL v3.1; so you can think of this as a continuum.
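The “SSL v3.1” numbering is visible in the bytes on the wire: SSL v3.0 and every TLS version carry a two‑byte protocol version, major then minor. The small lookup below (its names are illustrative) shows the continuum; SSL v2.0 used a different record format and is left out.

```python
# Two-byte protocol version (major, minor) as carried in SSL/TLS records.
WIRE_VERSIONS = {
    "SSL v3.0": (3, 0),
    "TLS v1.0": (3, 1),  # i.e. "SSL v3.1"
    "TLS v1.1": (3, 2),
    "TLS v1.2": (3, 3),
}

def wire_bytes(name: str) -> bytes:
    """Return the two version bytes for a protocol name from the table."""
    major, minor = WIRE_VERSIONS[name]
    return bytes([major, minor])

for name in WIRE_VERSIONS:
    print(f"{name}: 0x{wire_bytes(name).hex()}")
# SSL v3.0: 0x0300
# TLS v1.0: 0x0301
# TLS v1.1: 0x0302
# TLS v1.2: 0x0303
```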

Eventually the IETF came up with new versions: v1.1 (just a few minor tweaks) and then v1.2, which introduced some new cryptographic algorithms. Looking at this you might think, “OK, well, some clients support these, and some clients support the more modern ones, depending on when they came out.” But in a security sense, most of these have been broken in one significant way or another.

9:08 A Bit of History

![Each version of SSL and TLS up through TLS v1.1 has been broken or weakened by hacker attacks [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://cdn.wp.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide10_protocol-history2.png)

So, SSL v2.0 is really not recommended: it was broken a long time ago and is not cryptographically safe. People kept using SSL v3.0 until just under a year ago, when a cryptographic attack that was essentially ten years old was rediscovered (POODLE) and found to break all of SSL v3.0.

TLS v1.0 and v1.1 are generally safe. And the latest one, v1.2, is the only one that doesn’t have any known attacks against it. TLS has a muddled history and doesn’t really have the best security record, but it’s the best that we have when it comes to interoperability with browsers and with services and it’s built into almost everything. So, TLS v1.2 is the way to go.

10:00 Client Compatibility for TLS v1.2

![Browsers that support the more secure TLS v1.2 for HTTPS account for 75% of Internet traffic [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://www.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide11_protocol-tls12.png)

If you look at the web right now in terms of percentage of visitors, only fairly recent browser versions support v1.2, and it works out to about 75% of traffic. So if you set up your server to support v1.2 only, you’re cutting off roughly a quarter of your audience. That’s not necessarily the best move unless you are super security‑conscious and okay with locking out wide swaths of people who aren’t using, say, Internet Explorer 11 or later.

On the other side of the coin, certain platforms are starting to prefer v1.2. iOS 9 just came out with a feature called App Transport Security, which requires the server to support TLS v1.2. So the industry is moving toward that standard.

11:00 Client Compatibility for TLS v1.0

![TLS v1.0, the oldest protocol that's actually secure, works with everything newer than Windows XP to provide website security through HTTPS with NGINX [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://www.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide12_protocol-tls10.png)

In any case, TLS v1.0 (the oldest version that’s really secure) works with basically everything except Windows XP Service Pack 2. There’s not much you can say about that platform: it’s end‑of‑life, but it’s still used in different parts of the world. You might want to consider supporting SSL v3.0 if you really need to reach this audience, or corporate environments that still run this very old version of Windows.

11:32 Configuration Options

![Each choice of SSL/TLS protocol version involves a trade‑off between security and compatibility with browsers to provide HTTPS [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://www.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide13_protocol-config.png)

So, there’s a site called SSL Labs that can rate your site’s configuration. If you choose TLS v1.2 only, you get an A. If you support only TLS (v1.0 through v1.2), you also get an A. If you go back to supporting SSL v3.0, that’s risky, and you get a C. So this is what you have to weigh when choosing your protocols.
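The same trade‑off appears on the client side. As an illustration using Python’s standard `ssl` module (not NGINX), a client that takes the strict “TLS v1.2 only” position, much like App Transport Security, sets a minimum protocol version; a server that offers only older versions then fails the handshake. This assumes Python 3.7+ linked against OpenSSL 1.1.0g or later, and the function name is hypothetical.

```python
import ssl

def strict_client_context() -> ssl.SSLContext:
    """Client context that refuses anything older than TLS v1.2."""
    # PROTOCOL_TLS_CLIENT turns on certificate and hostname checks by default.
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.load_default_certs()  # trust the platform's CA store
    return ctx

ctx = strict_client_context()
print(ctx.minimum_version.name)  # TLSv1_2
```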

11:58 Cipher Suites

![Section title slide reading "Cipher Suites" that help provide website security through HTTPS and NGINX [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://www.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide14_cipher-card.png)

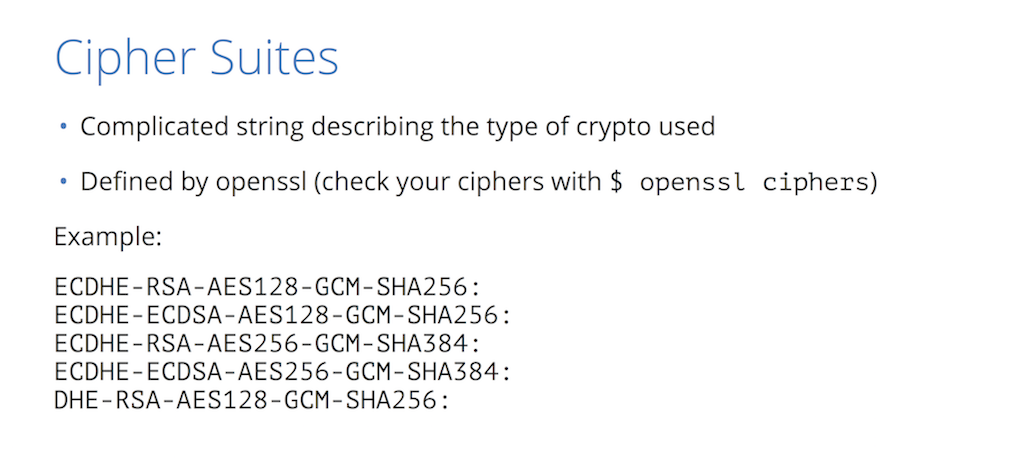

Now let’s go into cipher suites. What is a cipher suite? It’s the set of cryptographic algorithms that SSL and TLS (and therefore HTTPS) use to establish and protect a connection, and the names are really an alphabet soup. What do they mean?

12:19 Cipher Suites Breakdown

![A cipher suite has four parts: the key-exchange algorithm, the type of public key, the cipher used to encrypt data in transfer, and the anti-tampering checksum. A cipher suite is a set of algorithms that together determine the variety of cryptography used for an SSL/TLS connection [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://cdn.wp.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide16_cipher-parts.png)

Essentially, the first term is the key exchange, an algorithm that the two parties use to exchange keys. In this case, it’s based on the Diffie‑Hellman algorithm.

The second term is what type of key is in your certificate. So every certificate has a public key of a certain type. In this case it’s RSA.

The third piece is your transport cipher, the encryption algorithm used to encrypt all the data. There are a lot of options here, but AES‑GCM is the most secure widely supported choice, and modern Intel processors have hardware instructions for it (AES‑NI), so it runs at almost zero cost. So this is a cheap and solid cipher to use.

The last one is integrity. As I mentioned, messages carry a keyed hash (a MAC) to make sure they haven’t been tampered with. With the data both encrypted and integrity‑protected, you’re covered.
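Reading an OpenSSL‑style suite name from left to right gives exactly those parts. The parser below is a hypothetical helper that only handles the common ECDHE/DHE names of the form KEX‑AUTH‑CIPHER‑HASH (names such as ECDHE‑RSA‑CHACHA20‑POLY1305, whose last field is not a hash, are outside its scope). Note that for AEAD ciphers such as AES‑GCM, the trailing hash drives the handshake’s key derivation, while GCM itself provides the integrity protection.

```python
from typing import NamedTuple

class CipherSuite(NamedTuple):
    key_exchange: str    # e.g. ECDHE: ephemeral (elliptic-curve) Diffie-Hellman
    authentication: str  # type of public key in the certificate, e.g. RSA or ECDSA
    bulk_cipher: str     # encrypts the application data, e.g. AES128-GCM
    digest: str          # MAC hash, or PRF hash for AEAD ciphers such as GCM

def parse_suite(name: str) -> CipherSuite:
    """Split an OpenSSL-style TLS 1.2 suite name such as ECDHE-RSA-AES128-GCM-SHA256."""
    parts = name.split("-")
    if len(parts) < 4 or parts[0] not in ("ECDHE", "DHE"):
        raise ValueError(f"unsupported suite name: {name!r}")
    return CipherSuite(
        key_exchange=parts[0],
        authentication=parts[1],
        bulk_cipher="-".join(parts[2:-1]),
        digest=parts[-1],
    )

print(parse_suite("ECDHE-RSA-AES128-GCM-SHA256"))
# CipherSuite(key_exchange='ECDHE', authentication='RSA', bulk_cipher='AES128-GCM', digest='SHA256')
```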

13:17 Server Cipher Suites

![A server decides which SSL/TLS cipher suite to use by comparing its list of supported suite with the list presented by the client, and choosing its preferred option from the intersection [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://cdn.wp.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide17_cipher-server.png)

Now, not all browsers and servers support the same list of ciphers. This is called protocol flexibility, and essentially it works like this: the client says, “Hey, this is everything I support.” The server says, “Okay, of those I know about five. I’ll pick my favorite.”

13:43 Cipher Suite Negotiation

![Slide depicts negotiation of the SSL/TLS cipher suite between client and server to provide website security through HTTPS [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://www.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide18_cipher-negotiation.png)

So, for example, if the client says, “These are the ones I support, in this order,” and the server only supports two of them, the server will just pick its favorite of those two.
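The selection rule can be sketched as a small function. This is a simplified model with hypothetical names (a real server also checks the protocol version, the certificate’s key type, and supported curves): the chosen suite is the first entry in the preferred side’s list that the other side also supports. The `prefer_server` flag corresponds to the choice that NGINX’s `ssl_prefer_server_ciphers` directive controls.

```python
from typing import Optional, Sequence

def negotiate(client_offer: Sequence[str], server_supported: Sequence[str],
              prefer_server: bool = True) -> Optional[str]:
    """Pick a cipher suite both sides support, or None if there is no overlap."""
    if prefer_server:
        ordered, other = server_supported, set(client_offer)
    else:
        ordered, other = client_offer, set(server_supported)
    for suite in ordered:
        if suite in other:
            return suite
    return None  # handshake fails: no common cipher suite

client = ["ECDHE-RSA-AES256-SHA", "ECDHE-RSA-AES128-GCM-SHA256", "AES128-SHA"]
server = ["ECDHE-RSA-AES128-GCM-SHA256", "ECDHE-RSA-AES256-SHA"]

print(negotiate(client, server, prefer_server=False))  # ECDHE-RSA-AES256-SHA
print(negotiate(client, server, prefer_server=True))   # ECDHE-RSA-AES128-GCM-SHA256
```

With server preference, the server steers the connection to the GCM suite even though the client listed an older CBC suite first.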

13:52 Recommended Cipher Suites

![CloudFlare and Mozilla each publish a list of recommended cipher suites to provide website security through HTTPS [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://www.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide19_cipher-recommended.png)

This is not really a big matter of debate, but there are a lot of options out there. CloudFlare uses this cipher suite list, and you can find it here. We publish it in NGINX configuration format, and these are the ones we recommend; they’re what all sites on CloudFlare end up using.

There’s a cool new cipher called ChaCha20. It’s not supported in mainline NGINX yet, but we’re pushing for it; everything else here you can use. Mozilla also has its own recommendations, and you can go to their Server Side TLS site, which will generate an SSL configuration for NGINX or whatever web server you might be using. So that’s cipher suites.

14:52 Certificates

![Section title slide reading "Certificates" that authorize websites to provide HTTPS with NGINX for website security [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://www.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide20_cert-card.png)

Now that we have the protocols and cipher suites set, on to certificates. This is the most important part of HTTPS: it’s what identifies your site to your customers. But what’s in a certificate?

15:03 What Is a Certificate?

![A security certificate includes the organization's name, a public key, the issuer name, the validity period, and the issuer's digital signature, all to help provide website security through HTTPS [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://www.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide21_cert.png)

There’s your name (who you are), which domain names your site is valid for, when the certificate is valid, the public key (which the customer can use to verify anything you sign with the corresponding private key), and then a digital signature. The digital signature is a stamp from a public certificate authority that says this is a real certificate: the holder actually owns this DNS name, and the certificate is valid between these dates.
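The validity period is the field that most often causes trouble in operations. Python’s `ssl` module reports certificate dates in the format shown below (for example, the `notAfter` entry returned by `SSLSocket.getpeercert()`), and `ssl.cert_time_to_seconds()` converts them to a Unix timestamp. The `days_until_expiry` helper is a hypothetical sketch of a renewal check.

```python
import ssl
import time
from typing import Optional

def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Days left before a certificate's notAfter date (negative if expired)."""
    expires = ssl.cert_time_to_seconds(not_after)
    current = time.time() if now is None else now
    return (expires - current) / 86400

now = ssl.cert_time_to_seconds("Dec 11 00:00:00 2015 GMT")
print(days_until_expiry("Jan 10 00:00:00 2016 GMT", now=now))  # 30.0
```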

![A graphic depiction of a certificate's contents: A security certificate includes the organization's name, a public key, the issuer name, the validity period, and the issuer's digital signature, all to provide website security through HTTPS [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://www.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide22_cert-anatomy.png)

15:38 What Is a Trusted Certificate?

![A trusted security certificate is one issued by a certificate authority (CA) that browsers trust, such as Symantec, for website security through HTTPS [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://www.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide23_cert-trusted.png)

So what makes a certificate trusted? Well, this is a breakdown of the certificate authorities (CAs) that sign most certificates on the internet right now. There are some common names in here. Symantec has bought several of these certificate authorities, including GeoTrust, VeriSign, and others.

These are big companies that are trusted and, most importantly, their certificates are trusted by browsers. So if Symantec vouches for a certificate (because someone bought it from them and went through their process), browsers will show the green lock.

16:24 How Do I Get a Certificate?

![To obtain a security certificate, create a private key and a certificate signing request (CSR) and present them to a CA, which usually charges a fee to provide website security through HTTPS [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://www.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide24_cert-get.png)

You get a certificate by creating a key pair and then sending your public key to the certificate authority to rubber‑stamp and turn into a certificate. This usually costs a bit of money (there are free ways to do it). The private key has to stay private: the only entities that should ever get hold of it are your administrator and your web server itself.

16:55 How Do I Create a CSR and Private Key?

![You can use cfssl or openssl commands to create the certificate signing request (CSR) and private key you present to the CA for website security through HTTPS [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://www.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide25_cert-create-csr.png)

There are several ways to create these key pairs. At CloudFlare we built a tool called CFSSL, which generates these keys and a CSR (certificate signing request). A CSR is essentially how you package your public key so the certificate authority can create a certificate from it. OpenSSL, CFSSL, and several other tools can do this.

17:18 How to Get a Free Certificate

![You can get a free security certificate at StartSSL.com to provide website security through HTTPS [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://www.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide26_cert-free.png)

If you want a free one, the best‑known site right now is StartSSL.com. They will give you a free certificate for your site, valid for a year. It looks like getting a certificate will become less costly as time goes on; there are proposals for free certificate authorities. So right now, you can pay for a nice experience with Comodo, DigiCert, and so on, or you can go the free route.

18:02 Certificate Chain

![Most certificates are actually signed by an 'intermediate CA' in a 'certificate chain of trust' up to top-level CAs [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://cdn.wp.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide27_cert-chain.png)

Certificates don’t usually get signed directly by the root certificate authority; a chain of trust gets built up instead. If you have a certificate, it’s usually signed by an intermediate certificate authority, and that intermediate certificate authority is signed by the root certificate authority.

So in this case, you can think of it this way (this is CloudFlare, but imagine it’s a certificate authority): you get a certificate, and you have this whole chain of certificates that you present. Browsers don’t necessarily know what the next certificate in the chain is, because they really only ship with the certificates at the top (the offline root certificates). So when you get a certificate, you also need to serve the whole chain of trust along with it.
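Why the intermediate matters can be shown with a toy path builder. It is a deliberately simplified model with hypothetical names: certificates are reduced to subject/issuer pairs and no signatures are checked. The client knows only its root store, so it can walk from the leaf to a root only if the server supplies every link in between.

```python
from typing import Dict, List, Optional, Set

def build_chain(leaf: str, presented: Dict[str, str],
                trusted_roots: Set[str]) -> Optional[List[str]]:
    """Follow issuer links from the leaf until a trusted root is reached.

    `presented` maps each certificate the server sent (by subject) to its issuer.
    Returns the path, or None if a link is missing. Signatures are NOT checked.
    """
    chain = [leaf]
    current = leaf
    while current not in trusted_roots:
        issuer = presented.get(current)
        if issuer is None or issuer in chain:
            return None  # missing intermediate (or a loop): cannot reach a root
        chain.append(issuer)
        current = issuer
    return chain

roots = {"Example Root CA"}
full_chain = {
    "www.example.com": "Example Intermediate CA",
    "Example Intermediate CA": "Example Root CA",
}
leaf_only = {"www.example.com": "Example Intermediate CA"}

print(build_chain("www.example.com", full_chain, roots))
# ['www.example.com', 'Example Intermediate CA', 'Example Root CA']
print(build_chain("www.example.com", leaf_only, roots))  # None
```

Some browsers paper over a missing intermediate by caching or fetching it, which is why an incomplete chain can appear to work in one browser and fail in another.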

There’s a tool we built called CFSSL Bundle that allows you to create this if your CA doesn’t give it to you. Typically your CA will give you this chain.

19:01 Configuring NGINX

![Slide reading "Configuring NGINX" to provide website security through HTTPS [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://www.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide28_nginx-card.png)

So now the good part. How do you take these options and configure NGINX?

19:08 NGINX Configuration Parameters

![The NGINX directives for basic security configuration are ssl_certificate, ssl_certificate_key, ssl_protocols, and ssl_ciphers - all that help provide website security through HTTPS [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://www.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide29_nginx-config.png)

There are some basic primitives [directives] here that you can use: ssl_certificate, ssl_certificate_key, ssl_protocols, and ssl_ciphers.

19:19 NGINX Configuration Parameters (OpenSSL)

![Before configuring NGINX for SSL/TLS, run the 'openssl version' command to determine the version of OpenSSL you are using for website security through HTTPS [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://www.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide30_nginx-openssl.png)

Before you start: NGINX does TLS with OpenSSL, a library I’m sure you’ve heard about in the news. It became famous for Heartbleed and several other vulnerabilities. It really is the most widely used crypto library, and it’s what NGINX uses for all its crypto.

So one thing to do on your server is check which version of OpenSSL you’re using. You probably don’t want one that’s, for example, [version] 0.9.8. Something like 1.0.1p or in the 1.0.2 range is where you want to be, because a lot of bugs have been fixed over the years. You never know when the next OpenSSL bug drops, but at least right now 1.0.1p is pretty solid, and it has all the modern crypto.
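That check can be scripted. The helper below is a hypothetical sketch that parses an OpenSSL version string, such as the output of `openssl version` or the “built with OpenSSL …” line printed by `nginx -V`, and compares it to a minimum, treating the letter suffix as a patch level. The 1.0.1p threshold simply mirrors the talk.

```python
import re
from typing import Tuple

def openssl_version_key(text: str) -> Tuple[int, int, int, Tuple[int, str]]:
    """Parse e.g. 'OpenSSL 1.0.1p 9 Jul 2015' into a comparable key."""
    match = re.search(r"OpenSSL (\d+)\.(\d+)\.(\d+)([a-z]*)", text)
    if match is None:
        raise ValueError(f"no OpenSSL version found in {text!r}")
    major, minor, fix, letters = match.groups()
    # Letter suffixes run a..z, then za, zb, ...: order by length, then alphabetically.
    return int(major), int(minor), int(fix), (len(letters), letters)

def is_recent_enough(text: str, minimum: str = "OpenSSL 1.0.1p") -> bool:
    """True if the version in `text` is at least `minimum`."""
    return openssl_version_key(text) >= openssl_version_key(minimum)

print(is_recent_enough("OpenSSL 0.9.8zh 3 Dec 2015"))            # False
print(is_recent_enough("OpenSSL 1.0.1e-fips 11 Feb 2013"))       # False
print(is_recent_enough("built with OpenSSL 1.0.2d 9 Jul 2015"))  # True
```

Keep in mind that the OpenSSL command‑line tool on a server is not necessarily the library NGINX was built against, so `nginx -V` is the more reliable source.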

20:19 Certificate Chain and Private Key with NGINX

![Include the ssl_certificate and ssl_certificate_key directives to name the SSL/TLS certificate chain and private key to provide website security through HTTPS [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://www.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide31_nginx-directives.png)

So, when you set up your server section in NGINX, ssl_certificate points to your chain of certificates: your certificate plus the rest of the chain of trust up toward the root (the root itself can be left out, since clients already have it). Then ssl_certificate_key points to your private key.
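If your CA hands you the certificate and intermediates as separate files, the file for ssl_certificate is just their concatenation, server certificate first (the shell equivalent is `cat www.example.com.crt intermediate.crt > www.example.com.chained.crt`). The Python sketch below assumes PEM‑encoded inputs; the function and file names are hypothetical.

```python
from pathlib import Path
from typing import Sequence

PEM_BEGIN = "-----BEGIN CERTIFICATE-----"

def write_chain(server_cert: Path, intermediates: Sequence[Path], out: Path) -> int:
    """Concatenate PEM certificates, server certificate first, into one file.

    Order matters: NGINX treats the first certificate in the ssl_certificate
    file as the server certificate and the rest as the chain.
    Returns the number of certificates written.
    """
    parts = []
    for path in [server_cert, *intermediates]:
        text = path.read_text().strip()
        if not text.startswith(PEM_BEGIN):
            raise ValueError(f"{path} does not look like a PEM certificate")
        parts.append(text)
    out.write_text("\n".join(parts) + "\n")
    return sum(part.count(PEM_BEGIN) for part in parts)
```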

20:40 Extra Options

![To configure SSL/TLS session caching, include the ssl_session_timeout and ssl_session_cache directives to help provide website security through HTTPS [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://www.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide32_nginx-extras.png)

There are also some extra options you can add for session resumption. As I mentioned before, when you first establish a TLS connection there are two extra round trips, because you have to do an entire handshake and exchange certificates. If a client has connected before and both sides have cached the key used for that session, the client can just resume the session. This feature is called session resumption.

You just need a timeout to say how long to keep sessions on your side, and a size for the session cache. In this example the cache is 50 MB, which should last you a long time. A shared cache is preferred because it’s shared among all your NGINX workers.
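The NGINX documentation for ssl_session_cache estimates that one megabyte of shared cache holds about 4,000 sessions, so cache sizing is simple arithmetic. The helper names are hypothetical and the figure is approximate.

```python
SESSIONS_PER_MB = 4000  # approximate figure from the NGINX ssl_session_cache docs

def cache_capacity(size_mb: int) -> int:
    """Approximate number of TLS sessions a shared cache of size_mb can hold."""
    return size_mb * SESSIONS_PER_MB

def cache_size_needed(new_sessions_per_second: float, timeout_seconds: int) -> float:
    """Megabytes needed to keep every new session for the whole timeout window."""
    return new_sessions_per_second * timeout_seconds / SESSIONS_PER_MB

print(cache_capacity(50))            # 200000 sessions in a 50 MB cache
print(cache_size_needed(100, 3600))  # 90.0 MB for 100 new sessions/s kept for 1 hour
```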

For example, if one of your workers was the one that originally made the connection and a second connection gets made to a different NGINX worker, you can still resume the connection. There’s also another option called session tickets. It’s only used in Chrome and Firefox, but essentially does the same thing. You have to generate a random 48‑byte file, but I would recommend sticking just with session caching for now.
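Generating that key file only takes a source of cryptographic randomness. This sketch writes 48 random bytes with owner‑only permissions (assuming a POSIX system); the function and file names are hypothetical, and the file would then be referenced from NGINX’s ssl_session_ticket_key directive. Newer NGINX versions also accept 80‑byte keys. Anyone holding this key can decrypt session tickets, so treat it like a private key.

```python
import os
from pathlib import Path

TICKET_KEY_BYTES = 48  # size described in the talk; newer NGINX also accepts 80

def write_ticket_key(path: Path, size: int = TICKET_KEY_BYTES) -> None:
    """Write `size` cryptographically random bytes, readable only by the owner."""
    # O_EXCL refuses to overwrite an existing key by accident.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_EXCL, 0o600)
    with os.fdopen(fd, "wb") as f:
        f.write(os.urandom(size))
```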

22:14 Protocols and Ciphers with NGINX

![To specify the SSL/TLS protocols and ciphers that the NGINX server supports, include the ssl_protocols and ssl_ciphers directives [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://cdn.wp.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide33_nginx-directives2.png)

As a pretty obvious next step, you list the protocols and ciphers you want to support. In this case, these are CloudFlare’s recommended ciphers, and the TLS protocol versions from v1.0 up to v1.2.
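NGINX hands the ssl_ciphers string to OpenSSL’s cipher‑list parser, so it can help to check a candidate list against the OpenSSL on your machine before deploying it. Python’s `SSLContext.set_ciphers()` passes its string to the same parser, so the sketch below (helper name hypothetical) is a rough stand‑in for running `openssl ciphers -v` on the string. Python may be linked against a different OpenSSL build than NGINX, so treat the result as a hint.

```python
import ssl
from typing import List

def expand_cipher_list(cipher_list: str) -> List[str]:
    """Return the TLS 1.2-and-earlier suites OpenSSL enables for a cipher string.

    Raises ssl.SSLError if the string selects no cipher at all.
    """
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.set_ciphers(cipher_list)
    # get_ciphers() also lists TLS 1.3 suites (named TLS_...), which
    # set_ciphers() does not control; filter them out.
    return [c["name"] for c in ctx.get_ciphers() if not c["name"].startswith("TLS_")]

print(expand_cipher_list("ECDHE-RSA-AES128-GCM-SHA256:ECDHE-RSA-AES256-GCM-SHA384"))
```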

22:32 Additional Fields

![To specify that the NGINX server's preferred cipher is used rather than the client's, include the ssl_prefer_server_ciphers directive [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://cdn.wp.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide34_nginx-fields.png)

I mentioned how the cipher gets negotiated: you can prefer either the client’s choice or the server’s choice. It’s always better to prefer the server’s choice, so there’s a directive for that, ssl_prefer_server_ciphers; always turn it on.
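Under the hood, `ssl_prefer_server_ciphers on` sets OpenSSL’s SSL_OP_CIPHER_SERVER_PREFERENCE option. Python’s `ssl` module exposes the same flag, which makes for a quick illustration (a Python server context, not NGINX itself). Python already sets this flag on new contexts by default; the sketch sets it explicitly for clarity, and the function name is hypothetical.

```python
import ssl

def server_context(cipher_list: str) -> ssl.SSLContext:
    """Server context that picks ciphers by its own preference order."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.set_ciphers(cipher_list)  # the order here is the server's preference
    ctx.options |= ssl.OP_CIPHER_SERVER_PREFERENCE
    # A real server would also call ctx.load_cert_chain(chain_file, key_file).
    return ctx

ctx = server_context("ECDHE-RSA-AES128-GCM-SHA256:ECDHE-RSA-AES256-GCM-SHA384")
print(bool(ctx.options & ssl.OP_CIPHER_SERVER_PREFERENCE))  # True
```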

22:55 Multiple Domains, Same Certificate

![It is possible to use the same certificate for multiple domains, such as both example.com and example.org [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://cdn.wp.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide35_nginx-multiple-domains.png)

If you have multiple sites that use the same certificate, you can split up your http block: put the SSL certificate directives at the top (http) level and the individual server blocks below. The one thing to keep in mind is that if you have example.com and example.org, you need one certificate that’s valid for both names for this to work. That’s basically it for setting up NGINX for HTTPS.
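A small generator can make that layout, and the “one certificate valid for every name” requirement, explicit. This sketch is hypothetical throughout (function names, file paths, and the list of certificate names you pass in): it emits an NGINX http block with the shared ssl_* directives at the top level and one server block per name, and refuses if a name is not covered by the certificate. Wildcard matching follows the usual rule that `*` stands for exactly one left‑most label.

```python
from typing import Sequence

def name_covered(hostname: str, cert_names: Sequence[str]) -> bool:
    """True if hostname matches a certificate name (wildcard = one left-most label)."""
    host = hostname.lower()
    for pattern in (n.lower() for n in cert_names):
        if pattern.startswith("*."):
            first, _, rest = host.partition(".")
            if first and rest == pattern[2:]:
                return True
        elif host == pattern:
            return True
    return False

def render_shared_cert_config(server_names: Sequence[str], cert_names: Sequence[str],
                              chain_file: str, key_file: str) -> str:
    """Render an http block whose servers all share one certificate."""
    uncovered = [n for n in server_names if not name_covered(n, cert_names)]
    if uncovered:
        raise ValueError(f"certificate does not cover: {', '.join(uncovered)}")
    lines = [
        "http {",
        f"    ssl_certificate     {chain_file};",
        f"    ssl_certificate_key {key_file};",
        "    ssl_protocols TLSv1 TLSv1.1 TLSv1.2;  # as in the talk",
        "    ssl_prefer_server_ciphers on;",
        "",
    ]
    for name in server_names:
        lines += [
            "    server {",
            "        listen 443 ssl;",
            f"        server_name {name};",
            "    }",
        ]
    lines.append("}")
    return "\n".join(lines) + "\n"

print(render_shared_cert_config(
    ["example.com", "example.org"],
    cert_names=["example.com", "example.org"],       # names on the shared certificate
    chain_file="/etc/nginx/ssl/shared.chained.crt",  # hypothetical paths
    key_file="/etc/nginx/ssl/shared.key",
))
```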

23:26 Backend HTTPS

![Section title slide reading 'Backend HTTPS' [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://cdn.wp.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide36_backend-card.png)

The more advanced topic is: how do you use NGINX as a proxy in front of other HTTPS services?

23:37 Encryption on the Backend

![Backend encryption means using SSL/TLS to protect traffic between NGINX and backend servers [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://cdn.wp.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide37_backend-encryption.png)

We call this backend encryption. Your visitor’s connection to your NGINX server is fully encrypted, but what happens behind NGINX? In this case, NGINX has to act as the browser to whatever your backend service is.

23:56 NGINX Backend Configuration

![To configure SSL/TLS for traffic between NGINX and backend servers, include proxy_ssl_* directives [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://cdn.wp.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide38_backend-directives.png)

This can be configured in NGINX in a very similar way. There are directives similar to ssl_protocols and ssl_ciphers, prefixed with proxy_: proxy_ssl_protocols and proxy_ssl_ciphers are the ones NGINX uses when it acts as a client.

I would recommend using the exact same set of ciphers and same set of protocols. The main difference here is that the client authenticates the server. So, in the case of a browser, you have a bundle of certificate authorities that you trust, and as NGINX (the client) you also need to have the set of certificate authorities that you trust.

24:46 Options for Trusted CAs

![For backend encryption, you can choose to act as your own CA (which means managing a public key infrastructure), or use a regular trusted CA to provide website security through HTTPS [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://www.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide39_backend-trusted-ca.png)

There are two different philosophies you can use to approach this. One is to create your own internal certificate authority and manage it in‑house. This is a little trickier, but it is cheaper and easier to manage, because you can issue a certificate for any of your services from a certificate authority that you own and have full control over. In that case, proxy_ssl_trusted_certificate would be set to your CA’s certificate.

Alternatively, you can use the same approach I described for NGINX: buy certificates for all of your services, and then, for NGINX to trust them, have it trust the same set of certificate authorities that browsers trust.
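The two trust models map directly onto how a TLS client is configured. As an analogy in Python’s `ssl` module (not NGINX configuration), the sketch below builds a verifying client context that trusts either a private CA file, much like pointing proxy_ssl_trusted_certificate at your internal CA, or the platform’s public CA store. On the NGINX side, note that upstream certificates are not verified at all unless proxy_ssl_verify is turned on; it is off by default. The function name and any file path you pass are hypothetical.

```python
import ssl
from typing import Optional

def backend_client_context(internal_ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Client context for talking to backends over HTTPS.

    internal_ca_file: PEM file of your own CA (internal-CA model), or None to
    trust the same public CAs that browsers do.
    """
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)  # verifies certificate and hostname
    if internal_ca_file is not None:
        ctx.load_verify_locations(cafile=internal_ca_file)  # trust only your CA
    else:
        ctx.load_default_certs()  # platform CA store via OpenSSL's default paths
    return ctx

ctx = backend_client_context()
print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)  # True True
```

With the internal‑CA model, the context trusts only certificates you issued, which is what you want between your own services, but it also means the same context cannot reach public sites.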

On Ubuntu, there’s a file on disk that holds all of these trusted CA certificates, and basically every platform has an equivalent. But if you are building a large set of services that need to talk to each other, it is hard to get certificates issued for all of their domains: you have to prove ownership of each domain to the certificate authority to actually get the certificate.
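If you buy public certificates for your backends instead, NGINX can reuse the CA list the operating system already trusts. On Ubuntu and Debian that bundle is `/etc/ssl/certs/ca-certificates.crt` (maintained by the `ca-certificates` package); other distributions keep it elsewhere. A sketch, with a hypothetical backend hostname:

```nginx
location /api/ {
    proxy_pass https://api.example.com;   # hypothetical backend with a publicly issued certificate

    proxy_ssl_verify              on;
    # System CA bundle on Ubuntu/Debian: the same public CAs browsers trust.
    proxy_ssl_trusted_certificate /etc/ssl/certs/ca-certificates.crt;
    # The default depth is 1; public chains usually include an intermediate.
    proxy_ssl_verify_depth        2;
    proxy_ssl_server_name         on;     # send SNI in case the backend hosts several names
}
```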

I recommend the internal CA mechanism. The tough part is: how do you keep the certificate authority safe, and in particular its private key? You can keep it on an offline computer looked after by a special administrator, but either way there are some challenges.

26:33 Checking your Configuration

![Section title slide reading "Checking your configuration" to help provide website security through HTTPS [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://www.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide40_check-card.png)

So you have NGINX set up with HTTPS. How do you check that it’s configured correctly?

26:39 SSL Labs

One of the favorite tools for checking websites is SSL Labs. SSL Labs is a site run by Qualys: you type in your domain, it runs a full suite of tests covering every type of browser and every type of SSL connection, and it tells you what you have set up correctly and what you have not.

In this example, we checked a site called badSSL.com, which enumerates all the different ways you can mess up your HTTPS configuration. You can scan each one of those with SSL Labs and it’ll tell you what’s wrong with each one. Here, the grade given was C because the site supports SSL v3.0.
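The fix for the specific problem flagged here is to stop offering SSL v3.0. A minimal sketch, assuming all of your clients can speak TLS; exactly which TLS versions to keep is a judgment call, and the list below reflects the versions available at the time of this talk (up to TLS v1.2).

```nginx
server {
    listen 443 ssl;
    # ... ssl_certificate / ssl_certificate_key ...

    # SSLv3 is deliberately absent, so NGINX refuses SSL v3.0 handshakes.
    ssl_protocols TLSv1 TLSv1.1 TLSv1.2;
}
```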

There are also several other things it flags that you can fix, but if you set up NGINX the way I described in this talk, you’re basically going to get an A. That means your certificate, protocol support, key exchange, and cipher strength are all top notch.

27:58 CFSSL Scan

This works great for public websites. For services that are behind a firewall or behind NGINX, we built a tool called CFSSL scan that you can use inside your internal infrastructure; it’s open source and it’s on GitHub. It does essentially the same thing SSL Labs does, but inside your infrastructure, and tells you what’s right and what’s wrong with your configuration.

28:32 Bonus: Configuring HSTS

![Section title slide reading "Bonus: Configuring HSTS" to help provide website security with your NGINX configuration [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://www.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide43_hsts-card.png)

So this is how you get an A, but what about an A+? It turns out that SSL Labs does give an A+ every once in a while, and that’s when you have a feature called HSTS (HTTP Strict Transport Security) enabled.

28:49 What is HSTS?

![HTTP Strict Transport Security (HSTS) is a server-side configuration that tells clients to access your site only via HTTPS [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://cdn.wp.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide44_hsts.png)

Essentially, it’s an HTTP header you add to your responses that tells the browser to always reach this site over HTTPS. Even if the user tries to reach it over plain HTTP, the browser goes to HTTPS instead.
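Browsers only honor the header when it arrives over HTTPS (RFC 6797 tells them to ignore it on plain HTTP), so a first‑time visitor who types an http:// URL still needs a redirect. A minimal sketch pairing the two, assuming a hypothetical `example.com` and a one‑year max‑age (365 × 86,400 = 31,536,000 seconds):

```nginx
# Plain-HTTP listener: send every request to the HTTPS site.
server {
    listen 80;
    server_name example.com;              # hypothetical domain
    return 301 https://$host$request_uri;
}

server {
    listen 443 ssl;
    server_name example.com;
    # ... ssl_certificate / ssl_certificate_key ...

    # Tell browsers to use HTTPS for this host for the next year (31536000 s).
    add_header Strict-Transport-Security "max-age=31536000" always;
}
```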

However, it’s actually a little bit dangerous: if your SSL configuration breaks or a certificate expires, there’s no way for visitors to fall back to the plain HTTP version of your site. There are also some more advanced things you can do, such as adding your site to a preload list. Both Chrome and Firefox have a list baked into the browser, so if you sign up for it, they will never ever access your site over HTTP.

29:51 Why?

![The main reason to configure HSTS is to prevent hijacking of connections, which plain HTTP is vulnerable to [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://cdn.wp.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide45_hsts-why.png)

This will give you an A+ on SSL Labs if everything else is correct. You need to have HSTS set correctly with includeSubDomains (which means it applies to all subdomains), and it has to have an expiration period of at least six months, which makes it very risky: if you change your configuration, browsers are going to remember this for six months. So you really have to keep your HTTPS configuration working.

The reason this is a good thing is that it prevents anyone in the middle of the connection from tampering with it. With HSTS, the browser never even tries the HTTP version of your site, so attackers can’t interfere with your site that way. So HSTS is a pretty solid thing to do.

30:56 Risks

![Because HSTS prevents access to your site via plain HTTP, if your HTTPS configuration is broken your site becomes unreachable [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://cdn.wp.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide46_hsts-risks.png)

As I mentioned, there are several risks.

31:01 HSTS Configuration with NGINX

To set it up, just add a header named Strict-Transport-Security to your NGINX server configuration and give it a max-age. In this case, it is set to six months (that’s the minimum you need for the preload list). You can also add other directives here, such as includeSubDomains and preload; preload means it’s acceptable to take your site and add it to a preload list. So that’s how you get the A+.
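A sketch of that header with both optional tokens. max‑age is given in seconds, and the value commonly used for "six months" is 182.5 days × 86,400 s/day = 15,768,000 s. The `always` parameter (NGINX 1.7.5 and later) makes NGINX send the header on error responses as well. One caveat worth knowing: `add_header` directives are inherited from the enclosing level only if the current level defines none, so a `location` with its own `add_header` has to repeat this one.

```nginx
server {
    listen 443 ssl;
    # ... ssl_certificate / ssl_certificate_key ...

    # max-age=15768000 s is six months (182.5 days); includeSubDomains covers every
    # subdomain, and preload signals consent to inclusion in browser preload lists.
    add_header Strict-Transport-Security "max-age=15768000; includeSubDomains; preload" always;
}
```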

31:31 Double Bonus: Configuring OCSP Stapling

![Section title slide reading 'Double Bonus: Configuring OCSP Stapling' [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://cdn.wp.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide48_ocsp-card.png)

Here’s another bonus feature that some people like to use, and it can actually help speed up the connection.

31:41 What is OCSP Stapling?

![The Online Certificate Status Protocol (OCSP) is a mechanism for informing a browser that a certificate is revoked; OCSP stapling means the server provides the saved OCSP response [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://cdn.wp.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide49_ocsp.png)

As I mentioned before, there are quite a few back‑and‑forths needed to set up a TLS connection. What I didn’t mention is that certificates can go bad not only by expiring; they can also be revoked.

So, if you lose track of your private key, there’s a breach, or someone has managed to get hold of your private key, then you have to go to your certificate authority and revoke the certificate. There are several mechanisms for telling a browser that a certificate is revoked; they’re all a little sketchy, but the most popular one is OCSP (Online Certificate Status Protocol).

What happens is: when the browser receives a certificate, it also has to check whether it’s been revoked. So it contacts the certificate authority and asks, “Hey, is this certificate still good?”, and the CA answers “yes” or “no”. This in itself requires another set of connections: you have to look up the CA’s DNS, you have to connect to the CA, and it’s an additional slowdown for your site.

So not only does HTTPS take three round trips, you also have to get the OCSP response. OCSP stapling lets the server grab this proof that the certificate hasn’t been revoked on your behalf: in the background, it fetches the OCSP response that says, “Yes, the certificate is good”, and then puts it inside the handshake. Then the client doesn’t have to reach out to the CA to get it.

33:17 How Much Faster?

![OCSP stapling makes establishing the connection to a site 30% faster for clients [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://cdn.wp.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide50_ocsp-faster.png)

This can make connecting to a site around 30% faster.

33:34 OCSP Stapling Configuration with NGINX

![To configure OCSP stapling for NGINX, include ssl_stapling_* directives [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://cdn.wp.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide51_ocsp-directives.png)

This is pretty easy to set up with NGINX as well. The ssl_stapling directive turns on OCSP stapling, and ssl_stapling_verify means that NGINX verifies the OCSP response it gets from the CA before stapling it. As I mentioned before with the proxy, you have to trust the CA: you can just get a file from your CA and point NGINX at it with the ssl_trusted_certificate directive.
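A minimal sketch of the stapling directives. The certificate and CA‑chain paths are hypothetical, and the resolver address assumes a local caching DNS resolver: NGINX has to resolve the OCSP responder’s hostname itself, so stapling needs a `resolver` directive. With `ssl_stapling_verify on`, the file named by `ssl_trusted_certificate` should contain your CA’s root and intermediate certificates.

```nginx
server {
    listen 443 ssl;
    ssl_certificate     /etc/nginx/ssl/example.com.crt;   # hypothetical paths
    ssl_certificate_key /etc/nginx/ssl/example.com.key;

    # Fetch the OCSP response in the background and include it in the handshake.
    ssl_stapling        on;
    # Check the OCSP response's signature before stapling it.
    ssl_stapling_verify on;
    # Root and intermediate certificates of your CA, used for that check.
    ssl_trusted_certificate /etc/nginx/ssl/ca-chain.pem;

    # NGINX resolves the OCSP responder's hostname itself; assumes a local resolver.
    resolver         127.0.0.1 valid=300s;
    resolver_timeout 5s;
}
```

NGINX fetches the OCSP response lazily, so the very first handshakes after a restart may go out without a stapled response; clients then fall back to asking the CA themselves.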

34:00 Questions?

So that brings us basically to the end of this session. This is how you configure NGINX for HTTPS, along with OCSP stapling, HSTS, and SSL proxying. So, any questions?

Q1: Do you have a link to your slides?

I will put the slides online and provide a link. Follow my Twitter handle here; within a few days I’ll post these slides. Yeah, I realize there’s a bunch of directives on the slides you probably want to copy down, and that taking a photo is not the most accurate or efficient way of doing so. Any other questions?

Q2: Is this how it’s gonna be, or is it gonna be changing over time?

That’s a really good question because, as I mentioned, TLS v1.2 is the latest and greatest, and it dates from 2008. They’re coming out with a new version, TLS v1.3, probably within the next year, so this advice applies to right now.

HTTPS is a changing landscape: threats that we didn’t know about two years ago have completely changed how you would configure something now compared with two years ago. So I would expect this to change going forward, and people setting up HTTPS should stay aware of changes in the industry. If there are big attacks, learn what the new best practices are. So it’s constantly changing, but for now this is the way to go. Okay, thank you very much.

![To establish secured communication, the visitor to a website and NGINX perform an SSL handshake, with details shown here for Diffie‑Hellman encryption [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://cdn.wp.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide4_ssl-handshake.png)

![You can get a rating of your site's security (based on your certificate, protocol, key-exchange algorithm, and cipher) at ssllabs.com [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://cdn.wp.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide41_check-ssllabs.png)

![You can get a rating of the security inside your infrastructure from CloudFlare, at cfssl.org/scan [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://cdn.wp.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide42_check-cfssl.png)

![To set the duration that HSTS applies, include the max-age parameter to the 'add_header Strict-Transport-Security' directive [presentation by Nick Sullivan of CloudFlare at nginx.conf 2015]](https://cdn.wp.nginx.com/wp-content/uploads/2016/08/Sullivan-conf2015-slide47_hsts-max-age.png)