With the increased availability of dedicated cloud storage, such as Amazon S3, websites are moving assets to the cloud. The benefits are clear: near‑infinite scalability, usage‑based costs, and the simplicity of storage‑as‑a‑service. But cloud storage also raises some important questions. How do you apply access control to assets stored on one service when your application runs elsewhere, on a compute cloud or in a local, on‑premises data center? And how do you hide the details of asset location from clients?

NGINX Plus enables you to separate your access control logic from your asset storage. Your access control application performs the authentication operations (checking a cookie, verifying credentials, and so on) and has the metadata it needs to instruct NGINX Plus to serve a login form, an access‑denied form, or the requested resource as appropriate. NGINX Plus proxies the resources at all times and never reveals their actual URLs to the client, so a devious user has no way of circumventing NGINX Plus and accessing a resource directly.

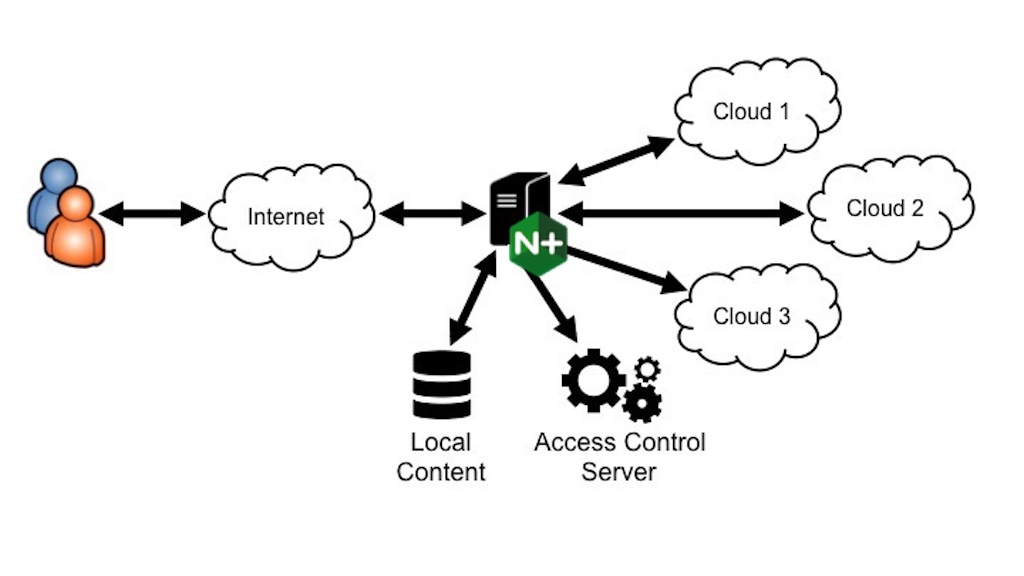

This idea extends to even more sophisticated use cases, such as assets stored in a hybrid or multicloud architecture. Using multiple services enables companies to find the best price on data storage, avoid vendor lock‑in, and prepare for disaster recovery. For example, some assets can be stored in a local on‑premises data center and served directly by NGINX Plus, while other assets are stored on multiple public or private cloud services. In one such deployment, NGINX Plus and the access control application run in the local on‑premises data center, while assets are distributed across local storage and three different cloud storage services.

NGINX Plus supports this functionality through the X-Accel-Redirect response header, a mechanism also sometimes known as XSendfile. The header is set by the proxied application server, typically one of the servers in an upstream group (defined by an upstream configuration block). Its value gives the actual URL of the asset for NGINX Plus to serve and triggers an internal redirect. The client never sees that URL, and a location marked internal cannot be requested directly by clients. To better understand how this works, let’s look at the request/response flow:

- The client makes a request to download a file. NGINX Plus receives the request and passes it to an upstream server hosting the access control application.

- The upstream server checks whether the user is logged in and authorized to access the requested resource. If not, the user is redirected to a login or access‑denied form.

- If the user is logged in and authorized to access the resource, the upstream server records the resource’s internal URL in the X-Accel-Redirect header.

- NGINX Plus uses the URL from the X-Accel-Redirect header to perform an internal redirect and serves the content, either locally or from a cloud server, discarding the actual response body returned by the access control server.
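As a rough illustration of the decision the access control application makes in this flow, here is a minimal Python sketch. It is not code from this post. The `Request` fields, the `/login` and `/access-denied` URLs, and the `INTERNAL_URIS` table are all hypothetical, and real authentication (cookies, credentials) is assumed to have already run. The function only returns the status and headers an upstream server would send; acting on X-Accel-Redirect is left to NGINX Plus.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set, Tuple

# Hypothetical table: public file name -> internal URI that NGINX Plus can serve.
INTERNAL_URIS = {
    "abc.jpg": "/files/abc.jpg",
    "30-banner": "/internal_redirect/s3.amazonaws.com/nginx-data/30-banner",
}


@dataclass
class Request:
    """Hypothetical request, after the cookie/credential checks have run."""
    filename: str
    user: Optional[str] = None                      # None: not logged in
    allowed: Set[str] = field(default_factory=set)  # files this user may read


def access_control(req: Request) -> Tuple[int, Dict[str, str]]:
    """Return the (status, headers) the upstream application would send."""
    if req.user is None:
        # Not logged in: redirect to the (hypothetical) login form.
        return 302, {"Location": "/login"}
    if req.filename not in req.allowed or req.filename not in INTERNAL_URIS:
        # Logged in but not authorized: redirect to the access-denied form.
        return 302, {"Location": "/access-denied"}
    # Authorized: name the internal URI. NGINX Plus performs the internal
    # redirect and discards whatever body this response carries.
    return 200, {"X-Accel-Redirect": INTERNAL_URIS[req.filename]}


if __name__ == "__main__":
    print(access_control(Request("abc.jpg")))
    # (302, {'Location': '/login'})
    print(access_control(Request("abc.jpg", user="alice", allowed={"abc.jpg"})))
    # (200, {'X-Accel-Redirect': '/files/abc.jpg'})
```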

This flow allows for a division of effort – NGINX Plus performs the functions it is designed for, such as delivering static content and reverse‑proxy load balancing, and the application executes its logic freed from the details of delivering static content. This also allows the URLs used by clients to be unrelated to the actual location of the resource. The content can therefore be moved from cloud to cloud without requiring changes to the URLs advertised to clients or reconfiguring NGINX Plus. The mapping of external URLs to the actual location of the resource is handled by the application. NGINX Plus handles the redirect specified in the X-Accel-Redirect header automatically, simplifying the NGINX Plus configuration.
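The mapping from external URLs to real locations can live in something as simple as a lookup table in the application. The Python sketch below assumes a hypothetical `BACKENDS` table (storage.example.net is a placeholder host) and an application‑side `catalog`. Moving an asset means changing one catalog entry, while the public /download/ URL and the NGINX Plus configuration stay the same.

```python
# Hypothetical internal URI prefixes, one per storage backend. "local" maps to an
# NGINX Plus location with `root`; the cloud backends share one generic
# /internal_redirect/ location, so switching clouds needs no NGINX Plus change.
BACKENDS = {
    "local": "/files/",
    "s3": "/internal_redirect/s3.amazonaws.com/nginx-data/",
    "other-cloud": "/internal_redirect/storage.example.net/assets/",  # placeholder host
}

# Hypothetical catalog kept by the application: public file name -> backend.
catalog = {"abc.jpg": "local", "30-banner": "s3"}


def internal_uri(name: str) -> str:
    """Return the X-Accel-Redirect value for the public URL /download/<name>."""
    return BACKENDS[catalog[name]] + name


print(internal_uri("abc.jpg"))  # /files/abc.jpg
catalog["abc.jpg"] = "s3"       # move the asset to Amazon S3
print(internal_uri("abc.jpg"))  # /internal_redirect/s3.amazonaws.com/nginx-data/abc.jpg
```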

Configuring NGINX Plus to Serve Local Files or Externally Hosted Files

Serving Local Files

Here is a snippet of NGINX Plus configuration and the PHP code for handling a simplified version of the use case discussed above, where NGINX Plus serves files locally. In this example, when a client accesses the URL /download/filename, NGINX Plus directs it to /download.php?path=filename. The download.php program sets the X-Accel-Redirect header to /files/filename, which causes NGINX Plus to do an internal redirect and serve the file /var/www/files/filename from the /files location. To keep the example simple, we have omitted the authorization logic as well as the processing that determines the internal URL to return.
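The chain of rewrites for one request can be traced with a short Python sketch. It only imitates the behavior of NGINX Plus with string operations and is not how NGINX Plus is implemented. It assumes the host, port, and paths used in this example, and the `trace` function is hypothetical.

```python
import re

# Mirrors `location ~* ^/download/(.*)`: ~* means a case-insensitive regex.
DOWNLOAD = re.compile(r"^/download/(.*)", re.IGNORECASE)


def trace(client_uri: str) -> list:
    """Follow one request through the local-file example, step by step."""
    m = DOWNLOAD.match(client_uri)
    if m is None:
        raise ValueError(f"{client_uri!r} is not handled by the /download/ location")
    filename = m.group(1)
    # proxy_pass http://127.0.0.1:8080/download.php?path=$1;
    proxied = f"http://127.0.0.1:8080/download.php?path={filename}"
    # download.php answers with X-Accel-Redirect: /files/<filename>
    redirect = f"/files/{filename}"
    # `root /var/www;` appends the whole URI (including /files) to the root
    on_disk = "/var/www" + redirect
    return [proxied, redirect, on_disk]


for step in trace("/download/abc.jpg"):
    print(step)
# http://127.0.0.1:8080/download.php?path=abc.jpg
# /files/abc.jpg
# /var/www/files/abc.jpg
```

The last step is why the file lives in /var/www/files rather than /var/www. The root directive adds the full URI, including the /files prefix, to /var/www.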

The NGINX Plus configuration:

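```nginx
server {
    listen 80;
    server_name www.example.com;

    # Pass /download/<filename> to the access control application
    location ~* ^/download/(.*) {
        proxy_pass http://127.0.0.1:8080/download.php?path=$1;
    }

    # Reachable only through an internal redirect;
    # /files/abc.jpg maps to /var/www/files/abc.jpg
    location /files {
        root /var/www;
        internal;
    }
}
```

The PHP program: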

```php
<?php
# Get the file name
$path = $_GET["path"];

# Do an internal redirect using the X-Accel-Redirect header
header("X-Accel-Redirect: /files/$path");

# PHP will default Content-Type to text/html.
# If left blank, NGINX Plus sets it correctly
header('Content-Type:');
?>
```

Suppose that the PHP program is available at 127.0.0.1:8080, the file abc.jpg is in the /var/www/files directory on the NGINX Plus server, and www.example.com is mapped to the IP address of the NGINX Plus server. Given this sample configuration, when a user accesses http://www.example.com/download/abc.jpg, the contents of abc.jpg are displayed in the browser and the URL remains http://www.example.com/download/abc.jpg, even though the file is actually served from the /var/www/files directory.
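If the access control application is not written in PHP, the same upstream behavior takes only a few lines in other languages. Here is a minimal WSGI sketch in Python (standard library only) that mirrors download.php. The `download_app` name and the 404 response for empty or suspicious paths are assumptions of this sketch, not part of the original example. Authorization is still left out.

```python
from urllib.parse import parse_qs


def download_app(environ, start_response):
    """WSGI counterpart of download.php: hand the file off to NGINX Plus."""
    params = parse_qs(environ.get("QUERY_STRING", ""))
    path = params.get("path", [""])[0]
    # Hypothetical safeguard (the PHP example has none): reject empty names
    # and anything that tries to climb out of /files/.
    if not path or path.startswith("/") or ".." in path.split("/"):
        start_response("404 Not Found", [("Content-Type", "text/plain")])
        return [b"not found\n"]
    # X-Accel-Redirect tells NGINX Plus to serve /files/<path> internally.
    # No Content-Type is sent, mirroring header('Content-Type:') in the PHP.
    start_response("200 OK", [("X-Accel-Redirect", f"/files/{path}")])
    return [b""]  # NGINX Plus discards the body anyway
```

To try it behind the configuration above, you could serve it on 127.0.0.1:8080 with `wsgiref.simple_server.make_server("127.0.0.1", 8080, download_app).serve_forever()`. wsgiref is a reference server suited to testing rather than production.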

Serving External Files

Here is a snippet of NGINX Plus configuration and the PHP code for handling another use case: retrieving resources from an external server, in this case Amazon S3. In this example, the client still enters the URL path /download/filename and NGINX Plus still directs this to /download.php?path=filename, but the download.php program sets the X-Accel-Redirect header to /internal_redirect/s3.amazonaws.com/nginx-data/filename, which causes NGINX Plus to do an internal redirect and proxy the request to Amazon S3. Again, to keep the example simple we have left out the authorization logic as well as the processing that determines the internal URL to return.

The NGINX Plus configuration:

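```nginx
server {
    listen 80;
    server_name www.example.com;

    location ~* ^/download/(.*) {
        proxy_pass http://127.0.0.1:8080/download.php?path=$1;
    }

    # Reachable only through an internal redirect, such as X-Accel-Redirect
    location ~* ^/internal_redirect/(.*) {
        internal;
        # proxy_pass uses a variable, so the host name in $1 is resolved at request time
        resolver 8.8.8.8;
        # $1 is host + path, for example s3.amazonaws.com/nginx-data/30-banner
        proxy_pass http://$1;
    }
}
```

The PHP program: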

```php
<?php
# Host and bucket path of the files on Amazon S3
$prefix = "s3.amazonaws.com/nginx-data/";

# Get the file name
$path = $_GET["path"];

# Do an internal redirect using the X-Accel-Redirect header
header("X-Accel-Redirect: /internal_redirect/$prefix$path");

# PHP defaults Content-Type to text/html.
# If left blank, NGINX Plus sets it correctly
header('Content-Type:');
?>
```

Using this configuration, when a client accesses http://www.example.com/download/30-banner, the NGINX Plus AMI banner page is displayed in the browser. The URL remains http://www.example.com/download/30-banner even though the page was served from Amazon S3. In a real‑world use case, the PHP program would include logic for deciding how NGINX Plus responds to the client’s request. You might also need to set additional headers in NGINX Plus and the PHP program and use additional NGINX Plus directives, but these examples have been simplified to show just the basics of what is required.
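The following Python sketch shows how the header value from download.php turns into a request to Amazon S3 by imitating the two steps with string operations. It is an illustration only, not NGINX Plus internals. The function names are hypothetical, and the prefix and file name are the ones from this example.

```python
import re

# Mirrors `location ~* ^/internal_redirect/(.*)` (case-insensitive regex).
INTERNAL_REDIRECT = re.compile(r"^/internal_redirect/(.*)", re.IGNORECASE)

# Same value as $prefix in the PHP program.
PREFIX = "s3.amazonaws.com/nginx-data/"


def x_accel_redirect(path: str) -> str:
    """The header value download.php builds: /internal_redirect/$prefix$path."""
    return f"/internal_redirect/{PREFIX}{path}"


def upstream_url(redirect_uri: str) -> str:
    """What `proxy_pass http://$1;` expands to for an internal URI."""
    m = INTERNAL_REDIRECT.match(redirect_uri)
    if m is None:
        raise ValueError(f"{redirect_uri!r} does not match the internal location")
    return "http://" + m.group(1)


uri = x_accel_redirect("30-banner")
print(uri)                # /internal_redirect/s3.amazonaws.com/nginx-data/30-banner
print(upstream_url(uri))  # http://s3.amazonaws.com/nginx-data/30-banner
```

The target host comes from the URI itself, so the internal directive is what keeps this location safe. Without it, a client could request /internal_redirect/ followed by any host name directly and use NGINX Plus as an open proxy.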

Summary

Using NGINX Plus and the X-Accel-Redirect header gives you the flexibility to run NGINX Plus and store your files in different places, and to easily grow and change your environment. NGINX Plus can run, and your data can be stored, in local data centers as well as public, private, and hybrid cloud environments. Your files stay secure and the actual location of the resources stays hidden from clients. As more and more companies move to cloud computing, the need for this type of functionality will grow.
