Microservices is the most popular development topic among readers of the NGINX blog, and we have published regularly on microservices design and development for the past two years. To help you adopt microservices, this post identifies some of the foundational posts we’ve published on the subject.

Many of our leading blog posts come from three series on microservices development. Our first series describes how Netflix adopted microservices enthusiastically, using NGINX as a core component of their architecture.

Netflix then shared their work with the open source community. The lessons they learned are described in three blog posts covering:

- Architectural design for microservices apps

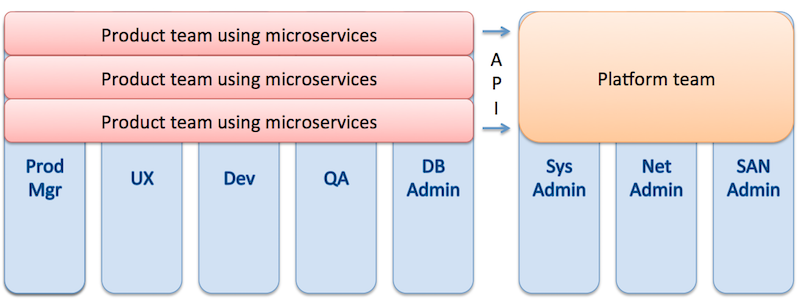

- Team and process design for implementing microservices

- The crucial role of NGINX at the heart of the Netflix CDN

microservices development. Source: Adrian Cockroft

Our second series, Microservices: From Design to Deployment, is a conceptual introduction to microservices that also addresses practical concerns, such as:

- Interprocess communication between services

- Service discovery, allowing services to find and communicate with each other

- Converting a monolithic application into a microservices app

These blog posts help you build microservices applications and optimize their performance.

We’ve pulled this seven-part series into an eBook with examples for NGINX Open Source and NGINX Plus. The principles it describes also form the foundation of our webinar, Connecting and Deploying Microservices at Scale.

Our third series began as a microservices example but grew into our NGINX Plus‑powered Microservices Reference Architecture (MRA). The MRA is a microservices platform: a set of predeveloped models for building microservices applications.

- The Proxy Model puts a single NGINX Plus server in the reverse proxy position. From there, it can manage client traffic and control microservices.

- The Router Mesh Model adds a second NGINX Plus server. The first server proxies traffic and the second server controls microservices functionality.

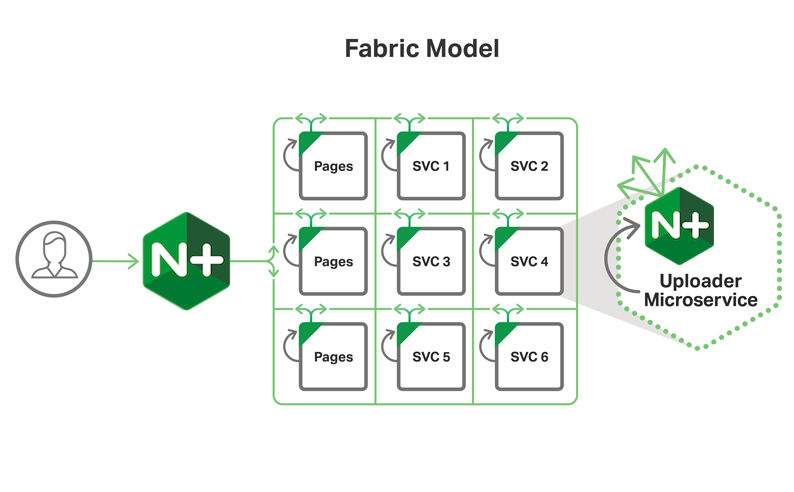

- The Fabric Model is the most innovative. There’s still one NGINX Plus server in front, proxying traffic. But, instead of a second server to control the services, there’s one NGINX Plus instance per service instance. With its own instance of NGINX Plus, each service instance hosts its own service discovery, load balancing, security configuration, and other features. The Fabric Model allows SSL/TLS support for secure microservices communications with high performance, because individual NGINX Plus instances support robust persistent connections.
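To make the Fabric Model’s division of labor a little more concrete, here is a minimal Python sketch, not NGINX Plus code, of what a per-instance proxy does conceptually. It resolves a service name to one of that service’s instances (a stand-in for service discovery), rotates across those instances round-robin (load balancing), and reuses one connection per upstream so that a simulated TLS handshake happens only once per peer. The `LocalProxy` class, the registry dictionary, and the addresses are hypothetical illustrations; in a real deployment this behavior is configured in NGINX Plus rather than written as application code.

```python
import itertools


class LocalProxy:
    """Hypothetical per-service-instance proxy (illustration only, not NGINX Plus).

    Picks an upstream instance round-robin and reuses a simulated persistent
    connection per upstream address, counting how many "handshakes" occur.
    """

    def __init__(self, registry):
        # registry: hypothetical mapping of service name -> list of addresses
        self._registry = {name: list(addrs) for name, addrs in registry.items()}
        self._cursors = {}      # service name -> round-robin iterator
        self._connections = {}  # upstream address -> simulated persistent connection
        self.handshakes = 0

    def update(self, service, addresses):
        # Service discovery update: replace the instance list and restart rotation
        self._registry[service] = list(addresses)
        self._cursors[service] = itertools.cycle(self._registry[service])

    def pick(self, service):
        # Round-robin load balancing across the known instances of a service
        if not self._registry.get(service):
            raise LookupError(f"no known instances for service {service!r}")
        if service not in self._cursors:
            self._cursors[service] = itertools.cycle(self._registry[service])
        return next(self._cursors[service])

    def route(self, service):
        addr = self.pick(service)
        conn = self._connections.get(addr)
        if conn is None:
            # Simulated TLS handshake: only on the first request to this upstream
            self.handshakes += 1
            conn = {"addr": addr, "requests": 0}
            self._connections[addr] = conn
        conn["requests"] += 1  # later requests reuse the same connection
        return addr


proxy = LocalProxy({"pages": ["10.0.0.5:443", "10.0.0.6:443"]})
targets = [proxy.route("pages") for _ in range(4)]
print(targets, proxy.handshakes)
```

In this sketch, four requests alternate between the two instances but trigger only two handshakes, which is the intuition behind pairing per-instance proxies with persistent connections.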

Our strong focus on microservices will continue in 2017, beginning with an eBook detailing our Microservices Reference Architecture. To experiment with microservices yourself, start a free 30-day trial of NGINX Plus. To stay up to date with our microservices content and more, sign up for the NGINX newsletter.