The AWS re:Invent conference was held October 6–9, 2015 in Las Vegas.

Amazon Web Services (AWS), the leading provider of cloud‑based computing services, is a great resource and platform for web application development. AWS customers include big names such as Adobe, Airbnb, NASA, Netflix, Slack, and Zynga.

You can use AWS for prototyping, for mixed deployments (alongside physical servers that you manage directly), or for AWS‑only deployments. The performance you see on AWS can, however, vary widely, just as on any other public cloud – but you don’t have the same direct control of your AWS deployment that you do for servers that you buy and manage yourself. So AWS users have mastered a number of optimizations to get the most out of the environment.

Since its introduction in mid‑2013, NGINX Plus has become extremely popular on AWS; more than 40% of all AWS implementations use NGINX or NGINX Plus. A big part of the reason is performance. NGINX Plus is widely used for load balancing across instances, and other purposes; it handles spikes in traffic with better price/performance than many alternatives.

With these realities in mind, this blog post gives you tips you can use to get the best performance out of Amazon Web Services. We include specifics about how to implement these tips using NGINX Plus, where applicable. Use these tips to make immediate improvements in AWS performance, and to start a process of progressive improvement that will allow you to get the most from your AWS‑based efforts.

Note: For ideas on improving the performance for all your web applications, wherever they’re hosted, see 10 Tips for 10x Performance on our blog.

Tip 1 – Plan for Performance

Experts are using the term “cloud hangover” to describe some of the difficulties that can come with a cloud deployment, such as unexpected costs to maintain, support, and run your cloud‑based application.

There’s a place for tactical cloud adoption – experiments and “quick fixes” that get an app delivered quickly or cut costs. Use tactical efforts to gain experience and accumulate data about cloud‑based development and deployment.

As you grow your efforts, take the time to create a plan. You need to manage your suppliers, hire or train people to gain needed competencies, and understand future needs for app monitoring and management.

Also consider:

- Data movement – Identify what data you are accepting and delivering, what security is required – too little exposes you to data loss, too much will cost too much money – and your backup needs.

- High availability – Using a reverse proxy server, load balancing, and request routing all make your implementation more flexible, adaptable, and robust. Consider dispersing your NGINX and other server instances across multiple Availability Zones, for redundancy, and using Auto Scaling to handle spikes in traffic.

- Enhanced networking – The AWS enhanced networking capability offers higher performance (more packets per second) with lower latency. Enhanced networking is only available on specific instance types in specific configurations, so assess your networking and other needs before you commit to it. There is no additional cost.

- Storage types – Consider using SSD‑backed Amazon Elastic Block Store (EBS) storage by default. EBS storage is faster, has higher capacity, and keeps your data and OS state persistent – but costs are likely to be higher.

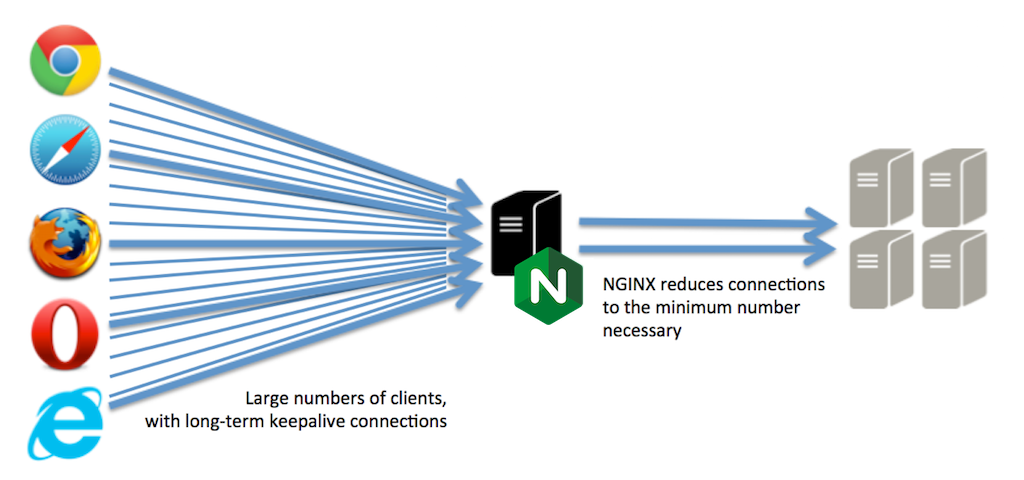

Include NGINX Plus in your early efforts. NGINX Plus is well suited for use as a reverse proxy server (to isolate request management from application processing), for load balancing (usually in combination with Amazon Elastic Load Balancing), and for request routing among heterogeneous application servers. NGINX Plus can support a very large number of client connections while greatly reducing traffic to application servers.

Plan for future implementations, using lessons you learn from your initial efforts to more realistically estimate resource needs and costs. Continue adjusting your plan as you implement it.

Tip 2 – Implement Load Balancing

Load balancing distributes requests across multiple application servers. Given that server performance is more variable in a cloud environment than when using servers you control directly, load balancing is even more important in the cloud than elsewhere.

The AWS Elastic Load Balancing (ELB) capability distributes application traffic across multiple Elastic Compute Cloud (EC2) instances. ELB provides fault tolerance and seamlessly adjusts to changes in the amount and sources of traffic. ELB is quite flexible and can work across Availability Zones. It supports AWS Auto Scaling and complex architectures.

For greater control, use one or more instances of NGINX Plus “below” ELB. NGINX Plus offers greater configuration control, precise logging, application health checks, session persistence, SSL termination, HTTP/2 termination, WebSocket support, caching, and dynamic reconfiguration of load‑balanced server groups. To learn more, download our ebook, Five Reasons to Choose a Software Load Balancer. You can get basic configuration instructions in Load Balancing with NGINX and NGINX Plus, Part 1.

Load balancing helps with several potential performance issues found in cloud environments:

- Running out of memory – It’s all too easy to run out of memory on an instance. Use load balancing, along with the other tips listed here, to help you adjust the demands you make on each instance to run within its available memory.

- Inconsistent performance – Instances of the same type can vary widely in performance. Use load balancing to help you avoid bottlenecks on any one instance – whether that’s due to transient problems, continuing slow performance over time, or overloading.

- Bandwidth constraints – Cloud storage volumes may have less bandwidth than hardware disks. Use load balancing and the other tips listed here to help you spread bandwidth demands across instances.
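
To make the point about inconsistent performance concrete, here is a minimal Python sketch of least‑connections balancing, one common way to steer traffic away from an instance that is running slowly. The `Instance` and `LeastConnectionsBalancer` names are hypothetical, invented for this illustration; they are not part of ELB or NGINX Plus, and a production load balancer also handles health checks, weights, and concurrency.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Instance:
    """Hypothetical stand-in for one application server instance."""
    name: str
    active: int = 0  # requests currently in flight on this instance


class LeastConnectionsBalancer:
    """Illustrative balancer: send each request to the least busy instance."""

    def __init__(self, instances: List[Instance]):
        if not instances:
            raise ValueError("at least one instance is required")
        self.instances = instances

    def acquire(self) -> Instance:
        # min() returns the first instance with the lowest count on ties.
        chosen = min(self.instances, key=lambda inst: inst.active)
        chosen.active += 1
        return chosen

    def release(self, instance: Instance) -> None:
        # Call this when the request routed to `instance` has finished.
        instance.active -= 1


balancer = LeastConnectionsBalancer([Instance("fast"), Instance("slow")])
first = balancer.acquire()   # both idle, so the first listed instance is chosen
second = balancer.acquire()  # "fast" is busy, so "slow" is chosen
balancer.release(first)      # "fast" finishes its request first
third = balancer.acquire()   # "fast" is idle again and gets the next request
```

Because a slow instance holds its connections longer, its count stays high and it is picked less often, without any explicit measurement of its speed.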

NGINX Plus extends ELB with capabilities that include request routing, URL rewriting, URL redirecting, WebSocket support, SPDY and HTTP/2 support, advanced application health checks, connection limits, and rate limits. Auto Scaling is only available through ELB, so use an integrated NGINX Plus/ELB implementation if you want to combine Auto Scaling with NGINX Plus capabilities.

For details, including a matrix comparing ELB, NGINX Plus, and ELB and NGINX Plus combined, see NGINX Plus and Amazon Elastic Load Balancing on AWS on our blog.

Tip 3 – Cache Static and Dynamic Files

Caching is a very widely used performance improvement, and there’s every reason to include robust caching support in your AWS‑based application. You use caching to put files closer to the end user and onto instances tailored to quickly deliver the specific type of file you’re caching. Caching mitigates the effects of latency and offloads application servers from the busywork of moving static files around.

Caching is a core application for NGINX Plus on AWS. The flexibility of AWS makes it easier for you to implement and compare different caching approaches, progressing toward an optimal caching implementation for each web application. You can cache files within AWS regions for optimal performance. With load balancing and caching in place, choose a mix of compute optimized, storage optimized, and other EC2 instances to meet your needs, which can help you reduce costs.

Much of the art of maximizing cloud hosting performance is matching instance types to specific needs for CPU power, memory, processing speed, storage amount, and bandwidth. Caching allows you to tune your requirements to match specific instance types, both for the instances that cache, and the instances that are offloaded by caching and thereby able to do more processing. This helps you avoid the performance problems – running out of memory, inconsistent performance, and bandwidth constraints – mentioned in the previous Tip.

While NGINX Plus is a very fast, high‑performance caching server for static files, you can also usefully cache dynamic files. As requests increase rapidly, application servers can become a delivery bottleneck, with performance slowing disproportionately to traffic increases. To speed application performance, implement microcaching – caching newly produced files from the application server for a few seconds. Users still get fresh content, while a high proportion of requests are met by the cached copy. (If a file is cached for 10 seconds, and the server is receiving 10 requests per second, then 99% of requests are filled quickly by a cached copy.)
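
The arithmetic in that example can be checked with a small simulation. The Python sketch below is an illustration under stated assumptions, not how NGINX Plus implements its cache: `MicroCache` and `simulate` are hypothetical names, requests are assumed to arrive evenly spaced for a single URL, and time is driven by a fake clock so the run is deterministic.

```python
import time
from typing import Callable, Dict, Tuple


class MicroCache:
    """Hypothetical cache that keeps each response for only a few seconds."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._store: Dict[str, Tuple[float, str]] = {}  # key -> (stored_at, body)
        self.hits = 0
        self.misses = 0

    def get(self, key: str, fetch: Callable[[str], str]) -> str:
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and now - entry[0] < self.ttl_seconds:
            self.hits += 1
            return entry[1]
        # Missing or expired: ask the application server and remember the result.
        self.misses += 1
        body = fetch(key)
        self._store[key] = (now, body)
        return body


def simulate(ttl_seconds: float, requests_per_second: int,
             duration_seconds: int) -> float:
    """Replay evenly spaced requests for one URL and return the hit ratio."""
    fake_now = [0.0]
    cache = MicroCache(ttl_seconds, clock=lambda: fake_now[0])
    total = requests_per_second * duration_seconds
    for i in range(total):
        fake_now[0] = i / requests_per_second
        cache.get("/index.html", lambda key: f"rendered {key}")
    return cache.hits / total


ratio = simulate(ttl_seconds=10, requests_per_second=10, duration_seconds=10)
```

With a 10‑second lifetime and 10 requests per second over 10 seconds, one request out of 100 reaches the application server and the other 99 are served from the cache, which matches the 99% figure. Real traffic is burstier and spread over many URLs, so treat the number as an upper bound for this idealized case.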

To learn more, see our blog post, A Guide to Caching with NGINX.

Tip 4 – Benchmark and Monitor App Performance

Cloud services are different enough from traditional implementations that it’s important to set standards for performance in advance, then revise them as you learn more. You will always want to be able to compare the price/performance of an app which is running in the cloud to the performance you could expect from the same app when run on servers that you own and manage directly.

Set standards for page load time, time to first byte appearing onscreen, transactions initiated, transactions completed, and other measurements used for monitoring web app performance. Be prepared to compare app development time and ongoing support requirements for cloud and directly managed server implementations. This way, you can manage not only specific apps, but a portfolio of apps across different kinds of implementations.
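
One way to turn such standards into something you can check automatically is to compare measured percentiles against a budget. The following Python sketch assumes you already collect timing samples in milliseconds from a monitoring tool of your choice; the function names, metric names, and thresholds are hypothetical placeholders, and the nearest‑rank percentile used here is just one of several accepted definitions.

```python
import math
from typing import Dict, List, Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample that has at least
    pct percent of all samples at or below it."""
    if not samples:
        raise ValueError("no samples to summarize")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in the range (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def budget_violations(measurements: Dict[str, Sequence[float]],
                      budgets_ms: Dict[str, float],
                      pct: float = 95) -> List[str]:
    """Return the metrics whose pct-th percentile exceeds the agreed budget."""
    violations = []
    for metric, budget in budgets_ms.items():
        if percentile(measurements[metric], pct) > budget:
            violations.append(metric)
    return violations


# Illustrative numbers only; they are not measurements from a real deployment.
measurements = {
    "page_load": [900, 1100, 1200, 1500, 2600],
    "time_to_first_byte": [80, 90, 110, 120, 150],
}
budgets_ms = {"page_load": 2000, "time_to_first_byte": 200}
failing = budget_violations(measurements, budgets_ms)
```

Running the same check against samples from a cloud deployment and from directly managed servers gives you a like‑for‑like comparison against the same standard.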

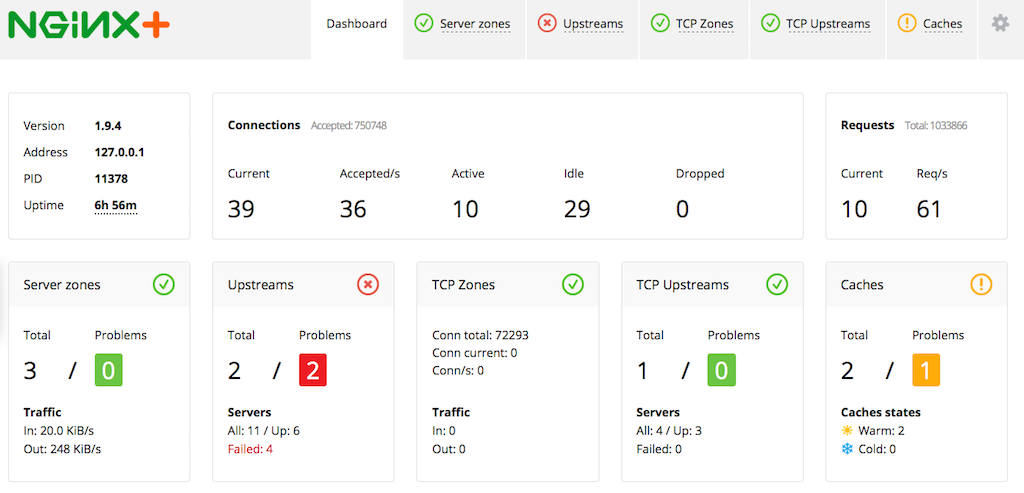

Amazon CloudWatch provides integrated monitoring for applications running on AWS. You can monitor resource utilization and application performance, and perform simple health checks. NGINX Plus adds advanced health checks and sophisticated, live activity monitoring. You can view the dashboard in NGINX Plus directly or connect NGINX Plus statistics to other dashboards and third‑party tools. You get stats for connections, requests, uptime and more – per server, per group, and across your entire deployment.

Tip 5 – Use a DevOps Approach in the Cloud

Using a DevOps approach – where application development and operations practices are unified to meet technical and business goals – is the new standard for effective use of the web. DevOps is even sometimes referred to as DevOpsSec, to include security architecture and real‑time responsiveness to breaches in the mix.

The goal of DevOps has been described simply: to “create a high freedom, high responsibility culture with less process”. Cloud implementations are uniquely suited to support a DevOps approach due to their flexibility, scalability, and easy access to modern tools. You can try variations in your implementation quickly and easily, rapidly scale an app with nearly infinite resources as needed (very useful for testing), and use DevOps tools such as NGINX Plus, Puppet, Chef, and ZooKeeper. Difficult tasks such as comparing HTTP/1 performance to HTTP/2 performance are more easily accomplished in a cloud environment.

The key technique for supporting a DevOps approach to implementation is the use of a microservices architecture, which begins with refactoring monolithic applications into small, interconnected services. A microservices architecture is well suited to an AWS implementation, especially if you use load balancing, caching, ongoing monitoring, and a DevOps approach.

With AWS and NGINX Plus, you have robust monitoring tools (as described in the previous Tip); in any cloud implementation, you can quickly change code, tools, and implementation architecture. Use this flexibility to implement a robust DevOps approach in your AWS environment.

You can use cloud implementations as a testbed for new approaches to application development and operations, pointing the way to changes for all the environments you use to deliver applications.

Conclusion

Getting the most out of your AWS implementation is hard work, but the results can be outstanding. By taking a thoughtful approach, you can reach goals that might be much harder to accomplish in a traditional implementation. And you can then flexibly use a combination of cloud and traditional implementations to meet your long‑term goals.

NGINX Plus is an important part of leading AWS implementations and continues to grow in its role as a crucial tool for application delivery in the cloud. You can get started with NGINX Plus on AWS today.

We invite you to share your comments below, or tweet your observations with the hashtags #NGINX and #webperf.