This post is adapted from a webinar by Floyd Smith of NGINX, Inc. and Sandeep Dinesh and Sara Robinson of Google. It is the first of two parts and focuses on running NGINX Plus on Google Cloud Platform (GCP). The second part focuses on NGINX Plus as a load balancer for multiple services on GCP.

You can view the complete webinar on demand. For more technical details on Kubernetes, see Load Balancing Kubernetes Services with NGINX Plus on our blog.

Table of Contents

| Time | Section |
|------|---------|
| 0:00 | Introduction |
| 1:01 | Application Delivery |
| 1:30 | Monoliths vs. Microservices |
| 1:40 | Monolithic Architecture |
| 2:46 | Microservices Architecture |
| 3:09 | NGINX Plus |
| 3:42 | NGINX Plus with Microservices |
| 4:13 | NGINX Plus and the Google Cloud Platform |
| 4:20 | Installing the NGINX Plus VM |
| 4:58 | Setting Up the NGINX Plus VM |
| 5:36 | HA NGINX Plus with GCP |
| 6:30 | Internal Load Balancing |
| 6:44 | NGINX Plus Integration with GCP |
| 7:09 | Load Balancing with NGINX and GCP |
| 8:28 | Google Cloud Platform |
| 8:59 | Google Cloud Platform Products |
| 10:03 | Running NGINX on GCP |
| 10:20 | Cloud Launcher: Setup in 3 Steps |
| 10:45 | Demo |
| | Part 2 |

0:00 Introduction

![Photos of speakers: Floyd Smith of NGINX, Sara Robinson and Sandeep Dinesh of Google Cloud Platform [webinar titled 'Deploying NGINX Plus & Kubernetes on Google Cloud Platform' includes information on how switching from a monolithic to microservices architecture can help with application delivery and continuous integration and was broadcast 23 May 2016]](https://www.nginx.com/wp-content/uploads/2016/05/webinar-GCP-slide2_speakers.png)

Floyd: Hi, I’m Floyd Smith, Technical Marketing Writer at NGINX. I’m here with Sara Robinson and Sandeep Dinesh, who are both Developer Advocates for Google Cloud Platform.

I’m going to give a quick overview of microservices. For those who want to find out more, there are many more resources available on our website.

1:01 Application Delivery

So, the core statement of DevOps is: “Building a great application is half the battle, but delivering it is the other half.” Everyone has to work together to make that happen; you can’t do one in ignorance of the other. We need a new approach to application delivery.

1:30 Monoliths vs. Microservices

There is a movement now in DevOps to transition from traditional large, complex, monolithic applications to a microservices architecture. Some of you may already be on to this, in which case feel free to skip forward a bit, but for everyone else, here’s a very quick overview of what microservices are about.

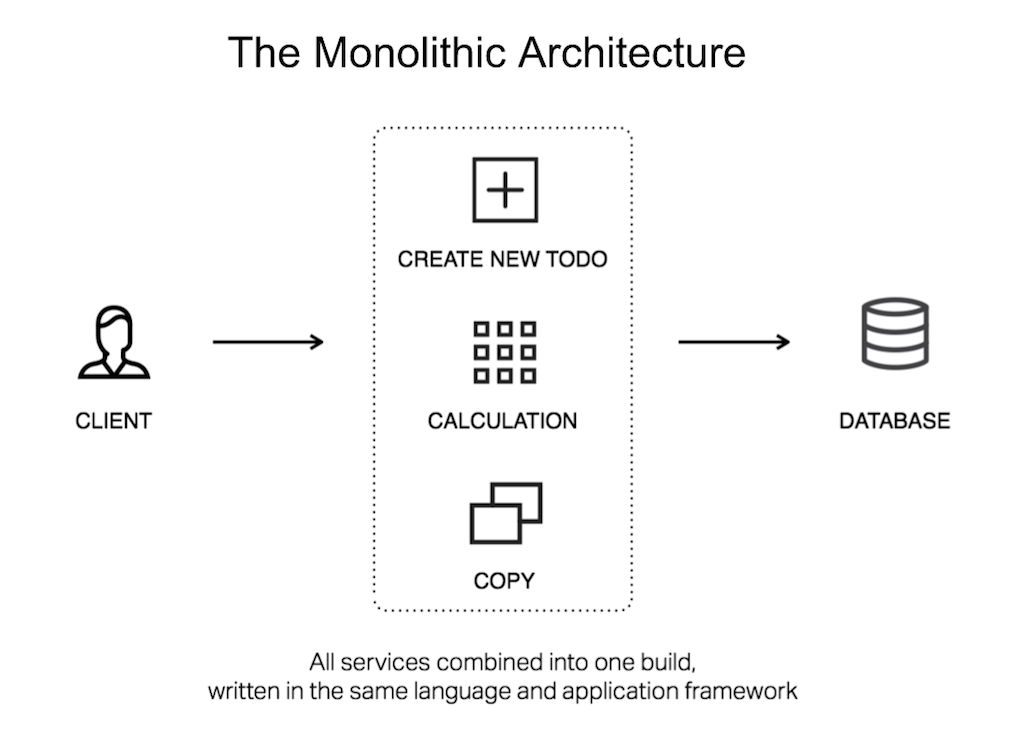

1:40 Monolithic Architecture

![Implications of the monolithic architecture: you must scale the entire monolith; all parts must be written with the same language and framework; to change one service, you must rebuild, retest, redeploy entire monolith [webinar 'Deploying NGINX Plus & Kubernetes on Google Cloud Platform' includes information on how switching from a monolithic to microservices architecture can help with application delivery and continuous integration]](https://www.nginx.com/wp-content/uploads/2016/04/webinar-connecting-apps-docker-slide4_monolithic_architecture1.png)

With monolithic architectures, you’re often dealing with large, complex applications with huge amounts of interconnected code. Changing one thing could accidentally change another; somebody misuses a global variable and you can end up with strange bugs in completely different parts of the program.

If an application needs several different parts worked on at once, there’s a combinatorial explosion of difficulty when it comes to testing and developing new features. That leads to lengthy release cycles, where everyone is scared to commit.

Here’s what a monolithic architecture looks like. Everything’s in one place, which is convenient in some ways but also very limiting.

The way we scale this is to create multiple copies of the monolithic application. This can work, and NGINX Plus is great for helping make it work, but it has a lot of problems. For instance, maintaining state for a specific user across different copies of the monolith becomes a big challenge.
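To make the state problem concrete, here is a minimal Python sketch (not NGINX Plus code) of what a load balancer in front of several monolith copies has to do: spread new traffic round‑robin, but keep sending a given user to the copy that holds that user’s session. The class name, backend addresses, and session IDs are hypothetical.

```python
from itertools import cycle


class StickyRoundRobin:
    """Round-robin over identical monolith copies, pinning each session to one copy."""

    def __init__(self, backends):
        self._next = cycle(backends)  # plain round-robin order for new sessions
        self._pinned = {}             # session ID -> backend that holds its state

    def pick(self, session_id=None):
        if session_id is None:
            return next(self._next)   # stateless request: any copy will do
        if session_id not in self._pinned:
            self._pinned[session_id] = next(self._next)
        return self._pinned[session_id]


lb = StickyRoundRobin(["app1:8080", "app2:8080", "app3:8080"])
print([lb.pick() for _ in range(4)])  # ['app1:8080', 'app2:8080', 'app3:8080', 'app1:8080']
print(lb.pick("alice"), lb.pick("alice"))  # app2:8080 app2:8080
```

NGINX Plus offers session persistence as a built‑in feature; the sketch just shows why it is needed, and why it is fragile: if the pinned copy goes down, that user’s state goes with it.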

2:46 Microservices Architecture

With microservices, we break application functionality down into services that are independent of each other, so you can build and test each one independently.

Containers are a great tool for making microservices work. Once you start using containers, you’ll find that container orchestration becomes critical: scheduling containers, managing them, and service discovery. That’s where Kubernetes comes in.
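Service discovery is what lets one service find another while containers come and go. Kubernetes handles this for you; the minimal Python sketch below only illustrates the idea of a registry that an orchestrator keeps up to date. `ServiceRegistry`, the service name, and the addresses are hypothetical, not a Kubernetes API.

```python
import random
from collections import defaultdict


class ServiceRegistry:
    """Toy registry: the orchestrator registers endpoints as containers start
    and deregisters them as containers stop; clients look services up by name."""

    def __init__(self):
        self._endpoints = defaultdict(set)

    def register(self, service, endpoint):
        self._endpoints[service].add(endpoint)

    def deregister(self, service, endpoint):
        self._endpoints[service].discard(endpoint)

    def lookup(self, service):
        # Return a sorted copy so callers get a stable snapshot.
        return sorted(self._endpoints.get(service, ()))

    def resolve(self, service):
        endpoints = self.lookup(service)
        if not endpoints:
            raise LookupError(f"no registered endpoints for {service!r}")
        return random.choice(endpoints)


reg = ServiceRegistry()
reg.register("cart", "10.0.0.5:8080")
reg.register("cart", "10.0.0.6:8080")
reg.deregister("cart", "10.0.0.5:8080")  # container stopped
print(reg.lookup("cart"))  # ['10.0.0.6:8080']
```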

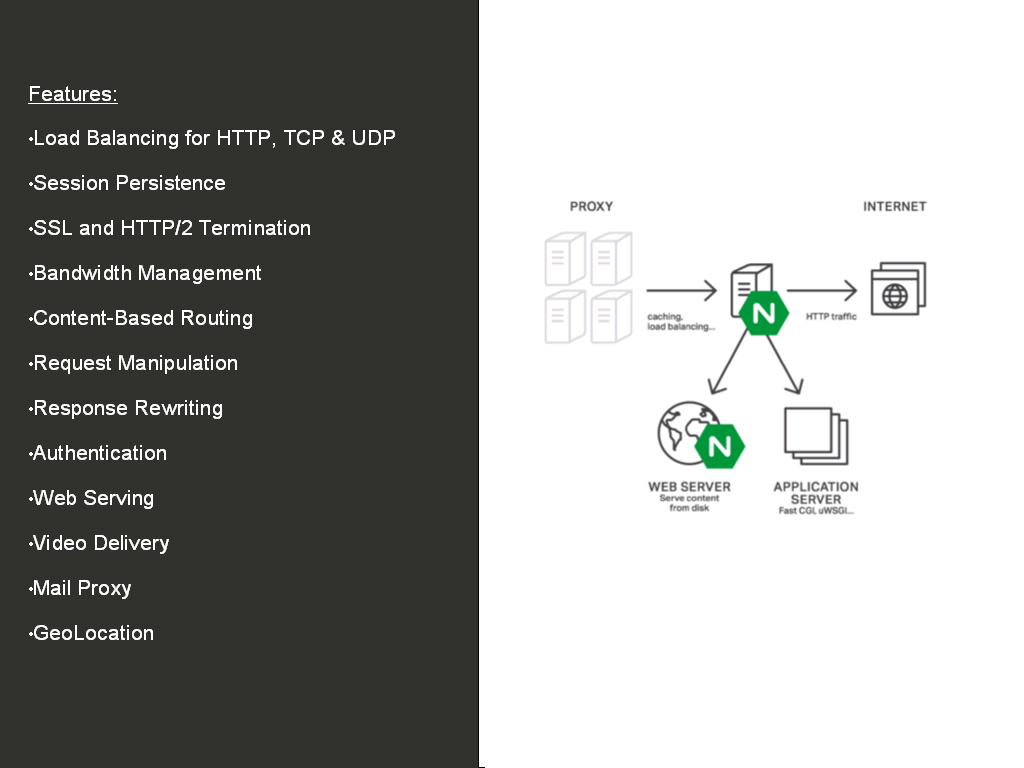

3:09 NGINX Plus

Here are some of the features NGINX Plus provides, whether you’re using a monolithic or microservices architecture, and a high‑level diagram showing where it fits in. Basically, NGINX Plus goes in front of all your other servers. From there, it can route traffic to multiple copies of your monolith, but that’s really a transitional step. Like taking aspirin for a headache, it doesn’t actually solve the problem; it just makes you feel better. Moving to microservices is what actually solves the problem.

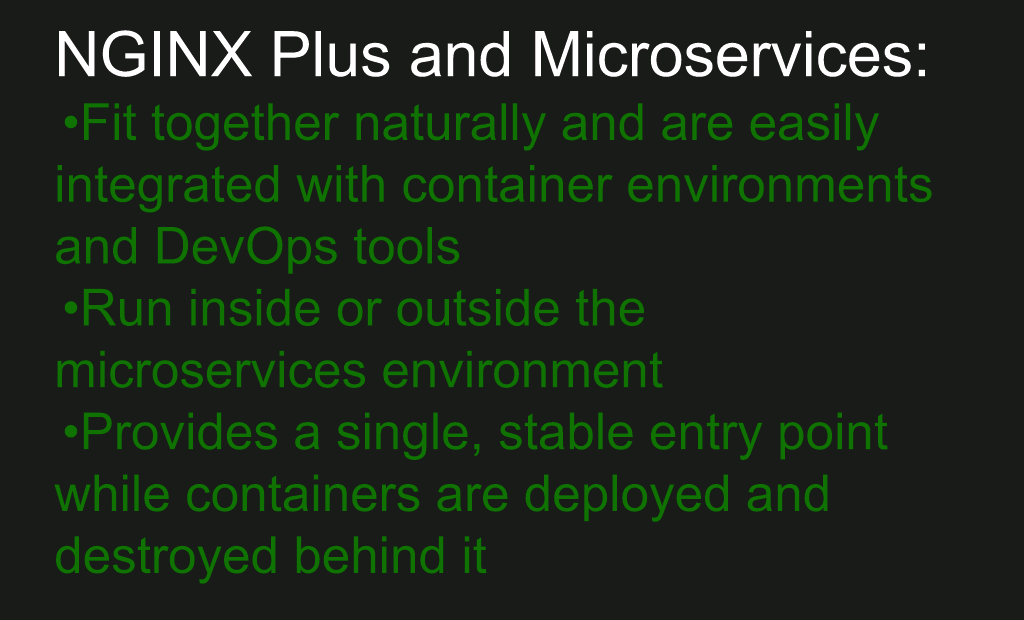

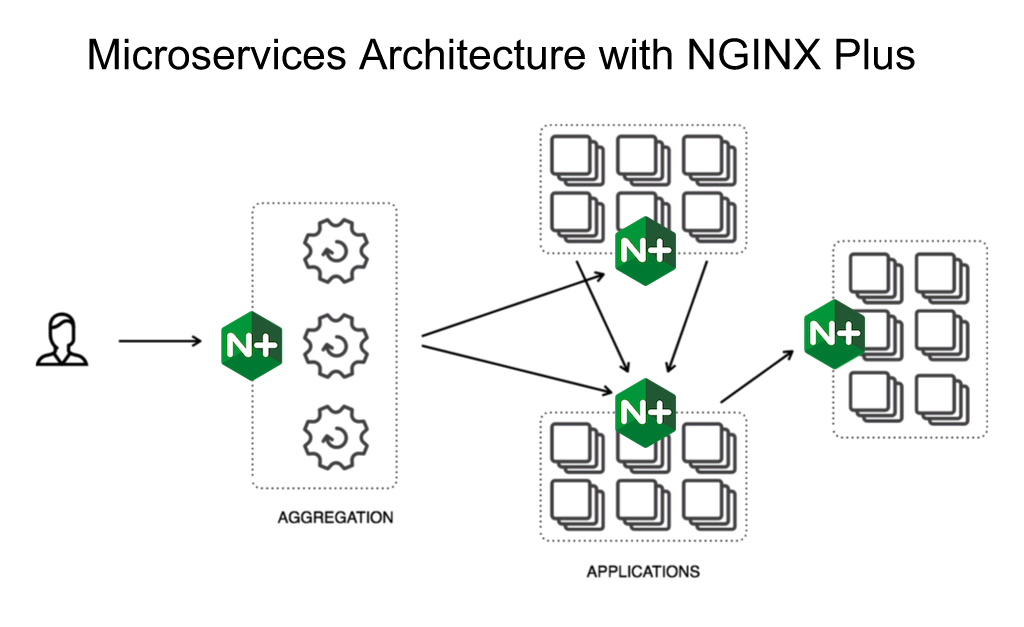

3:42 NGINX Plus with Microservices

NGINX Plus works very well with microservices, and open source NGINX is a very popular image on Docker Hub. You can run NGINX inside the microservices environment, and you can also run it in front.

So, here’s an example of where NGINX fits in a microservices architecture – handling customer‑facing traffic as an aggregation point, as well as interprocess communication on the back end.
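As an aggregation point, NGINX typically routes each request to a service based on its URL path. The Python sketch below loosely models NGINX’s rule for prefix `location` blocks, where the longest matching prefix wins (regex locations are ignored here). The route table and service names are hypothetical.

```python
# Hypothetical path-prefix -> upstream service table.
ROUTES = {
    "/api/users/": "users-service:8080",
    "/api/orders/": "orders-service:8080",
    "/api/": "api-misc:8080",
    "/": "web-frontend:8080",
}


def route(path, routes=ROUTES):
    """Return the upstream whose prefix is the longest match for `path`."""
    matches = [prefix for prefix in routes if path.startswith(prefix)]
    if not matches:
        raise LookupError(path)
    return routes[max(matches, key=len)]


print(route("/api/users/42"))  # users-service:8080
print(route("/about.html"))    # web-frontend:8080
```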

4:13 NGINX Plus and the Google Cloud Platform

NGINX and NGINX Plus are very popular on Google Cloud Platform. I’ll give a quick preview of the setup process, which is super easy, and Sara will give us a full demo later on.

4:20 Installing the NGINX Plus VM

![Screen shot of Cloud Launcher landing page for installing NGINX Plus on GCP [webinar titled 'Deploying NGINX Plus & Kubernetes on Google Cloud Platform' includes information on how switching from a monolithic to microservices architecture can help with application delivery and continuous integration]](https://www.nginx.com/wp-content/uploads/2016/06/webinar-GCP-slide11-installing-NGINX-Plus-VM.png)

This is the Cloud Launcher page for NGINX on Google Cloud Platform. [Editor – Google Cloud Launcher has been replaced by the Google Cloud Platform (GCP) Marketplace.] You can see NGINX Plus is billed per month. Even if you’re still in your development stage, it can be worth working with NGINX Plus. This gives you the full feature set during development, making the switch easier if you decide to go with NGINX Plus for production.

4:58 Setting Up the NGINX Plus VM

![Screen shot of Cloud Launcher page for creating an NGINX Plus instance on GCP [webinar titled 'Deploying NGINX Plus & Kubernetes on Google Cloud Platform' includes information on how switching from a monolithic to microservices architecture can help with application delivery and continuous integration]](https://www.nginx.com/wp-content/uploads/2016/06/webinar-GCP-slide12_setting-up-NGINX-Plus-VM.png)

Google Cloud Platform makes it very easy to get a virtual machine up and running with NGINX Plus. Everything is set to sensible defaults, yet it’s all still highly configurable through dialogs, fields, and even a command‑line interface.

For instance, under Firewall you can see the options Allow HTTP traffic and Allow HTTPS traffic. Each checkbox bundles up a lot of detail that you don’t have to worry about, and if you want to make more detailed changes afterwards, you can.

5:36 HA NGINX Plus with GCP

![You can use Google Cloud Platform's Network Load Balancer to configure high availability for NGINX Plus [webinar titled 'Deploying NGINX Plus & Kubernetes on Google Cloud Platform' includes information on how switching from a monolithic to microservices architecture can help with application delivery and continuous integration]](https://www.nginx.com/wp-content/uploads/2016/06/webinar-GCP-slide13-HA-NGINX-Plus-GCP.png)

Google Cloud Platform has an excellent network load balancer. Google handles a huge amount of traffic, and part of the case for Google Cloud Platform is that it makes that technology available to you at low cost, because offering it is almost a marginal cost to Google on top of the network engineering it already has to do for its core applications.

We always recommend a high‑availability (HA) configuration, which is easy to set up with NGINX Plus on Google Cloud Platform and also gives you greater capacity. Google Cloud Platform lets you configure how many healthy servers must be running at any one time, and the settings for bringing up a replacement when an existing server fails.
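The “keep N healthy servers, replace any that fail” behavior is a reconcile loop. GCP implements it with its own managed tooling; the minimal Python sketch below is not GCP’s API, only a model of the logic described above. `InstancePool`, `target_size`, and the `nginx-plus-N` instance names are hypothetical, and the health check is passed in as a plain function.

```python
import itertools


class InstancePool:
    """Toy autohealing model: keep `target_size` healthy instances running,
    replacing any instance that fails a health check."""

    def __init__(self, target_size, prefix="nginx-plus"):
        self.target_size = target_size
        self._prefix = prefix
        self._ids = itertools.count(1)
        self.instances = set()
        self.reconcile(health_check=lambda name: True)  # initial launch

    def _launch(self):
        name = f"{self._prefix}-{next(self._ids)}"
        self.instances.add(name)
        return name

    def reconcile(self, health_check):
        """Drop instances that fail `health_check`, then launch replacements
        until `target_size` is met. Returns the newly launched names."""
        self.instances = {name for name in self.instances if health_check(name)}
        missing = self.target_size - len(self.instances)
        return [self._launch() for _ in range(missing)]


pool = InstancePool(target_size=2)
print(sorted(pool.instances))  # ['nginx-plus-1', 'nginx-plus-2']
print(pool.reconcile(lambda name: name != "nginx-plus-1"))  # ['nginx-plus-3']
```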

So you have to think a little bit about your architecture going in, but then you’re protected from tons of problems after that.

6:30 Internal Load Balancing

![NGINX Plus can load balance internal services within a Google Compute Engine region [webinar titled 'Deploying NGINX Plus & Kubernetes on Google Cloud Platform' includes information on how switching from a monolithic to microservices architecture can help with application delivery and continuous integration]](https://www.nginx.com/wp-content/uploads/2016/06/webinar-GCP-slide14_internal-LB.png)

With NGINX on GCP you can also create internal load balancing within a region, and you can take advantage of Google’s infrastructure resources worldwide. So, there’s a nice balance there. You can use a regional approach to offer customers a very low latency experience.

6:44 NGINX Plus Integration with GCP

NGINX Plus is well integrated with Google Cloud Platform. There is instant deployment with Cloud Launcher [now GCP Marketplace], as well as integration with GCP’s powerful Logging and Monitoring tools. NGINX Plus itself also has advanced monitoring features. Monitoring is a must‑have in these more complex environments, and this integration lets you mix and match features from the different tools.

7:09 Load Balancing with NGINX and GCP

Sara: Hi, I’m Sara, a Developer Advocate on the Google Cloud Platform team, as is my colleague Sandeep here.

We’re going to tell you all about how you can do load balancing with NGINX and Google Cloud Platform.

We’re going to do a demo of how you can deploy NGINX Plus on Google Cloud Platform with Cloud Launcher [now GCP Marketplace]. Then we’re going to do a deep dive on load balancing Kubernetes with NGINX Plus, including a live demo that we built, and then we’ll wrap up with some resources you can use to learn more.

8:28 Google Cloud Platform

![Google cloud services include search, Gmail, Google Drive, Google Maps, and Android [webinar titled 'Deploying NGINX Plus & Kubernetes on Google Cloud Platform' includes information on how switching from a monolithic to microservices architecture can help with application delivery and continuous integration]](https://www.nginx.com/wp-content/uploads/2016/06/webinar-GCP-slide16_Google-Cloud-apps.png)

As many of you may know, Google runs a lot of applications in the cloud. I’m sure you’ve used many of these applications before, whether it be Google Search, Gmail, Drive, Maps, or Android.

With Google Cloud Platform, you as a developer get access to the same infrastructure that Google uses to run Gmail, Drive, and its other applications.

8:59 Google Cloud Platform Products

![Google Cloud Platform includes tools for big data, storage, computing, connectivity, management, development, and mobile [webinar titled 'Deploying NGINX Plus & Kubernetes on Google Cloud Platform' includes information on how switching from a monolithic to microservices architecture can help with application delivery and continuous integration]](https://www.nginx.com/wp-content/uploads/2016/06/webinar-GCP-slide17_GCP-diagram.png)

Google Cloud Platform has many different products that can help you build and run your application.

We’ve got a whole suite of big data tools. If you want to run complex SQL queries on your data, you can check out BigQuery. For processing data pipelines, we have a tool called Dataflow.

We have a bunch of different storage solutions, whether you’re looking for file storage or structured data. Cloud SQL is our structured relational database, and we also have two NoSQL database offerings.

We also have a suite of compute services and a set of management tools: if you want to log errors in your app or use Cloud Monitoring, we have tools for that.

We have connectivity tools to access data in your applications, and developer tools such as Cloud Source Repositories to host your code. Then we have Firebase, which is an awesome platform for building mobile and web apps.

Today we’re going to focus on the Google Cloud compute offerings, since that’s where you run NGINX Plus on Google Cloud.

10:03 Running NGINX on GCP

![With Cloud Launcher you can spawn preloaded, customizable VMs to run on Google Compute Engine [webinar titled 'Deploying NGINX Plus & Kubernetes on Google Cloud Platform' includes information on how switching from a monolithic to microservices architecture can help with application delivery and continuous integration]](https://www.nginx.com/wp-content/uploads/2016/06/webinar-GCP-slide18_Cloud-Launcher.png)

Let’s look at how you can run NGINX Plus on Google Cloud Platform using Cloud Launcher [now GCP Marketplace].

Cloud Launcher gives you access to a variety of prepackaged solutions. It lets you spawn preloaded, customizable VMs that run those solutions on Google Compute Engine, with one‑click deployment.

10:20 Cloud Launcher: Setup in 3 Steps

![With Cloud Launcher, it's just three steps to set up NGINX Plus in a VM on Google Cloud Platform [webinar titled 'Deploying NGINX Plus & Kubernetes on Google Cloud Platform' includes information on how switching from a monolithic to microservices architecture can help with application delivery and continuous integration]](https://www.nginx.com/wp-content/uploads/2016/06/webinar-GCP-slide19_Cloud-Launcher-setup.png)

As Floyd previewed for us before, to launch NGINX Plus on Google Compute Engine, we just need to open it up in Cloud Launcher [now GCP Marketplace], select our project, create our new VM instance, choose how big we want it to be, and then launch our virtual machine.

I’m going to share my screen right now and give a demo of how that works.

10:45 Demo

Note: The following section includes selections from a live demo. To see the full demo in context, watch the webinar recording.

![Screenshot of Cloud Launcher landing page, showing multiple 'Infrastructure' projects available [webinar 'Deploying NGINX Plus & Kubernetes on Google Cloud Platform' includes information on how switching from a monolithic to microservices architecture can help with application delivery and continuous integration]](https://www.nginx.com/wp-content/uploads/2016/05/Webinar-GCP-demo-1.png)

Right now, I’m in Cloud Launcher [now GCP Marketplace] from my projects. As we can see, there’s a bunch of different solutions here – infrastructure, database, blogs.

We’re here for NGINX Plus, so I’m going to click on that.

![Screenshot showing deployment of NGINX Plus VM for the demo [webinar 'Deploying NGINX Plus & Kubernetes on Google Cloud Platform' includes information on how switching from a monolithic to microservices architecture can help with application delivery and continuous integration]](https://www.nginx.com/wp-content/uploads/2016/05/Webinar-GCP-Demo-2-.gif)

Now, I’m going to launch on Compute Engine and it will create a new deployment. I’m going to call it “NGINX Plus Demo”. For my zone, I’m going to use us-central1-f since that’s where the other resources in this project are located. For the machine type, I have a lot of options, but I’m going to just go with the default.

Now I’m going to click Deploy. And Cloud Launcher is going to take care of acquiring my NGINX Plus certificate and key for me.

The really cool thing is that there’s only going to be one bill. We saw that it estimated an average cost of about $200 a month. Cloud Launcher combines your NGINX Plus charges and your Google Cloud charges, so you don’t have to manage them separately. And you’ll be getting the latest version of NGINX Plus on your virtual machine.

Once it’s deployed, we’ll actually be able to SSH into our VM and check out the default configuration file that we get with NGINX Plus.

![GIF showing access to Google Compute Engine 'VM instances' page for the nginx-plus-demo VM [webinar 'Deploying NGINX Plus & Kubernetes on Google Cloud Platform' includes information on how switching from a monolithic to microservices architecture can help with application delivery and continuous integration]](https://www.nginx.com/wp-content/uploads/2016/05/Webinar-GCP-Demo-3-1.gif)

Now that it’s been deployed, I’m going to go over to my Compute Engine dashboard and I can see that this is the VM that we’ve just deployed.

![GIF showing use of Cloud Shell to access the nginx-plus-demo VM via 'ssh', directly in a browser [webinar 'Deploying NGINX Plus & Kubernetes on Google Cloud Platform' includes information on how switching from a monolithic to microservices architecture can help with application delivery and continuous integration]](https://www.nginx.com/wp-content/uploads/2016/05/Webinar-GCP-Demo-4.gif)

Now I’m going to SSH into it. So, I’m doing this using Cloud Shell, which lets you SSH into your virtual machines on Google Compute Engine directly from the browser. Once I’m logged in, I’ll be able to check if NGINX is running.

In Cloud Shell, I can check my NGINX status by running `/etc/init.d/nginx status`, and as you can see, NGINX is running.

![GIF showing confirmation that the nginx-plus-demo VM is running [webinar 'Deploying NGINX Plus & Kubernetes on Google Cloud Platform' includes information on how switching from a monolithic to microservices architecture can help with application delivery and continuous integration]](https://www.nginx.com/wp-content/uploads/2016/05/Webinar-GCP-Demo-5.gif)

If I click the external IP address, the page that opens also confirms NGINX is running, which is awesome.
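Clicking the external IP is a manual liveness check, and the same check can be scripted. Below is a minimal Python sketch using only the standard library; `http_check` is a hypothetical helper, and `EXTERNAL_IP` is a placeholder for your VM’s address. It reports the HTTP status and the `Server` response header, which NGINX normally fills in with `nginx` (followed by a version number unless `server_tokens` is turned off).

```python
import urllib.error
import urllib.request


def http_check(url, timeout=5.0):
    """GET `url` and return (status_code, Server header).

    Returns (None, None) if nothing answers, e.g. connection refused or timeout.
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status, resp.headers.get("Server")
    except urllib.error.HTTPError as err:
        # A server answered, just not with a 2xx status.
        return err.code, err.headers.get("Server")
    except OSError:
        # URLError (e.g. connection refused) and timeouts are OSError subclasses.
        return None, None


# Hypothetical usage; replace EXTERNAL_IP with the VM's external IP address:
# print(http_check("http://EXTERNAL_IP/"))
```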

![GIF showing contents of the nginx.conf configuration file for the nginx-plus-demo VM [webinar 'Deploying NGINX Plus & Kubernetes on Google Cloud Platform' includes information on how switching from a monolithic to microservices architecture can help with application delivery and continuous integration]](https://www.nginx.com/wp-content/uploads/2016/05/Webinar-GCP-Demo-6.gif)

Now, let’s take a quick look at the configuration file.

I’m going to `cd` into `/etc/nginx/conf.d` and `cat` the `default.conf` file.

Here, we can see that we’re listening on port 80. The most important thing here is this location block, which tells us where the static files are served from; those are the files you saw earlier in the browser.
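The demo doesn’t spell out the location block’s contents, so the following is a simplified Python model that assumes the common packaged defaults of `root /usr/share/nginx/html;` and `index index.html;`. `resolve_static` is hypothetical; it shows how a `root` directive turns a request URI into a file path: the URI is appended to the root, and a trailing slash gets the index file. Real NGINX also percent‑decodes the URI and handles many more cases.

```python
import posixpath


def resolve_static(uri, root="/usr/share/nginx/html", index="index.html"):
    """Map a request URI to a file path, modeling a `root` + `index` pair."""
    path = uri.split("?", 1)[0]            # the query string is not part of the path
    normalized = posixpath.normpath(path)  # collapse "." and ".." so we stay under root
    if path.endswith("/"):
        normalized = posixpath.join(normalized, index)  # directory request -> index file
    return root + normalized


print(resolve_static("/"))                  # /usr/share/nginx/html/index.html
print(resolve_static("/img/logo.png?v=2"))  # /usr/share/nginx/html/img/logo.png
```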

We also get a status page with NGINX Plus, which we’ll talk about a bit later.

![GIF showing how change to NGINX Plus configuration appears immediately in the browser [webinar titled 'Deploying NGINX Plus & Kubernetes on Google Cloud Platform' includes information on how switching from a monolithic to microservices architecture can help with application delivery and continuous integration]](https://www.nginx.com/wp-content/uploads/2016/05/Webinar-GCP-Demo-7.gif)

Now, let’s just take a look at the directory where we are storing the static files.

I’ll just copy the path from the location block. We can cd into that, and then I’m going to open up index.html, make a quick change, and show you that it will be immediately reflected in the browser.

Now I’ll just add a bold tag saying “NGINX+ and GCP are awesome!” and save the file. And now if I refresh the page, I can see my update, which is pretty cool. I did this all through Cloud Shell while SSH’d into my VM.
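The edit shows up immediately because a static file server reads the file from disk on each request, so changing `index.html` needs no restart. The Python sketch below uses the standard library’s `http.server` as a stand‑in for NGINX (it is not NGINX) to demonstrate the same behavior against a temporary directory; `edit_and_refetch` is a hypothetical helper. Changes to NGINX configuration files, by contrast, take effect only after a reload such as `nginx -s reload`.

```python
import functools
import http.server
import pathlib
import tempfile
import threading
import urllib.request


class QuietHandler(http.server.SimpleHTTPRequestHandler):
    def log_message(self, format, *args):
        pass  # suppress per-request logging to keep the demo output clean


def edit_and_refetch(first, second):
    """Serve a temp directory, fetch index.html, rewrite it on disk, fetch again.
    Returns the two response bodies."""
    with tempfile.TemporaryDirectory() as docroot:
        index = pathlib.Path(docroot) / "index.html"
        index.write_text(first)
        handler = functools.partial(QuietHandler, directory=docroot)
        server = http.server.ThreadingHTTPServer(("127.0.0.1", 0), handler)
        threading.Thread(target=server.serve_forever, daemon=True).start()
        url = f"http://127.0.0.1:{server.server_port}/index.html"

        def get():
            with urllib.request.urlopen(url) as resp:
                return resp.read().decode()

        try:
            before = get()
            index.write_text(second)  # edit the file; no server restart or reload
            after = get()
        finally:
            server.shutdown()
            server.server_close()
    return before, after


before, after = edit_and_refetch("<p>Hello</p>", "<p><b>Edited</b></p>")
print(before)  # <p>Hello</p>
print(after)   # <p><b>Edited</b></p>
```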

So, that shows you how easy it is to get NGINX Plus deployed and configured on GCP using Cloud Launcher [now GCP Marketplace].