Node.js is the leading tool for creating server applications in JavaScript, the world’s most popular programming language. Offering the functionality of both a web server and an application server, Node.js is now considered a key tool for all kinds of microservices‑based development and delivery.

Node.js can replace or augment Java or .NET for backend application development.

Node.js is single‑threaded and uses nonblocking I/O, allowing it to scale and support tens of thousands of concurrent operations. It shares these architectural characteristics with NGINX and solves the C10K problem – supporting more than 10,000 concurrent connections – that NGINX was also invented to solve. Node.js is well‑known for high performance and developer productivity.

So, what could possibly go wrong?

Node.js has a few weak points and vulnerabilities that can make Node.js‑based systems prone to underperformance or even crashes. Problems arise more frequently when a Node.js‑based web application experiences rapid traffic growth.

Also, Node.js is a great tool for creating and running application logic that produces the core, variable content for your web page. But it’s not so great for serving static content – images and JavaScript files, for example – or load balancing across multiple servers.

To get the most out of Node.js, you need to cache static content, to proxy and load balance among multiple application servers, and to manage port contention between clients, Node.js, and helpers, such as servers running Socket.IO. NGINX can be used for all of these purposes, making it a great tool for Node.js performance tuning.

Use these tips to improve Node.js application performance:

- Implement a reverse proxy server

- Cache static files

- Load balance traffic across multiple servers

- Proxy WebSocket connections

- Implement SSL/TLS and HTTP/2

Note: A quick fix for Node.js application performance is to modify your Node.js configuration to take advantage of modern, multi‑core servers. Check out the Node.js documentation on the cluster module to learn how to have Node.js spawn separate worker processes, one for each CPU core on your web server. The operating system then schedules the workers across the available cores, so the application is no longer limited to a single core.

Tip 1 – Implement a Reverse Proxy Server

We at NGINX, Inc. are always a bit horrified when we see application servers directly exposed to incoming Internet traffic, used at the core of high‑performance sites. This includes many WordPress‑based sites, for example, as well as Node.js sites.

Node.js, to a greater extent than most application servers, is designed for scalability, and its web server side can handle a lot of Internet traffic reasonably well. But web serving is not the raison d’être for Node.js – not what it was really built to do.

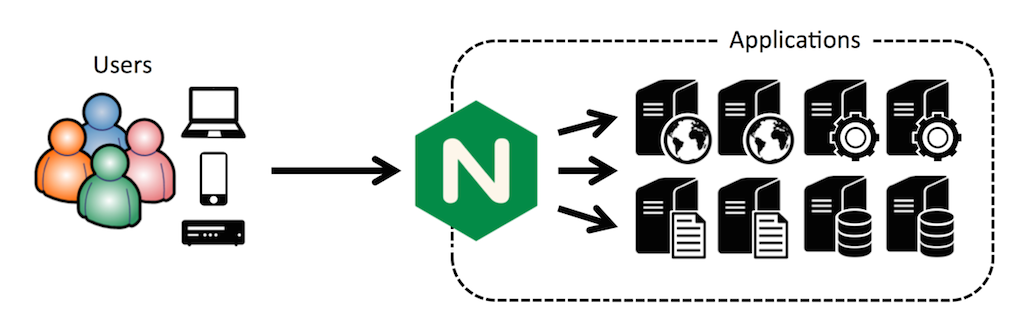

If you have a high‑traffic site, the first step in increasing application performance is to put a reverse proxy server in front of your Node.js server. This protects the Node.js server from direct exposure to Internet traffic and allows you a great deal of flexibility in using multiple application servers, in load balancing across the servers, and in caching content.

Putting NGINX in front of an existing server setup as a reverse proxy server, followed by additional uses, is a core use case for NGINX, implemented by tens of millions of websites all over the world.

There are specific advantages to using NGINX as a Node.js reverse proxy server, including:

- Simplifying privilege handling and port assignments

- More efficiently serving static images (see next tip)

- Managing Node.js crashes successfully

- Mitigating DoS attacks

Note: These tutorials explain how to use NGINX as a reverse proxy server in Ubuntu 14.04 or CentOS environments, and they are a useful overview for anyone putting NGINX in front of Node.js.
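
As a rough illustration of the setup described in this tip, the following sketch puts NGINX on port 80 in front of a single Node.js process. The upstream address `127.0.0.1:3000` and the server name `example.com` are assumptions made for illustration, not values from any setup described here.

```nginx
# Minimal reverse proxy sketch (goes inside the http {} context).
# Assumption: the Node.js application listens on 127.0.0.1:3000.
server {
    listen 80;
    server_name example.com;  # hypothetical host name

    location / {
        # Forward every request to the Node.js application
        proxy_pass http://127.0.0.1:3000;

        # Pass the original host and client address on to the application
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
    }
}
```

Because NGINX is the process that binds port 80, the Node.js application can run as an unprivileged user on a high port, which is one way the privilege and port handling mentioned above becomes simpler.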

Tip 2 – Cache Static Files

As usage of a Node.js‑based site grows, the server will start to show the strain. There are two things you want to do at this point:

- Get the most out of the Node.js server

- Make it easy to add application servers and load balance among them

This is actually easy to do. Begin by implementing NGINX as a reverse proxy server, as described in the previous tip. This makes it easy to implement caching, load balancing (when you have multiple Node.js servers), and more.

The website for Modulus, an application container platform, has a useful article on supercharging Node.js application performance with NGINX. With Node.js doing all the work on its own, the author’s site was able to serve an average of nearly 900 requests per second. With NGINX as a reverse proxy server, serving static content, the same site served more than 1600 requests per second – a performance improvement of nearly 2x.

Doubling performance buys you time to take additional steps to accommodate further growth, such as reviewing (and possibly improving) your site design, optimizing your application code, and deploying additional application servers.

Following is the configuration code that works for a website running on Modulus:

    server {
        listen 80;
        server_name static-test-47242.onmodulus.net;

        root /mnt/app;
        index index.html index.htm;

        location /static/ {
            try_files $uri $uri/ =404;
        }

        location /api/ {
            proxy_pass http://node-test-45750.onmodulus.net;
        }
    }

This detailed article from Patrick Nommensen of NGINX, Inc. explains how he caches static content from his personal blog, which runs on the Ghost open source blogging platform, a Node.js application. Although some of the details are Ghost‑specific, you can reuse much of the code for other Node.js applications.

For instance, in the NGINX location block, you’ll probably want to exempt some content from being cached, such as the administrative interface for a blogging platform. Here’s configuration code that disables caching of the Ghost administrative interface:

    location ~ ^/(?:ghost|signout) {
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header Host $http_host;
        proxy_pass http://ghost_upstream;
        add_header Cache-Control "no-cache, private, no-store, must-revalidate, max-stale=0, post-check=0, pre-check=0";
    }

For general information about serving static content, see the NGINX Plus Admin Guide. The Admin Guide includes configuration instructions, multiple options for responding to successful or failed attempts to find a file, and optimization approaches for achieving even faster performance.

Caching of static files on the NGINX server significantly offloads work from the Node.js application server, allowing it to achieve much higher performance.
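
When the static files live on the Node.js server rather than on the NGINX host, NGINX can still cache them after the first request. The following is a minimal sketch of that approach, not the configuration used by either site mentioned above. The cache directory, the zone name `STATIC`, the `/static/` prefix, and the upstream address are all assumptions.

```nginx
# Goes inside the http {} context.
# Assumption: /var/cache/nginx/node_static is writable by the NGINX worker user.
proxy_cache_path /var/cache/nginx/node_static levels=1:2
                 keys_zone=STATIC:10m max_size=1g inactive=60m;

server {
    listen 80;

    # Static assets: served from the NGINX cache after the first request
    location /static/ {
        proxy_cache STATIC;
        proxy_cache_valid 200 30m;   # keep successful responses for 30 minutes
        proxy_cache_valid 404 1m;    # keep "not found" responses briefly
        add_header X-Cache $upstream_cache_status;  # HIT, MISS, EXPIRED, ...
        proxy_pass http://127.0.0.1:3000;
    }

    # Everything else goes to Node.js without caching
    location / {
        proxy_pass http://127.0.0.1:3000;
    }
}
```

The `X-Cache` response header makes it easy to confirm from a browser or `curl` whether a given asset was served from the cache or fetched from Node.js.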

Tip 3 – Implement a Node.js Load Balancer

The real key to high – that is, nearly unlimited – performance for Node.js applications is to run multiple application servers and balance loads across all of them.

Node.js load balancing can be particularly tricky because Node.js enables a high level of interaction between JavaScript code running in the web browser and JavaScript code running on the Node.js application server, with JSON objects as the medium of data exchange. This implies that a given client session runs continually on a specific application server, and session persistence is inherently difficult to achieve with multiple application servers.

One of the major advantages of the Internet and the web is their high degree of statelessness, which includes the ability for client requests to be fulfilled by any server with access to a requested file. Node.js subverts statelessness and works best in a stateful environment, where the same server consistently responds to requests from any specific client.

This requirement can best be met by NGINX Plus, rather than NGINX Open Source. The two versions of NGINX are quite similar, but one major difference is their support for different load balancing algorithms.

NGINX supports stateless load balancing methods:

- Round Robin – A new request goes to the next server in a list.

- Least Connections – A new request goes to the server that has the fewest active connections.

- IP Hash – A new request goes to the server assigned to a hash of the client’s IP address.

Only one of these methods, IP Hash, reliably sends a given client’s requests to the same server, which benefits Node.js applications. However, IP Hash can easily lead to one server receiving a disproportionate number of requests, at the expense of other servers, as described in this blog post about load balancing techniques. This method supports statefulness at the expense of potentially suboptimal allocation of requests across server resources.
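
The three methods listed above map onto the `upstream` block roughly as follows. This is a sketch under assumed values: the group name `node_backend` and the three server addresses are hypothetical.

```nginx
# Goes inside the http {} context.
upstream node_backend {
    # With no method directive, NGINX uses Round Robin.
    # least_conn;   # uncomment instead of ip_hash for Least Connections
    ip_hash;        # IP Hash: requests from one client IP go to the same server

    server 10.0.0.11:3000;
    server 10.0.0.12:3000;
    server 10.0.0.13:3000;
}

server {
    listen 80;

    location / {
        proxy_pass http://node_backend;
    }
}
```

Only one method directive should be active in a given `upstream` block. Switching between methods is a one-line change, so it is practical to compare how evenly each one spreads your own traffic.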

Unlike NGINX, NGINX Plus supports session persistence. With session persistence in use, the same server reliably receives all requests from a given client. The advantages of Node.js, with its stateful communication between client and server, and NGINX Plus, with its advanced load balancing capability, are both maximized.

So you can use NGINX or NGINX Plus to support load balancing across multiple Node.js servers. Only with NGINX Plus, however, are you likely to achieve both maximum load‑balancing performance and Node.js‑friendly statefulness. The application health checks and monitoring capabilities built into NGINX Plus are useful here as well.

NGINX Plus also supports session draining, which allows an application server to gracefully complete current sessions after a request for the server to take itself out of service.
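
For comparison, here is a sketch of how session persistence, health checks, and draining might look in an NGINX Plus configuration. The `sticky`, `health_check`, and `drain` settings shown here are NGINX Plus features and are not available in NGINX Open Source. The group name, cookie name `srv_id`, and server addresses are assumptions.

```nginx
# NGINX Plus only. Goes inside the http {} context.
upstream node_app {
    zone node_app 64k;            # shared memory zone, required for health checks

    server 10.0.0.11:3000;
    server 10.0.0.12:3000;
    # To drain a server, add the "drain" parameter: it keeps serving clients
    # already bound to it but receives no new sessions.
    # server 10.0.0.13:3000 drain;

    # Session persistence: NGINX Plus sets a cookie naming the chosen server
    sticky cookie srv_id expires=1h path=/;
}

server {
    listen 80;

    location / {
        proxy_pass http://node_app;
        health_check;             # periodic active checks of each server
    }
}
```

Unlike IP Hash, cookie-based persistence leaves the choice of server for each new session to the load balancing method, so persistence does not by itself skew the distribution of clients.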

Tip 4 – Proxy WebSocket Connections

HTTP, in all versions, is designed for “pull” communications, where the client requests files from the server. WebSocket is a tool to enable “push” and “push/pull” communications, where the server can proactively send data that the client hasn’t requested.

The WebSocket protocol makes it easier to support more robust interaction between client and server while reducing the amount of data transferred and minimizing latency. When needed, a full‑duplex connection, with client and server both initiating and receiving requests, is achievable.

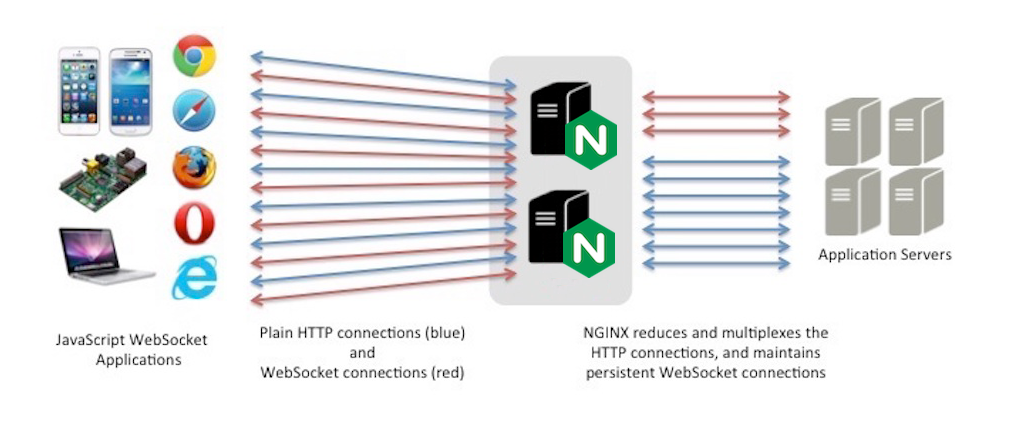

The WebSocket protocol has a robust JavaScript interface and as such is a natural fit for Node.js as the application server – and, for web applications with moderate transaction volumes, as the web server as well. When transaction volumes rise, it makes sense to insert NGINX between clients and the Node.js web server, using NGINX or NGINX Plus to cache static files and to load balance among multiple application servers.

Node.js is often used in conjunction with Socket.IO – a WebSocket API that has become quite popular for use along with Node.js applications. This can cause port 80 (for HTTP) or port 443 (for HTTPS) to become quite crowded, and the solution is to proxy to the Socket.IO server. You can use NGINX for the proxy server, as described above, and also gain additional functionality such as static file caching, load balancing, and more.

Following is code for a server.js Node.js application file that listens on port 5000. It runs a Socket.IO server (not a web server); NGINX, acting as the proxy server, routes Socket.IO requests to this port:

    // Socket.IO server listening on port 5000 (API of Socket.IO 1.0 and later,
    // matching the io() call used by the client code)
    var io = require('socket.io')(5000);

    io.on('connection', function (socket) {
        // Store the nickname on the socket object for this connection
        socket.on('set nickname', function (name) {
            socket.nickname = name;
            socket.emit('ready');
        });

        // Log the sender's nickname when a chat message arrives
        socket.on('msg', function () {
            console.log('Chat message by', socket.nickname);
        });
    });

Within your index.html file, add the following code to connect to your server application and instantiate a WebSocket between the application and the user’s browser:

    <script src="/socket.io/socket.io.js"></script>
    <script>
        var socket = io(); // your initialization code here.
    </script>

For complete instructions, including NGINX configuration, see our blog post about using NGINX and NGINX Plus with Node.js and Socket.IO. For more depth about potential architectural and infrastructure issues for web applications of this kind, see our blog post about real‑time web applications and WebSocket.
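
As a rough sketch of the NGINX side of this arrangement, the following configuration accepts client connections on port 80 and proxies Socket.IO traffic, including the WebSocket upgrade, to the server on port 5000. The upstream name `socketio_nodes` and the loopback address are assumptions. The `/socket.io/` prefix is the default path used by the Socket.IO client script shown above.

```nginx
# Goes inside the http {} context.

# Send "Connection: upgrade" upstream only when the client asked to upgrade
map $http_upgrade $connection_upgrade {
    default upgrade;
    ''      close;
}

upstream socketio_nodes {
    server 127.0.0.1:5000;   # the Socket.IO server from server.js
}

server {
    listen 80;

    location /socket.io/ {
        proxy_pass http://socketio_nodes;

        # WebSocket requires HTTP/1.1 and explicit Upgrade/Connection headers,
        # because NGINX does not forward these hop-by-hop headers by default
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection $connection_upgrade;
        proxy_set_header Host $host;
    }
}
```

With more than one Socket.IO server in the upstream group, the group also needs a persistence method such as `ip_hash`, because Socket.IO's fallback transports send several HTTP requests per session and each must reach the same server.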

Tip 5 – Implement SSL/TLS and HTTP/2

More and more sites are using SSL/TLS to secure all user interaction on the site. It’s your decision whether and when to make this move, but if and when you do, NGINX supports the transition in two ways:

- You can terminate an SSL/TLS connection to the client in NGINX, once you’ve set up NGINX as a reverse proxy. The Node.js server sends and receives unencrypted requests and content back and forth with the NGINX reverse proxy server.

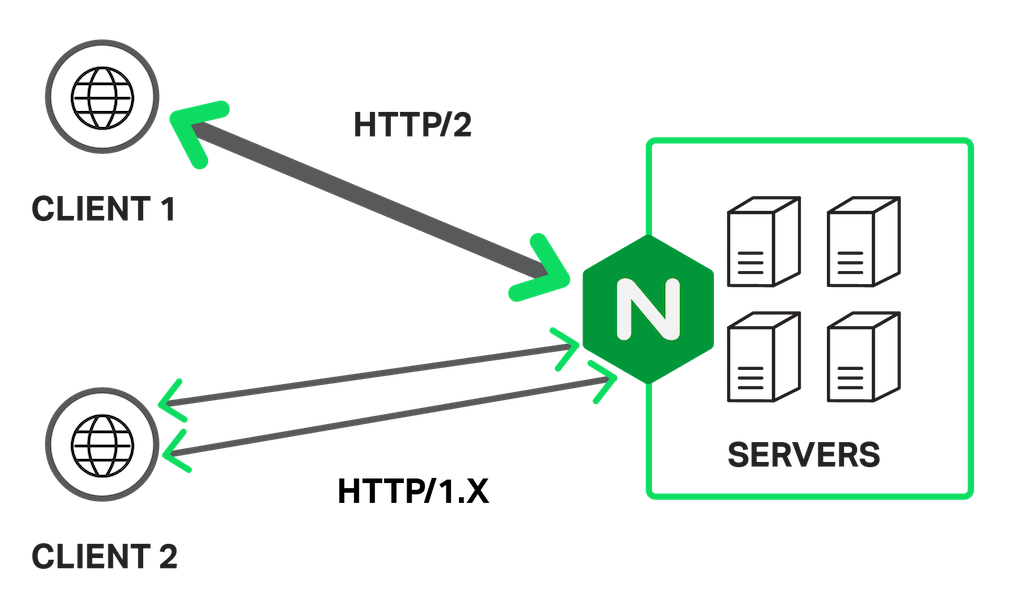

- Early indications are that using HTTP/2, the new version of the HTTP protocol, may largely or completely offset the performance penalty that is otherwise imposed by the use of SSL/TLS. NGINX supports HTTP/2 and you can terminate HTTP/2 along with SSL/TLS, again eliminating any need for changes in the Node.js application server(s).

Among the implementation steps you need to take are updating the URL in the Node.js configuration file, establishing and optimizing secure connections in your NGINX configuration, and using SPDY or HTTP/2 if desired. Adding HTTP/2 support means that browser versions that support HTTP/2 communicate with your application using the new protocol; older browser versions continue to use HTTP/1.x.

The following configuration code is for a Ghost blog using SPDY, as described here. It includes advanced features such as OCSP stapling. For considerations around using NGINX for SSL termination, including the OCSP stapling option, see here. For a general overview of the same topics, see here.

You need to make only minor alterations to configure your Node.js application and to upgrade from SPDY to HTTP/2, now or when SPDY support goes away in early 2016.

    server {
        server_name domain.com;
        listen 443 ssl spdy;
        spdy_headers_comp 6;
        spdy_keepalive_timeout 300;
        keepalive_timeout 300;
        ssl_certificate_key /etc/nginx/ssl/domain.key;
        ssl_certificate /etc/nginx/ssl/domain.crt;
        ssl_session_cache shared:SSL:10m;
        ssl_session_timeout 24h;
        ssl_buffer_size 1400;
        ssl_stapling on;
        ssl_stapling_verify on;
        ssl_trusted_certificate /etc/nginx/ssl/trust.crt;
        resolver 8.8.8.8 8.8.4.4 valid=300s;
        add_header Strict-Transport-Security 'max-age=31536000; includeSubDomains';
        add_header X-Cache $upstream_cache_status;

        location / {
            proxy_cache STATIC;
            proxy_cache_valid 200 30m;
            proxy_cache_valid 404 1m;
            proxy_pass http://ghost_upstream;
            proxy_ignore_headers X-Accel-Expires Expires Cache-Control;
            proxy_ignore_headers Set-Cookie;
            proxy_hide_header Set-Cookie;
            proxy_hide_header X-powered-by;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto https;
            proxy_set_header Host $http_host;
            expires 10m;
        }

        location /content/images {
            alias /path/to/ghost/content/images;
            access_log off;
            expires max;
        }

        location /assets {
            alias /path/to/ghost/themes/uno-master/assets;
            access_log off;
            expires max;
        }

        location /public {
            alias /path/to/ghost/built/public;
            access_log off;
            expires max;
        }

        location /ghost/scripts {
            alias /path/to/ghost/core/built/scripts;
            access_log off;
            expires max;
        }

        location ~ ^/(?:ghost|signout) {
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header Host $http_host;
            proxy_pass http://ghost_upstream;
            add_header Cache-Control "no-cache, private, no-store, must-revalidate, max-stale=0, post-check=0, pre-check=0";
            proxy_set_header X-Forwarded-Proto https;
        }
    }
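
The move from SPDY to HTTP/2 mentioned above mostly comes down to the `listen` line and the SPDY-specific directives. The following sketch shows only the lines that change, assuming an NGINX build that includes the HTTP/2 module. Everything not shown stays as in the SPDY configuration.

```nginx
server {
    server_name domain.com;

    # Was: listen 443 ssl spdy;
    listen 443 ssl http2;

    # The spdy_headers_comp and spdy_keepalive_timeout directives are removed;
    # they belong to the SPDY module and have no effect on HTTP/2.
    keepalive_timeout 300;

    ssl_certificate_key /etc/nginx/ssl/domain.key;
    ssl_certificate /etc/nginx/ssl/domain.crt;

    # Remaining ssl_*, add_header, and location blocks are unchanged.
}
```

On recent NGINX releases (1.25.1 and later), the `http2` parameter of `listen` is deprecated in favor of a separate `http2 on;` directive, so check which form your version expects. Either way, no change is needed in the Node.js application, which continues to receive plain HTTP from NGINX.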

Conclusion

This blog post describes some of the most important performance improvements you can make in your Node.js applications. It focuses on the addition of NGINX to your application mix alongside Node.js – by using NGINX as a reverse proxy server, to cache static files, for load balancing, for proxying WebSocket connections, and to terminate the SSL/TLS and HTTP/2 protocols.

The combination of NGINX and Node.js is widely recognized as a way to create new microservices‑friendly applications or add flexibility and capability to existing SOA‑based applications that use Java or Microsoft .NET. This post helps you optimize your Node.js applications and, if you choose, bring the partnership between Node.js and NGINX to life.